The Model Context Protocol (MCP) is an open standard designed to solve the chaotic ‘M×N integration problem’ developers face when connecting AI models to their tools and data sources. Think of it as a universal power adapter for AI. Instead of building a custom connector for every single combination, MCP provides a standard, unified way for everything to talk to each other. This breaks a huge bottleneck, reducing infrastructure setup time by an estimated 90% and freeing developers to build truly context-aware applications.

Why AI Needs a Universal Language

Right now, most AI models are like brilliant analysts stuck in a room with a handful of old, incomplete files. They have incredible reasoning power but are cut off from the real-time, relevant information that lives inside your company’s tools, such as GitHub, Slack, Jira, or your private databases. This forces developers into a messy integration nightmare.

Without a common standard, we’re all stuck in the “M×N problem.” If you have M different AI models and N different tools, you end up having to build and maintain M×N separate, fragile integrations. Every time you add a new model or tool, the complexity explodes. You’re constantly writing glue code, draining engineering resources, and slowing down any real innovation. It’s not just inefficient; it’s a completely unsustainable way to build.

The Problem with Custom Integrations

Patching everything together with one-off integrations creates a host of problems that kill progress and add unnecessary risk:

- Massive Development Overhead: Engineering teams waste countless hours writing, testing, and maintaining unique connectors for each tool, effectively re-solving the same problem over and over.

- Scalability Bottlenecks: As you add more models and data sources, the web of connections becomes a tangled mess, making it nearly impossible to adapt or bring in new tech without a major overhaul.

- Security Vulnerabilities: These custom-built connectors often lack standardized security measures, opening up potential weak points for data to be exposed.

- Poor Model Performance: Inconsistent data formatting and retrieval logic means you’re often feeding the model incomplete or irrelevant information. This is a primary cause of inaccurate outputs and hallucinations.

The Model Context Protocol was created as an open standard to fix this mess by tackling the ‘M×N problem’ directly. By offering a universal interface, MCP dramatically simplifies how you connect things. It’s such a fundamental improvement that major AI providers like OpenAI and Google DeepMind have already adopted it. This move has kicked off an explosion of community support, leading to over 1,000 open-source connectors and a rapidly growing ecosystem.

A Smarter Way to Provide Context

The Model Context Protocol (MCP) changes this tangled M×N problem into a clean, simple M+N equation. Instead of building a unique bridge for every single connection, you just build one compliant client for your AI application and one compliant server for each tool. Suddenly, everything becomes plug-and-play.
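
To make the arithmetic concrete, here is a small sketch that counts the components you would have to build under each approach. The function names and the example counts (5 models, 20 tools) are illustrative assumptions, not part of any MCP library.

```python
def point_to_point_integrations(models: int, tools: int) -> int:
    """Every model-tool pair needs its own custom connector (M x N)."""
    return models * tools


def mcp_components(models: int, tools: int) -> int:
    """One MCP client per AI application plus one MCP server per tool (M + N)."""
    return models + tools


if __name__ == "__main__":
    m, n = 5, 20  # illustrative counts only
    print(point_to_point_integrations(m, n))  # 100 custom connectors
    print(mcp_components(m, n))               # 25 standard components
```

Adding a sixth model in this example costs 20 new connectors in the point-to-point world, but only one new client with MCP.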

This elegant approach is the core idea behind Context Engineering, a practice focused on giving AI models the precise, relevant information they need to perform at their best. You can learn more about this foundational concept in our detailed guide on what Context Engineering is.

The table below breaks down just how different this approach is from the old way of doing things.

MCP vs Traditional AI Integrations

| Aspect | Traditional Integration | Model Context Protocol (MCP) |
|---|---|---|
| Effort | High; requires custom code for each model-tool pair (M×N). | Low; requires one client per app and one server per tool (M+N). |
| Scalability | Poor; the number of connectors grows multiplicatively (M×N) with each new addition. | High; easy to add new models or tools without new integrations. |
| Maintenance | High; requires constant updates for multiple, brittle connectors. | Low; standardized components are easier to maintain and update. |
| Security | Inconsistent; security practices vary by integration. | Standardized; follows a unified, more secure protocol. |
| Ecosystem | Fragmented; relies on proprietary or one-off solutions. | Unified; fosters a large, open-source community of connectors. |
| Flexibility | Low; locked into specific model-tool pairings. | High; easily swap models or data sources without re-coding. |

Ultimately, MCP makes building sophisticated AI systems much more manageable and secure.

A platform built on MCP, like the Context Engineer MCP, acts as a centralized and secure gateway. It ensures that your AI model—whether it’s an AI coding assistant in your IDE or a custom agent—receives only the necessary context, exactly when it’s needed, without ever getting direct access to your sensitive data. This is the key to building smarter, safer, and more scalable AI applications.

Understanding the MCP Architecture

To really get why the Model Context Protocol (MCP) is such a big deal for AI development, you have to look at how it’s built. At its heart is a simple but incredibly effective client-server model. This setup creates a clean break between your AI applications and the scattered data they need to function, cutting through the integration mess that bogs down so many projects.

The whole thing is built on two key pieces:

- MCP Servers: Think of these as secure gateways to your data. Imagine a specialized librarian for each data source—one for your GitHub repos, another for your Slack channels, and maybe one for Jira tickets. Each server knows exactly how to securely fetch and format information from its assigned source.

- MCP Clients: These are embedded right into your AI tools, like a coding assistant or a custom automation script. The client’s job is simple: it figures out what context is needed and sends a request to the right MCP server. It’s the part that lets your AI “ask” for the information it needs to do its job.

This separation is what makes it all work. The AI application (the client) doesn’t have to get its hands dirty with authenticating to the GitHub API or parsing messy Slack conversations. All it needs to know is how to ask the right server for the right data.

The Request and Response Flow

The chatter between these two components follows a secure, predictable path. When your AI application hits a point where it needs outside information, the MCP client sends a standardized request to the appropriate MCP server. That server checks the request, grabs the data, tidies it up into a format the model can understand, and sends it back.

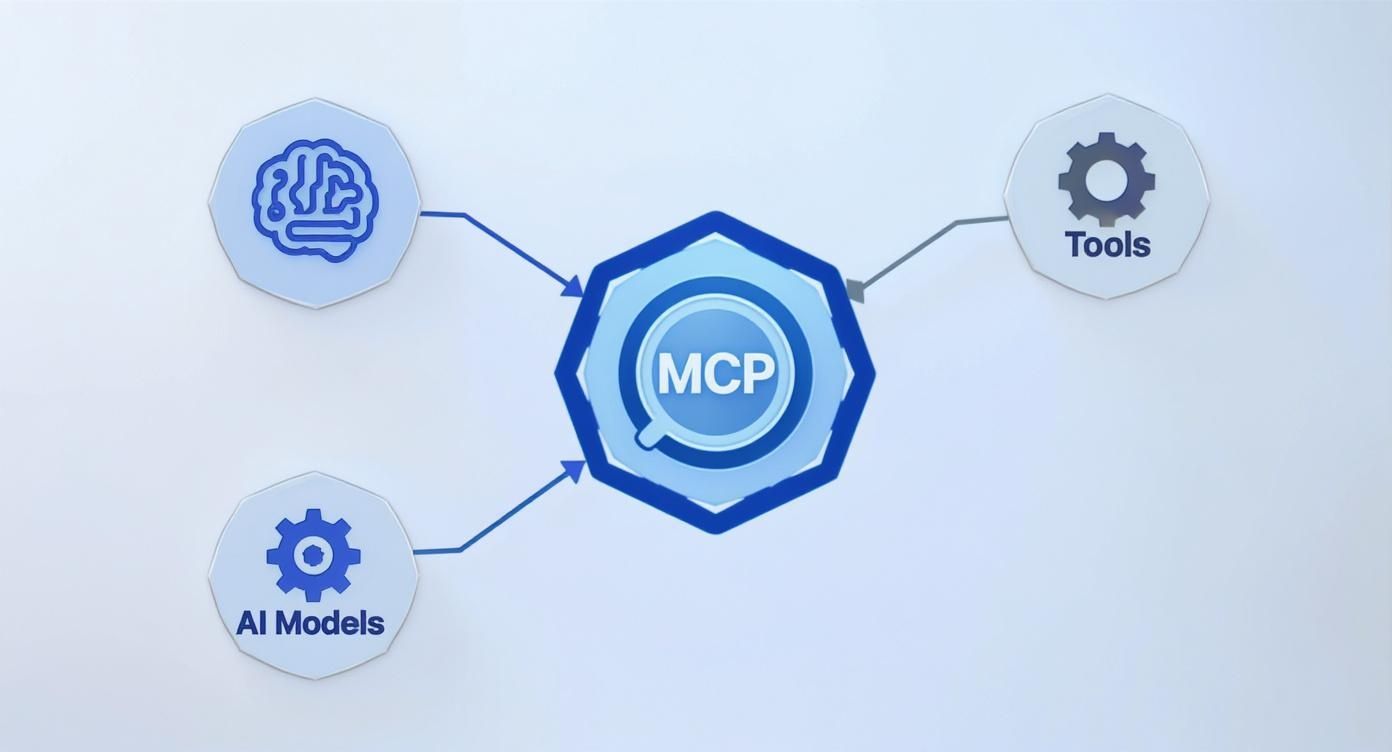

This infographic paints a great picture of MCP as a kind of universal adapter, plugging AI models into all sorts of tools and data sources without any fuss.

As you can see, the protocol acts as a central hub. It standardizes how everything talks to everything else, so you don’t have to build a custom bridge every time you want to connect a new model to a new tool.

This elegant request-and-response cycle makes sure the AI model gets exactly the context it needs, right when it needs it. For example, instead of force-feeding an entire codebase to a model, the client can just ask for the specific functions or class definitions related to a new feature. The difference in efficiency is massive.
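
The request-and-response cycle described above can be sketched in a few lines. MCP messages use JSON-RPC 2.0 envelopes, and `tools/call` is the method a client uses to invoke a server tool. Everything else here is an assumption for illustration: the `get_symbol` tool, the `FAKE_REPO` dictionary, and the helper names are hypothetical, and a real deployment would use an MCP SDK over a transport such as stdio or HTTP rather than direct function calls.

```python
import json

# Hypothetical in-memory "repository" standing in for a real data source.
FAKE_REPO = {
    "UserService": "class UserService:\n    def update_profile(self, user_id, data): ...",
}


def build_request(request_id: int, symbol: str) -> str:
    """Client side: wrap a tool call in a JSON-RPC 2.0 envelope."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": "get_symbol", "arguments": {"symbol": symbol}},
    })


def handle_request(raw: str) -> str:
    """Server side: check the request, fetch the data, format the reply."""
    req = json.loads(raw)
    if req.get("method") != "tools/call":
        # -32601 is the standard JSON-RPC "method not found" error code.
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "error": error})

    symbol = req["params"]["arguments"]["symbol"]
    source = FAKE_REPO.get(symbol)
    if source is None:
        result = {"content": [{"type": "text", "text": f"Unknown symbol: {symbol}"}],
                  "isError": True}
    else:
        result = {"content": [{"type": "text", "text": source}], "isError": False}
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


if __name__ == "__main__":
    reply = json.loads(handle_request(build_request(1, "UserService")))
    print(reply["result"]["content"][0]["text"])
```

The client only needs to know the tool name and its arguments; how the server authenticates to the underlying source stays hidden behind `handle_request`.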

Centralized Context Management

This design leads to a powerful idea: centralized context management. By funneling all data requests through MCP servers, you create a single, unified layer that controls how your AI interacts with the outside world. This solves a few huge problems all at once.

First, it brings consistency. Every AI tool gets its context from the same official sources, always formatted in the same way. This is a game-changer for cutting down on AI hallucinations, because models are far less likely to make things up when they’re fed reliable, up-to-date information. Research shows that providing accurate context can reduce model hallucinations by over 50%.

Centralized context management through an MCP server like the one provided by Context Engineer ensures that your AI tools always have access to the most current and relevant project information. This is how you move from unreliable, generic AI outputs to consistently accurate, production-ready results.

This centralized setup also makes security and governance a whole lot easier. Instead of juggling credentials and permissions across dozens of apps and custom connectors, you manage it all in one place: the MCP server. You can set specific rules about which apps can see what data, keeping your sensitive information locked down.
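
As a rough illustration of what "one place" for permissions could look like, the sketch below keeps a deny-by-default policy on the server side. The `AccessPolicy` class, the client IDs, and the source names are hypothetical; the MCP specification does not prescribe this structure, and a managed platform would have its own policy model.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class AccessPolicy:
    """Maps each client (AI application) to the data sources it may read."""
    allowed: Dict[str, Set[str]] = field(default_factory=dict)

    def grant(self, client_id: str, source: str) -> None:
        self.allowed.setdefault(client_id, set()).add(source)

    def is_allowed(self, client_id: str, source: str) -> bool:
        # Deny by default: unknown clients and unlisted sources are rejected.
        return source in self.allowed.get(client_id, set())


def fetch_context(policy: AccessPolicy, client_id: str, source: str) -> str:
    """Check the policy before touching the data source."""
    if not policy.is_allowed(client_id, source):
        raise PermissionError(f"{client_id} may not read {source}")
    return f"<context from {source}>"  # placeholder for a real lookup


if __name__ == "__main__":
    policy = AccessPolicy()
    policy.grant("coding-assistant", "github")
    print(fetch_context(policy, "coding-assistant", "github"))
```

Because every request passes through `fetch_context`, revoking access means changing one policy entry instead of rotating credentials in each connector.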

MCP does more than just simplify integrations. It also makes AI models more reproducible by ensuring all the necessary context—datasets, environment specs, and hyperparameters—is managed from a central point. This is what allows AI systems to access and act on the most relevant data, which in turn improves their ability to give context-aware answers. You can discover more about how MCP is shaping AI development and see its adoption across the industry. This focus on centralized, secure access is precisely why MCP has become so important for building serious, enterprise-grade AI applications.

Implementing MCP with Context Engineering

Knowing the theory behind the Model Context Protocol (MCP) is one thing, but actually putting it to work is where the magic happens. You could, of course, try to build your own MCP servers and clients from the ground up. But that road is paved with serious engineering overhead, ongoing maintenance, and tricky security puzzles. This is exactly why an enterprise-grade platform built on the MCP standard is so valuable.

The Context Engineer MCP platform offers a secure, scalable, and production-ready way to implement the protocol. It’s built to handle all the complex infrastructure for you, letting your developers skip the tedious setup and get straight to building context-aware AI features.

A Production-Ready Path to Adoption

Getting a solid MCP system running is about more than just spinning up a few servers. You have to wrestle with authentication, lock down data security, tune performance, and build custom connectors for every tool in your stack. This is the kind of heavy lifting a dedicated platform takes off your plate.

Instead of your team sinking weeks—or even months—into building and debugging a bespoke MCP infrastructure, a platform like Context Engineer gives you a proven solution that plugs right into your existing workflow. This completely changes the game, turning a massive infrastructure project into a straightforward integration.

By using a managed MCP implementation like the Context Engineer MCP, development teams can slash their infrastructure setup time by an estimated 90%. This frees up critical engineering talent to focus on building innovative, AI-powered features that actually move the needle for the business, rather than just managing the plumbing.

Beyond the Basics: An Enterprise-Grade Solution

A real enterprise solution needs to do more than just implement the basic protocol. The Context Engineer MCP extends the protocol’s power with features built specifically for professional development teams.

This screenshot of the Context Engineering interface gives you a sense of how it centralizes all your context sources into a single, clear dashboard.

The platform acts as a single pane of glass, letting you monitor and control how your AI applications pull information from essential tools like GitHub, Jira, and Slack.

Here’s what really sets a managed implementation apart:

- Pre-Built Connectors: You get instant, secure access to the most popular development tools without having to write a single line of custom integration code. This includes deep connections to code repositories, project management systems, and team communication platforms.

- Granular Access Controls: You can implement fine-grained security policies to control exactly which AI agents can access specific data sources. For example, you can set it up so an AI coding assistant can only see the relevant codebase, not sensitive conversations in your project management tool.

- Developer-Friendly Interface: Anyone on your team can manage all your context sources through an intuitive dashboard. It simplifies adding new tools, monitoring usage, and fixing connections, making the whole system easy to manage.

For a deeper look at how these pieces fit together, check out our guide on Context Engineering, which breaks down the core ideas behind building an effective context layer for your AI.

At the end of the day, opting for a platform like Context Engineer isn’t just about buying another tool. It’s about adopting a proven, secure, and efficient strategy for bringing the Model Context Protocol to life. It lets your team get back to what they do best: building great software.

Weaving MCP Into Your Daily Workflow

Talking about a new protocol can feel a bit theoretical. The real magic of the Model Context Protocol (MCP) happens when you plug it into the tools you already use every day. This isn’t about ripping out your current setup; it’s about making your favorite tools smarter and way more effective.

Let’s make this concrete. Imagine you’re trying to connect an AI coding assistant, like Cursor, to a private GitHub repository. This is a classic headache for developers. Most AI tools just can’t wrap their heads around a large, private codebase on their own.

Without MCP, your coding assistant is flying blind. It only sees the file you have open, forcing you to constantly copy and paste snippets to give it any useful context. But when you introduce an MCP server like the Context Engineer MCP to act as a bridge, the entire game changes.

Giving Your AI X-Ray Vision Into Your Codebase

Getting this set up is surprisingly straightforward. You just configure the MCP server to securely connect to your GitHub repo with an access token. Think of the server as a dedicated, hyper-organized librarian for your project.

Then, you point your AI coding assistant (the MCP client) to that server. That’s it. From that point on, any time you ask the AI to refactor code, hunt down a bug, or build a new feature, it gets the context it needs automatically.
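
The two setup steps above usually come down to a small piece of client configuration. Several MCP clients read a JSON file with a top-level `mcpServers` map that tells the client how to launch each server; the exact file name and supported fields vary by client, so check its documentation. In the sketch below, the server name `github-context`, the command `example-github-mcp-server`, and the `GITHUB_TOKEN` variable are all hypothetical placeholders.

```python
import json
import os


def build_client_config(server_name: str, command: str, token_env_var: str) -> dict:
    """Build an `mcpServers`-style entry telling a client how to launch a server."""
    # Read the access token from the environment so it is never hard-coded here.
    token = os.environ.get(token_env_var, "")
    return {
        "mcpServers": {
            server_name: {
                "command": command,  # hypothetical server executable
                "args": [],
                "env": {token_env_var: token},
            }
        }
    }


if __name__ == "__main__":
    config = build_client_config(
        "github-context", "example-github-mcp-server", "GITHUB_TOKEN"
    )
    # The printed JSON contains the token; write it to a private file, not a log.
    print(json.dumps(config, indent=2))
```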

Here’s what that looks like in action:

- You give a prompt: You ask your AI, “Refactor the `UserService` to handle asynchronous profile updates and make sure it uses our standard error handling.”

- The client asks for help: The assistant’s MCP client pings the MCP server, asking for everything related to `UserService` and the project’s error patterns.

- The server finds the goods: The server securely pulls the right class definitions, any related helper functions, and the established error handling modules from your GitHub repo.

- The AI gets the full picture: This hand-picked context is sent straight to the AI model. Now, it has exactly what it needs to understand your request within the unique architecture of your project.
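
To give a feel for the "server finds the goods" step, here is a minimal sketch of pulling a single class definition out of a larger file instead of returning the whole thing. It assumes the project is written in Python and uses the standard-library `ast` module; `SAMPLE_SOURCE` and `extract_class` are hypothetical, and a production server would more likely rely on a search index or language server.

```python
import ast
from typing import Optional

# Hypothetical file contents; only UserService is relevant to the prompt.
SAMPLE_SOURCE = '''
import logging

class UserService:
    def update_profile(self, user_id, data):
        return {"id": user_id, **data}

class BillingService:
    def charge(self, user_id, amount):
        return amount
'''


def extract_class(source: str, class_name: str) -> Optional[str]:
    """Return the source text of one class, or None if it is not defined."""
    tree = ast.parse(source)
    for node in ast.walk(tree):
        if isinstance(node, ast.ClassDef) and node.name == class_name:
            return ast.get_source_segment(source, node)
    return None


if __name__ == "__main__":
    print(extract_class(SAMPLE_SOURCE, "UserService"))
```

Only the `UserService` definition is returned, so the unrelated `BillingService` code never reaches the model's context window.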

The code you get back isn’t just correct—it’s consistent with your team’s established practices. You never had to leave your IDE to dig through files. Considering developers can spend up to 30% of their time just trying to understand existing code, MCP directly tackles that lost time and turns it back into productive work.

With a platform like the Context Engineer MCP, this whole setup takes a couple of minutes and doesn’t require a single change to your codebase. You’re essentially turning a generic coding assistant into a specialized team member who already knows your project inside and out.

Moving Beyond Code to Automate Project Management

The benefits of MCP don’t stop at your editor. Think about connecting your AI to other essential tools like Jira and Slack. An MCP-powered workflow can start automating the administrative grunt work that slows development down.

Here’s another powerful scenario where an AI agent uses MCP to connect the dots between communication and project management:

- From Slack to Jira: A product manager outlines a new feature in a Slack channel. An AI agent, listening in via an MCP server, can be prompted to turn that conversation into a detailed Jira ticket, complete with a summary and action items.

- Automated Sprint Summaries: At the end of a sprint, you can ask the AI to write a summary. Its MCP client will grab the list of completed tickets from Jira and the related pull requests from GitHub, then blend it all into a clean report for the sprint review.

- Instant Status Updates: When a manager asks, “What’s the status of the payment gateway integration?” in Slack, the AI can check the Jira ticket and recent GitHub commits to give an accurate, up-to-the-minute answer on the spot.
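
The sprint-summary scenario above boils down to querying several servers and merging the results before the model sees them. The sketch below models each server as a plain function returning canned sample data; the function names, ticket IDs, and pull request numbers are invented for illustration, and real calls would go through an MCP client.

```python
from typing import Callable, Dict, List


# Stub "servers" returning canned sample data (not real tickets or PRs).
def jira_completed_tickets(sprint: str) -> List[str]:
    return ["PAY-101 Add payment gateway client", "PAY-102 Handle declined cards"]


def github_merged_pull_requests(sprint: str) -> List[str]:
    return ["#57 Payment gateway client", "#58 Declined card handling"]


SERVERS: Dict[str, Callable[[str], List[str]]] = {
    "jira": jira_completed_tickets,
    "github": github_merged_pull_requests,
}


def gather_sprint_context(sprint: str) -> str:
    """Query every registered server and merge the results into one text block."""
    sections = []
    for name, fetch in SERVERS.items():
        items = fetch(sprint)
        sections.append(f"## {name}\n" + "\n".join(f"- {item}" for item in items))
    return "\n\n".join(sections)


if __name__ == "__main__":
    print(gather_sprint_context("sprint-42"))
```

Adding Slack to the summary would mean registering one more entry in `SERVERS`; the gathering logic stays the same.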

These examples show how a single context layer gets rid of all that manual copy-pasting and context-switching between tools. For a wider look at how different tools fit into this picture, check out our guide on the best AI tools for developers. By implementing MCP, you build a connected ecosystem where your AI can reason across your entire toolchain, giving your team a serious productivity boost.

Improving Performance and Data Privacy with MCP

Whenever developers consider a new protocol, two questions always come up: Does it make things faster? And does it keep our data safe? With the Model Context Protocol (MCP), the answer to both is a resounding yes. The protocol was designed from the ground up to boost performance and lock down privacy.

Let’s start with performance. In the world of AI, performance is all about token efficiency. Large language models think in “tokens,” and every single token costs you time and money. Shoving irrelevant data into a model’s context window is like renting a whole library just to read a single paragraph—it’s slow, expensive, and usually gives you worse answers.

MCP gets around this by being an intelligent filter. Instead of just dumping entire codebases or chat logs into the prompt, an MCP server sends only the pre-processed, highly relevant snippets needed to answer a specific question.

Maximizing Token Efficiency

This focused approach to context delivery directly impacts your bottom line. By cutting out the fluff and wasted tokens, you not only get faster responses from the model but also watch your API bills shrink.

Think about a common task, with and without MCP:

- Without MCP: A developer needs to ask about a function. To be safe, they paste the whole 2,000-token file into the AI’s context window. The model now has to dig through all that noise to find what matters.

- With MCP: The developer asks the same question. The MCP client intelligently requests only the relevant function definition and its immediate dependencies. The server sends back maybe 250 tokens of pure, relevant code.

In this simple example, you got the same (or better) result using 87.5% fewer tokens. Imagine that efficiency boost scaled across an entire engineering team. It quickly turns a costly AI experiment into a seriously valuable asset.
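
The 87.5% figure follows directly from the two token counts in the example. A quick sketch of the calculation, with a hypothetical helper name:

```python
def token_savings(tokens_before: int, tokens_after: int) -> float:
    """Fraction of tokens saved by sending the smaller context."""
    if tokens_before <= 0:
        raise ValueError("tokens_before must be positive")
    return 1 - tokens_after / tokens_before


if __name__ == "__main__":
    # 2,000-token file versus a 250-token targeted snippet.
    print(f"{token_savings(2000, 250):.1%}")  # 87.5%
```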

Fortifying Data Privacy by Design

Performance is great, but for any real business, data security is a dealbreaker. This is where a platform like the Context Engineer MCP really shows off what the protocol can do. The entire system is built to keep your sensitive information firmly under your control.

The MCP server acts as a secure gatekeeper. Your data—whether it’s source code, project plans, or internal documents—remains within your infrastructure. The AI model receives only the precise slice of context it needs for a query without ever getting direct access to your databases or code repositories.

This architecture is a massive security upgrade. It prevents the accidental exposure of private data and makes sure your intellectual property is never uploaded or stored on some third-party server. Your AI gets the context it needs to be helpful, and your security team can sleep at night knowing the data never left the building.

The Model Context Protocol has been quickly adopted by major players for this very reason. AWS, for instance, sees it as the standard for creating secure links between AI and private enterprise data. Companies like Anthropic and OpenAI have also pointed to MCP’s role in building more modular and scalable AI systems. Because the protocol works with any model and any server, it’s become the missing piece for connecting powerful AI to real-world business data. Learn more about how AWS is leveraging MCP to bridge this exact gap.

The Future of AI Development is Context-Aware

The Model Context Protocol isn’t just another small step forward for developers; it’s a genuine shift in how we think about building intelligent software. For too long, we’ve treated our AI models and our data sources as two separate worlds, trying to connect them with a tangled mess of one-off, custom-built integrations. MCP finally tears down that old, clunky approach, opening the door to a truly connected and scalable AI ecosystem.

This standard moves us away from a jumble of disconnected AI tools and into a world where any model can securely and effortlessly plug into any data source. Think of it as the architectural leap that finally fixes the chronic integration headache that has slowed down so much progress. Imagine a development world where the friction of connecting your AI to a new tool is basically zero.

A Strategic Advantage for Tomorrow

Getting on board with the Model Context Protocol now is a smart, strategic move to future-proof your applications. As AI models get more and more powerful, their hunger for high-quality, real-time context is only going to increase. Teams that build on a standardized foundation will innovate faster, while everyone else gets left behind, buried in a mountain of technical debt from their custom code.

Adopting this standard unlocks things that used to be impractical, if not flat-out impossible. For example, developers can now build agents that can reason across multiple, totally different systems (like GitHub, Jira, and Slack) to carry out complex tasks all on their own. This is the real difference between a simple chatbot and a true digital teammate.

A platform like the Context Engineer MCP is the on-ramp to this future. It gives you a production-ready implementation of the protocol, so your team can start building context-aware features right away without having to create all the heavy-duty infrastructure from scratch.

This approach massively shortens development cycles. Instead of burning weeks fighting with APIs and authentication protocols, your engineers can get back to what they do best: creating value. Giving AI models the precise, reliable context they need, right when they need it, is the secret to cutting down on hallucinations, boosting accuracy, and ultimately, shipping better software much faster.

Your Call to Action

The road ahead for AI development is clear, and it’s paved with context. The fragmented, brute-force integration methods we’ve been using are simply not going to cut it anymore. For developers who want to build the next generation of truly intelligent applications, the time to start exploring the Model Context Protocol is right now.

When you adopt MCP, you’re not just tidying up your architecture—you’re placing yourself at the leading edge of a major evolution in software development. Start exploring how this protocol can fit into your workflow and begin building AI systems that aren’t just powerful, but are genuinely aware of the world they operate in.

Got Questions About MCP? We’ve Got Answers.

When a new standard like the Model Context Protocol (MCP) comes along, it’s natural to have questions. Let’s break down some of the most common ones developers ask about getting started, security, and how MCP stacks up against the methods you’re already using.

How Is MCP Different From a Standard API Integration?

Think of a standard API integration as a custom, point-to-point connection. It’s like soldering a specific wire between two components—it works perfectly for that one pair, but it’s a brittle solution. If you want to swap out the AI model or change your data source, you’re back to the drawing board, re-engineering the whole connection.

The Model Context Protocol, on the other hand, is more like creating a universal standard like USB-C. It completely decouples the AI model from the data source. This means any MCP-compliant model can connect to any MCP-compliant tool without you having to write new code for every single combination. It turns a messy M×N integration problem into a simple M+N setup.

Can I Use MCP with Any Large Language Model?

Yes, that’s the whole point. MCP is model-agnostic by design. It simply defines a standard for how context is packaged and requested, which any LLM, from GPT-4 to Claude 3, can then work with. The big AI labs have already gotten behind the standard, so you can expect broad compatibility with today’s best models.

The Context Engineer MCP platform makes this even easier by handling the connection details for you. Your team can swap models in and out as better ones are released, all without having to re-architect your data connections from scratch.

Does MCP Create Security Risks for My Private Data?

It’s actually the opposite. MCP was specifically designed to improve data security and privacy. When implemented correctly, your raw, sensitive data never has to leave your own infrastructure. The MCP server acts as a secure gatekeeper.

When an AI model requests information, the server fetches only the exact slice of data needed, processes it, and sends just that specific context to the model. The model never gets direct access to your entire database, codebase, or document library. This is a massive security upgrade compared to methods that require you to upload entire files or grant sweeping permissions to third-party services.

Ready to stop wrestling with integrations and start building truly context-aware AI? The Context Engineer MCP platform gives you a production-ready server that you can set up in minutes, giving your AI tools the precise context they need to shine.

Start building with better context today at contextengineering.ai