Retrieval Augmented Generation (RAG) architecture isn’t just a buzzword; it’s the backbone of many practical AI applications, and the market built around it is projected to grow from $1.24 billion in 2024 to $67.42 billion by 2034. This approach bridges the gap between a Large Language Model’s (LLM) generalized knowledge and the specific, timely information your application needs to deliver trustworthy results.

Think of it as the framework that transforms a generalist AI into a focused, on-demand expert, powered by your data.

Why RAG Architecture is a Game-Changer for AI

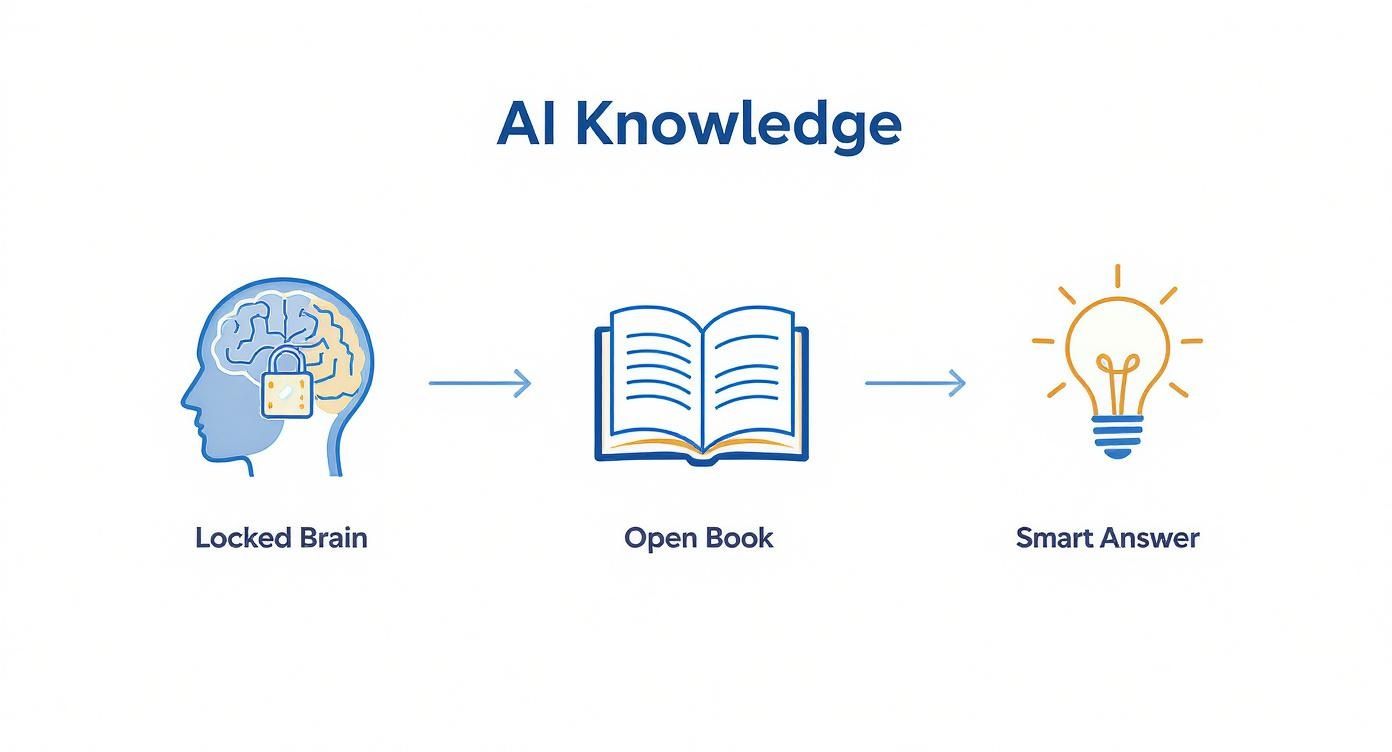

Let’s get straight to the point. A standard LLM operates like a brilliant student taking a closed-book exam. Its knowledge, while vast, is frozen at its training date. This creates two critical failures for real-world applications: outdated answers and a tendency to hallucinate—confidently inventing facts when faced with unknown topics. For any mission-critical system, that’s a non-starter.

In fact, some studies report that up to 20% of ungrounded LLM responses can be fabricated, a risk most businesses cannot afford.

The “Open-Book Exam” Analogy

This is where Retrieval Augmented Generation (RAG) fundamentally changes the dynamic. RAG gives that brilliant student an open-book exam, complete with a curated, up-to-the-minute library of reference materials.

Instead of just relying on its static memory, the LLM can first retrieve relevant facts from your specific data sources—your documentation, your codebase, your support tickets—before formulating an answer. This simple but profound shift allows it to provide responses that are not only accurate but also verifiable, as you can trace the information back to its source.

A RAG-powered system fundamentally connects a model’s reasoning capability to a dynamic, external source of truth. This connection is the bridge between a knowledgeable AI and a genuinely useful one.

Before we go further, it’s helpful to see a direct comparison of how these two approaches differ.

Comparing a Standard LLM with a RAG-Powered LLM

This table highlights the fundamental differences in how standard LLMs and RAG-powered LLMs handle information to generate responses.

| Attribute | Standard LLM | RAG-Powered LLM |
|---|---|---|
| Knowledge Source | Static, internal training data | Dynamic external data sources plus internal training data |
| Data Freshness | Outdated (frozen at training time) | As current as the indexed or queried sources |
| Accuracy | Prone to “hallucinations” on unknown topics | Higher fidelity, grounded in retrieved facts |
| Transparency | Opaque “black box” | Verifiable with citations to source documents |
| Customization | Requires expensive fine-tuning | Easily customized with new data sources |

As the table shows, RAG makes the model far more reliable and adaptable. However, just giving the LLM access to a library isn’t the whole story. Dumping a truckload of irrelevant documents on its desk would be just as confusing as giving it nothing. This is where the discipline of Context Engineering comes in.

The Role of the Expert Librarian

If RAG provides the library, Context Engineering is the skill of being the expert librarian. It’s the art and science of finding, refining, and organizing the exact information the LLM needs to answer a specific question.

An expert librarian doesn’t just wave you toward a general section; they pull the right book off the shelf and point you to the precise page. In the same way, great context engineering ensures the information fed to the LLM is:

- Relevant: Directly addresses the user’s question without extra fluff.
- Concise: Provides just enough detail to be helpful, which also saves on token costs.
- Accurate: Pulled from trusted, current sources to minimize misinformation.

Mastering this is no longer a niche skill for AI developers—it’s the core competency for building high-performance, trustworthy AI systems. To see this in action, it’s worth exploring how emerging Content Intelligence Platforms are already helping organizations make sense of their vast data repositories. You can also see hard numbers on the measurable impact of context engineering on AI to understand how this approach delivers real results.

How a RAG System Actually Works

So, what’s really going on inside a RAG system? To get a real feel for it, you have to pop the hood and look at the engine. Think of it as a smart, three-step process for generating answers that are actually grounded in facts. Each step hands off to the next, turning a simple question into a rich, well-supported response.

This diagram gives you a great high-level view, showing how RAG unlocks a company’s internal knowledge and turns it into smart, useful answers.

As you can see, it’s all about moving from siloed, inaccessible data to an open, searchable format that an AI can use to come up with something genuinely insightful. Let’s walk through each stage.

Stage 1: The Indexing Process

Before a RAG system can answer a single question, it has to do its homework. This initial stage is called indexing, and the goal is to build a searchable knowledge library out of your data. It’s like creating a super-detailed digital index for a massive library, one a machine can read in an instant.

Here’s how that breaks down:

- Loading Data: First, the system pulls in your raw data. This can be anything: text files, PDFs, Confluence pages, or even your entire codebase.
- Chunking Data: An LLM can’t swallow a whole textbook at once; it has a limited attention span (the “context window”). So, the system strategically breaks the data down into smaller, digestible pieces called chunks. Focused chunks also make retrieval more precise, because each one can be matched against a query on its own. Getting this right is a huge part of good context engineering. Better chunks mean better answers.
- Embedding Chunks: Each chunk is then run through an embedding model that converts the text into a fixed-length list of numbers, known as a vector embedding. This vector is a mathematical fingerprint that captures the semantic meaning of the text, not just its keywords.
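As a rough illustration of the chunking step, here is a minimal Python sketch that splits text into fixed-size word windows with a small overlap, so text near a boundary shows up in both neighboring chunks. The function name and default sizes are illustrative assumptions; real pipelines often split on tokens, sentences, or document structure rather than raw words.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into chunks of up to `chunk_size` words, where each chunk
    repeats the last `overlap` words of the previous one so text near a
    boundary appears in both neighboring chunks."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be at least 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far each new chunk's start moves forward
    chunks: list[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reached the end of the text
    return chunks
```

For example, `chunk_text("0 1 2 3 4 5 6 7 8 9", chunk_size=4, overlap=1)` returns `["0 1 2 3", "3 4 5 6", "6 7 8 9"]`. Larger overlaps preserve more cross-boundary context at the cost of storing more duplicated text.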

All these vectors get stored in a special kind of database called a vector store. Getting this part right is critical. If you want to go deeper, check out our guide on how to set up a vector store for context engineering.
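To make the embed-and-store idea concrete, the sketch below pairs a hypothetical `toy_embed` function with a tiny in-memory store. `toy_embed` only hashes words into buckets, so it captures shared words rather than true semantic meaning; it stands in for a real embedding model, and `InMemoryVectorStore` stands in for a real vector database.

```python
import hashlib
import math

DIM = 64  # toy size; real embedding models produce hundreds or thousands of dimensions


def toy_embed(text: str, dim: int = DIM) -> list[float]:
    """HYPOTHETICAL stand-in for an embedding model.

    Hashes each lowercase word into one of `dim` buckets and L2-normalizes the
    counts. This captures shared words, not semantic meaning.
    """
    vec = [0.0] * dim
    for word in text.lower().split():
        bucket = int(hashlib.md5(word.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


class InMemoryVectorStore:
    """Minimal stand-in for a vector database: a list of (id, text, vector) records."""

    def __init__(self) -> None:
        self.records: list[tuple[str, str, list[float]]] = []

    def add(self, chunk_id: str, text: str) -> None:
        # Embed once at indexing time and keep the original text for the prompt later.
        self.records.append((chunk_id, text, toy_embed(text)))

    def __len__(self) -> int:
        return len(self.records)
```

Keeping the original text next to each vector matters: the vector is only used to find the chunk, while the text is what eventually goes into the LLM prompt.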

Stage 2: The Retrieval Phase

With the knowledge library built and indexed, the system is ready for action. This phase fires up the second a user asks a question. This is where the RAG system acts like a lightning-fast research assistant, zipping through the library to find the most relevant snippets to help answer the user’s query.

Here’s the play-by-play:

- First, the user’s question gets the same treatment as the data chunks: it’s converted into its own vector embedding using the same model. This ensures the question and the data are speaking the same mathematical language.
- Then, the system performs a similarity search. It compares the question’s vector against the stored vectors, looking for the closest matches. At scale, this is usually done with an approximate nearest-neighbor (ANN) index rather than by checking every vector one by one.

The concept here is pretty straightforward: if a chunk’s vector is mathematically close to the question’s vector, it’s a good bet that chunk contains relevant information.

This whole retrieval process is incredibly fast, often sifting through millions of documents in just milliseconds. What comes out is a ranked list of the most relevant data chunks, ready to be passed on to the final stage. This is where a high-quality retrieval augmented generation architecture really makes a difference—it makes sure only the best information gets sent to the LLM.
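The similarity search can be sketched as a brute-force cosine comparison in Python. This is an illustrative simplification, not how a production vector store works internally: at scale, stores typically rely on approximate nearest-neighbor indexes to avoid scoring every vector. The vectors in the usage example are made-up two-dimensional values.

```python
import heapq
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 means same direction, 0.0 means unrelated (orthogonal)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query_vec: list[float], index: dict[str, list[float]], k: int = 3) -> list[tuple[str, float]]:
    """Brute-force search: score every stored vector against the query and
    return the k best (chunk_id, score) pairs, highest score first."""
    scored = ((chunk_id, cosine(query_vec, vec)) for chunk_id, vec in index.items())
    return heapq.nlargest(k, scored, key=lambda pair: pair[1])
```

For example, with `index = {"a": [1.0, 0.0], "b": [0.7, 0.7], "c": [0.0, 1.0]}`, calling `top_k([1.0, 0.1], index, k=2)` ranks `"a"` first and `"b"` second.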

Stage 3: The Generation Step

Finally, we get to the generation step. This is where the LLM gets its moment to shine. It takes the original question and the handful of relevant chunks we just found and weaves them together into a coherent, natural-sounding answer.

Without this retrieved context, the LLM would just be guessing based on its old, generic training data.

Instead, the system builds a brand new, supercharged prompt for the LLM that includes:

- The original user question.
- The top-ranked, relevant data chunks from the vector store.

This detailed prompt gives the LLM everything it needs. Its job is no longer to remember facts but to synthesize an answer based only on the fresh context it was just given. This is the key to drastically reducing hallucinations and making sure the final answer is actually backed by your source data. The result is an accurate, context-aware answer that truly addresses what the user asked, often with citations pointing right back to the original documents.
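Here is one way the prompt assembly could look in Python. The instruction wording, the `[n]` citation format, and the `source`/`text` keys on each chunk are assumptions for illustration; teams typically tune these to their model and use case.

```python
def build_prompt(question: str, chunks: list[dict[str, str]]) -> str:
    """Assemble a grounded prompt from the user question and retrieved chunks.

    Each chunk is assumed to be a dict with "source" and "text" keys. Chunks are
    numbered so the model can cite them, and the instruction tells the model to
    stay within the supplied sources.
    """
    numbered = "\n\n".join(
        f"[{i}] (source: {chunk['source']})\n{chunk['text']}"
        for i, chunk in enumerate(chunks, start=1)
    )
    return (
        "Answer the question using only the sources below. "
        "Cite sources by their [number]. "
        "If the sources do not contain the answer, say you don't know.\n\n"
        f"Sources:\n{numbered}\n\n"
        f"Question: {question}\nAnswer:"
    )
```

Labeling each chunk with its source is what makes the citations in the final answer traceable back to the original documents.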

The Art and Science of Context Engineering

A basic RAG setup is like a powerful engine—a great start, but useless without a skilled driver and a solid navigation system. That’s where Context Engineering comes in. Think of it as the art and science of meticulously shaping the information you feed to a Large Language Model (LLM), ensuring it gets the perfect fuel to generate exceptional answers.

Going beyond a simple RAG blueprint means adopting smarter ways to find information. A single retrieval method, whether plain keyword matching or vector similarity alone, often isn’t enough. To truly understand what a user is asking, you need a more sophisticated approach to unearth the best information buried in your data.

Refining Retrieval with Advanced Techniques

One of the most powerful methods is Hybrid Search. This technique blends the strengths of two distinct retrieval methods to get the best of both worlds.

- Keyword Search (like BM25): This is your classic, go-to search for matching specific terms, acronyms, or product codes. It’s incredibly fast and precise when the exact words matter.
- Semantic Search (Vector Search): This method is all about understanding the meaning behind a query. It can find conceptually similar information even if the keywords don’t match at all.

When you combine them, your system becomes much smarter. A query for “RAG token limits” can find documents with that exact phrase and also surface related content about “context window optimization” or “LLM input constraints.” This dual approach gives your retrieval relevance a serious boost.
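A minimal sketch of hybrid search in Python follows. It implements Okapi BM25 keyword scoring directly and merges it with a semantic ranking using reciprocal rank fusion (RRF), which is one common fusion method; the article does not prescribe a particular one. In the usage note, the semantic ranking is hard-coded as a stand-in for real vector search results, and the sample documents are made up.

```python
import math
from collections import Counter


def bm25_scores(query: str, docs: dict[str, str], k1: float = 1.5, b: float = 0.75) -> dict[str, float]:
    """Okapi BM25 keyword score of every document for `query`.

    Uses lowercase whitespace tokenization; real systems use proper analyzers.
    """
    if not docs:
        return {}
    tokens = {doc_id: text.lower().split() for doc_id, text in docs.items()}
    n_docs = len(tokens)
    avg_len = sum(len(t) for t in tokens.values()) / n_docs or 1.0
    doc_freq = Counter(term for toks in tokens.values() for term in set(toks))
    query_terms = set(query.lower().split())
    scores: dict[str, float] = {}
    for doc_id, toks in tokens.items():
        tf = Counter(toks)
        score = 0.0
        for term in query_terms:
            if term not in tf:
                continue
            df = doc_freq[term]
            idf = math.log(1 + (n_docs - df + 0.5) / (df + 0.5))  # rarer terms weigh more
            norm = k1 * (1 - b + b * len(toks) / avg_len)  # length normalization
            score += idf * tf[term] * (k1 + 1) / (tf[term] + norm)
        scores[doc_id] = score
    return scores


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked lists: each document earns 1 / (k + rank) from every list it appears in."""
    fused: Counter = Counter()
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            fused[doc_id] += 1.0 / (k + rank)
    return [doc_id for doc_id, _ in fused.most_common()]
```

For example, with `docs = {"t1": "PROJ-123 fix login timeout", "t2": "backend refactoring plan", "t3": "hiring meeting notes"}`, BM25 for the query `"PROJ-123"` scores only `t1` above zero. Fusing that keyword ranking `["t1"]` with a semantic ranking of `["t2", "t1", "t3"]` yields `["t1", "t2", "t3"]`: the exact match wins because both methods agree on it. RRF uses only rank positions, so it avoids having to calibrate BM25 scores against cosine similarities.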

Another crucial layer to add is a reranker. Think of it as a final quality check. After your initial search pulls a list of potentially relevant documents, a reranker model takes a closer look. It re-evaluates and re-orders the documents based on the original query, pushing the most valuable snippets to the top and weeding out near-misses. This step is essential for cutting down the noise before anything gets sent to the LLM.
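The reranking stage can be sketched as a function that re-scores each candidate against the query and drops weak matches. In practice the scoring function is usually a cross-encoder model; the `overlap_score` helper here is a hypothetical toy stand-in so the example runs without any model.

```python
from typing import Callable


def rerank(query: str, candidates: list[str], score_fn: Callable[[str, str], float],
           top_n: int = 3, min_score: float = 0.0) -> list[str]:
    """Re-score each (query, candidate) pair, drop near-misses scoring at or
    below `min_score`, and keep the `top_n` best candidates, best first."""
    scored = [(score_fn(query, c), c) for c in candidates]
    kept = [pair for pair in scored if pair[0] > min_score]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in kept[:top_n]]


def overlap_score(query: str, passage: str) -> float:
    """HYPOTHETICAL stand-in for a cross-encoder: fraction of query words found in the passage."""
    q = set(query.lower().split())
    p = set(passage.lower().split())
    return len(q & p) / len(q) if q else 0.0
```

For instance, `rerank("reset api key", ["how to reset your api key", "api rate limits", "team lunch menu"], overlap_score, top_n=2)` keeps the first two passages in that order and drops the unrelated third. Passing the scorer in as a function keeps the pipeline unchanged when you swap the toy scorer for a real model.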

Optimizing the Context Before Generation

Finding the right data is only half the battle. What you do with it next is just as important. Simply mashing raw chunks of documents together is sloppy, inefficient, and can easily overwhelm the model’s context window. This leads to poor performance and unnecessarily high costs.

This is where the principles of Context Engineering really make a difference. The goal is to distill all that retrieved information into a concise, potent package for the LLM. Some common techniques include:

- Summarization: For long, dense documents, a smaller model can create a quick summary, pulling out only the key points needed to answer the user’s question.
- Noise Filtering: You can actively strip out redundant phrases, boilerplate text, or irrelevant sections that just add tokens without adding any real value.
- Structuring Data: Sometimes, it’s helpful to convert messy, unstructured text into a clean format like JSON or a markdown table. This can make it much easier for the LLM to process and reason about the information.
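Noise filtering and context budgeting can be combined into a single packing step, sketched below in Python. The boilerplate pattern, the line-level deduplication, and the word-count token estimate are all simplifying assumptions (real tokenizers count differently). Summarization and structuring would be separate steps, typically involving a model call.

```python
import re

# Illustrative boilerplate patterns; a real list would be tuned to your sources.
BOILERPLATE = re.compile(r"^(copyright|all rights reserved|page \d+|click here)", re.IGNORECASE)


def compress_context(chunks: list[str], max_tokens: int = 300) -> str:
    """Drop boilerplate and duplicate lines, then pack chunks (assumed to be in
    relevance order already) until a rough token budget is reached."""
    seen: set[str] = set()
    packed: list[str] = []
    used = 0
    for chunk in chunks:
        lines = []
        for line in chunk.splitlines():
            norm = " ".join(line.split()).lower()  # normalize whitespace and case
            if not norm or BOILERPLATE.match(norm) or norm in seen:
                continue
            seen.add(norm)
            lines.append(line.strip())
        if not lines:
            continue  # nothing useful left in this chunk
        text = "\n".join(lines)
        cost = len(text.split())  # rough proxy for tokens
        if used + cost > max_tokens:
            break  # stop rather than skip, so higher-ranked chunks keep priority
        packed.append(text)
        used += cost
    return "\n\n".join(packed)
```

Given `["Install with pip.\nCopyright 2024 Acme", "Install with pip.\nRun the server."]`, the function returns `"Install with pip.\n\nRun the server."`: the copyright line and the repeated install line are removed before anything is spent on tokens.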

It’s important to note this is different from just writing a good prompt. To see a full breakdown, check out our comparison of Context Engineering vs. Prompt Engineering. Context engineering is about perfecting the data you send, while prompt engineering is about perfecting the instruction.

The Case for a Dedicated Middleware Layer

As you start adding these advanced retrieval and optimization layers, a new problem pops up: where do you put all this code? If you stuff it directly into your main application, you end up with a tangled, monolithic mess that’s a nightmare to maintain, update, and scale.

This is precisely why a dedicated middleware layer is a game-changer for any serious retrieval augmented generation architecture.

A Context Engineering MCP (Model Context Protocol) server acts as a centralized, intelligent hub for everything related to context. It neatly decouples the messy complexity of data retrieval and context shaping from your core application logic, giving you a clean separation of concerns.

The table below highlights the practical differences between building everything into one application and using a dedicated server.

Different Approaches to RAG Implementation

| Aspect | Monolithic RAG Implementation | Decoupled with Context Engineering MCP |
|---|---|---|
| Complexity | High. Retrieval, optimization, and business logic are tangled together. | Low. Application logic is separate from context management. |
| Maintenance | Difficult. A change in retrieval strategy requires an app redeployment. | Easy. Update context logic on the server without touching the client app. |
| Scalability | Challenging. The entire application must be scaled together. | Flexible. Scale the context server and application independently. |
| Reusability | Limited. RAG logic is tied to one application. | High. Multiple apps or agents can share the same context server. |
| Security | Complex. Data access rules are mixed with application code. | Centralized. Enforce privacy and access rules in one secure place. |

Ultimately, the decoupled approach just makes more sense as your system grows. Your application can focus on what it does best, while the MCP server handles the heavy lifting of preparing the perfect context.

Using a dedicated server, like the one from ContextEngineering.ai, brings several powerful advantages. It can manage connections to all your different data sources—vector databases, SQL databases, APIs—and orchestrate complex retrieval chains. More importantly, it gives you a central control point for enforcing privacy rules, maintaining conversational state, and ensuring continuity across user interactions. It’s the professional way to build robust, scalable, and secure RAG systems.
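To show what this decoupling means in code, here is a minimal Python sketch in which the application depends only on a small `ContextProvider` interface. `ContextProvider`, `ContextPacket`, and `KeywordContextService` are hypothetical names; this is not a real protocol or the ContextEngineering.ai API, just an illustration of the separation of concerns.

```python
from dataclasses import dataclass, field
from typing import Protocol


@dataclass
class ContextPacket:
    """What the context layer hands back to the application."""
    chunks: list[str]
    sources: list[str] = field(default_factory=list)


class ContextProvider(Protocol):
    """The only thing the application knows about context management."""
    def get_context(self, query: str, user_role: str) -> ContextPacket: ...


class KeywordContextService:
    """HYPOTHETICAL stand-in for a separate context server. Its retrieval
    strategy can change without redeploying the application."""

    def __init__(self, docs: dict[str, str]) -> None:
        self.docs = docs

    def get_context(self, query: str, user_role: str) -> ContextPacket:
        # Role-based filtering would happen here; omitted to keep the sketch short.
        terms = set(query.lower().split())
        hits = [(src, text) for src, text in self.docs.items()
                if terms & set(text.lower().split())]
        return ContextPacket(chunks=[t for _, t in hits], sources=[s for s, _ in hits])


def answer(question: str, provider: ContextProvider, user_role: str = "engineer") -> str:
    """Application code: asks the provider for context, then builds the LLM input."""
    packet = provider.get_context(question, user_role)
    context = "\n".join(packet.chunks) or "(no context found)"
    return f"Context:\n{context}\n\nQuestion: {question}"
```

Because `answer` only depends on the `ContextProvider` interface, you could replace `KeywordContextService` with a client for a remote context server without touching application code.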

Real-World RAG Workflows for Developers

Theory is great for a blueprint, but seeing how things work in the real world is where the rubber really meets the road. Let’s step away from the diagrams and walk through how a retrieval augmented generation architecture actually comes together for two very different developer use cases.

This journey will shine a light on the constant tug-of-war between cost, speed, and accuracy that every developer wrestles with when building with AI. You’ll also see how thinking about your architecture upfront can make everything that follows so much smoother.

Scenario 1: The Indie Developer’s Documentation Bot

First up is Alex, an indie dev with a popular open-source library. The goal is straightforward: build a chatbot to answer questions about the documentation. This would hopefully cut down on all the repetitive issues being filed on GitHub. Alex needs something lean, cheap, and easy to keep running.

The Workflow:

- Data Source Selection: Alex keeps it simple. The knowledge base is just a collection of Markdown files from the project’s `/docs` folder, plus the important parts of the `README.md`. The data is clean, well-structured, and laser-focused on one topic.
- Indexing and Embedding: To keep the budget at zero, Alex opts for a free, open-source sentence-transformer model to create the embeddings. A quick Python script handles the job, running just once during deployment. It chops up the Markdown files and stores the resulting vectors in a local ChromaDB instance, a lightweight choice that suits a small project.
- Retrieval and Generation: The chatbot’s backend is a no-frills server. A user asks a question, the server embeds it, pulls the top three most relevant text chunks from ChromaDB, and shoots them over to a budget-friendly LLM API.
- The Trade-Off: This setup is incredibly cheap and fast to get off the ground. But there’s a catch. Every time the documentation gets an update, Alex has to remember to manually re-run the indexing script. On top of that, all the logic for fetching, formatting context, and calling the LLM is tangled up in the main chatbot code.

This monolithic design is fine for a single-purpose tool, but it’s a classic case of building up technical debt. What if Alex wanted to build another agent to help with code generation? They’d have to copy and paste the entire RAG pipeline and start tweaking it all over again.
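Returning to the indexing step in Alex’s workflow, splitting Markdown at its headings is one simple way to chunk documentation so each chunk is a self-contained section. The sketch below is an assumption about how such a script might look, not a description of the scenario’s actual code. It keeps the file name and section title as metadata for citations, and it does not handle `#` lines inside fenced code blocks.

```python
import re

HEADING = re.compile(r"^#{1,6}\s+(.*)$")  # ATX-style headings: "# Title" through "###### Title"


def chunk_markdown(text: str, source: str) -> list[dict[str, str]]:
    """Split a Markdown document into one chunk per heading-delimited section.

    Limitation: a "#" comment line inside a fenced code block is mistaken for a heading.
    """
    sections: list[tuple[str, list[str]]] = [("", [])]  # text before the first heading
    for line in text.splitlines():
        match = HEADING.match(line)
        if match:
            sections.append((match.group(1).strip(), []))
        else:
            sections[-1][1].append(line)
    chunks = []
    for title, lines in sections:
        body = "\n".join(lines).strip()
        if body:  # skip headings with no content under them
            chunks.append({"source": source, "section": title, "text": body})
    return chunks
```

For a file containing `Intro text`, a `# Install` section, and a `## Usage` section, this yields three chunks whose `section` values are `""`, `"Install"`, and `"Usage"`.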

Scenario 2: The Startup’s Internal Knowledge Assistant

Now, let’s switch gears to a growing startup’s engineering team. They need a heavy-duty internal assistant that can pull answers from multiple, ever-changing sources to tackle complex questions. For them, reliability, security, and the ability to scale are non-negotiable.

The assistant needs to tap into:

- Confluence: For all the project specs and meeting notes.
- Jira: To check ticket statuses and read developer comments.
- A Private GitHub Repo: To access the very latest code.

A More Sophisticated Workflow

This situation calls for a more professional approach. Trying to bake all those data connections, retrieval rules, and security checks into every single AI agent would quickly become a nightmare to manage. The team wisely decides to go with a decoupled architecture, using a Context Engineering MCP server as the backbone.

The Decoupled Workflow:

- Centralized Data Management: The team points the Context Engineering server at their data sources. The server takes care of the messy details: handling authentication, syncing data periodically, and making sure everything is up-to-date. This completely abstracts the data plumbing away from the developers who are actually building the assistant.
- Advanced Retrieval: Inside the server, they configure a hybrid search strategy. This gives them the best of both worlds. The system can find a specific Jira ticket like `PROJ-123` (keyword search) just as easily as it can understand a fuzzy, conceptual query about “backend refactoring plans” (semantic search).
- Secure Context Delivery: When a developer uses the assistant, their query hits the application, which in turn asks the MCP server for context. The server fetches the relevant info, applies access controls (so a junior dev can’t accidentally pull up sensitive HR docs), and assembles a tight, optimized context packet. This secure bundle of information is then sent back to the assistant.
- Intelligent Generation: The assistant takes the user’s original question and forwards it, along with the clean, verified context from the server, to the LLM. And because the server is also handling conversational history, the assistant can maintain long, stateful conversations without the app itself having to juggle memory.

This approach completely changes the game for the development team. They’re no longer getting bogged down building data connectors or fine-tuning retrieval logic. Instead, they can pour all their energy into creating a fantastic user experience, confident that the heavy lifting of context engineering is being managed by a dedicated, secure, and reusable service. That’s the core idea behind a modern, scalable retrieval augmented generation architecture.
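The access-control step in the startup’s workflow can be illustrated with a small Python filter that runs before any context reaches the LLM. The `Chunk` type and its `allowed_roles` field are hypothetical; a real deployment would pull permissions from the source systems (Confluence, Jira, GitHub) rather than hard-coding them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    text: str
    source: str                    # e.g. "confluence", "jira", "github"
    allowed_roles: frozenset[str]  # HYPOTHETICAL: roles permitted to see this chunk


def authorized_context(candidates: list[Chunk], user_roles: set[str], limit: int = 5) -> list[Chunk]:
    """Keep only chunks the user may see, *before* anything is sent to the LLM.

    Candidates are assumed to be in relevance order already, so the first
    `limit` visible chunks are the most relevant ones the user is allowed to read.
    """
    visible = [c for c in candidates if c.allowed_roles & user_roles]
    return visible[:limit]
```

Filtering after retrieval but before prompt assembly means a restricted document can never leak into an answer, even if it happens to be the closest semantic match.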

Getting Your RAG System Ready for the Big Leagues

Taking a Retrieval Augmented Generation (RAG) system from a cool proof-of-concept to a reliable, enterprise-wide tool is a whole different ball game. When you’re talking about enterprise scale, the conversation shifts. Suddenly, it’s all about scalability, rock-solid data security, and navigating a minefield of compliance rules. The choices you make here will stick with you for a long time.

Before you go all-in, it’s a good idea to brush up on the fundamental principles of scalable software architecture. This grounding will help you navigate one of the first major decisions you’ll face: where is this thing going to live?

Cloud vs. On-Premises: Picking Your Home Turf

Deciding between the cloud and your own servers isn’t just about hardware—it’s a strategic move that defines how you’ll manage your entire enterprise RAG setup.

- Going with the Cloud: This route gives you incredible flexibility. Need more power? Just spin up more resources. Cloud providers offer a ton of managed services that take the headache out of maintenance and keep initial costs down. It’s perfect for teams that need to move fast and handle fluctuating demand without buying a room full of servers.
- Keeping it On-Premises: This is all about control. For industries like finance, healthcare, or government, keeping sensitive data inside your own four walls isn’t just a preference; it’s often a legal requirement. When data sovereignty and strict regulations are in play, on-prem is often the only real choice.

The market reflects this reality. Right now, cloud setups are the most popular because they’re cost-effective and easy to scale. But industry forecasts expect the on-prem segment to grow quickly as more companies put security and compliance first. And while large enterprises currently lead in adoption, smaller, more agile businesses are expected to drive much of the future growth. You can dig deeper into these industry trends and forecasts for RAG adoption.

Building a System That Protects Your Data

No matter where you deploy, a well-designed retrieval augmented generation architecture is one of your strongest defenses for protecting privacy. The trick is to create clear separations between the different parts of your system.

By decoupling context management from your application and the LLM, you create a critical checkpoint where security policies can be rigorously applied.

This is where a dedicated middleware layer, like a Context Engineering MCP server, really shines. It acts as a security guard, making sure every piece of data passes a strict inspection before it ever gets sent to a third-party model.

This kind of setup lets you:

- Mask Sensitive Data: Automatically find and scrub personally identifiable information (PII), such as names, Social Security numbers, or financial details, from the context.
- Enforce Access Controls: Make sure users only see the data they’re actually supposed to see, based on their role and permissions.
- Create Audit Trails: Keep a detailed log of every query and every piece of retrieved information for compliance and security reviews.

By putting all these security functions in one place, you can tap into the power of third-party LLMs with far less risk. Your sensitive company and customer data gets processed and sanitized within your own secure perimeter, so only the clean, safe context ever leaves your control. It’s a hybrid approach that offers the best of both worlds: the raw power of massive AI models and the control of keeping sensitive processing inside your own infrastructure.
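A minimal Python sketch of two of these safeguards follows: regex-based masking and a JSON audit record. The two patterns (email addresses and US Social Security numbers in `123-45-6789` form) are illustrative assumptions only. Regexes alone miss many kinds of PII, such as names, and production systems usually add dedicated detection tooling.

```python
import json
import re
import time

# Illustrative patterns only; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def mask_pii(text: str) -> str:
    """Replace every match of each pattern with a placeholder like [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def audit_record(user: str, query: str, sources: list[str]) -> str:
    """One JSON log line per request; the query is masked so the log itself holds no PII."""
    return json.dumps({
        "ts": time.time(),
        "user": user,
        "query": mask_pii(query),
        "sources": sources,
    })
```

For example, `mask_pii("Contact jane.doe@example.com, SSN 123-45-6789")` returns `"Contact [EMAIL], SSN [SSN]"`. Masking the query before it is logged keeps the audit trail from becoming a second copy of the sensitive data.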

Where Context-Aware AI is Headed

Retrieval Augmented Generation isn’t just another technique—it’s a fundamental shift in how we build intelligent, dependable AI. The future isn’t about training ever-larger models that just memorize more data. It’s about creating systems that can reason and act on information that’s fresh, relevant, and verifiable.

This shift is opening up a whole new world of context-aware applications. We’re already seeing the next wave of innovation build on the core ideas of RAG.

Agentic and Multi-Modal RAG: The Next Frontier

Two big trends are really starting to stand out:

- Agentic RAG: This is a huge leap from simple question-and-answer bots. With agentic RAG, systems can tackle multi-step tasks, think through complex problems, and even use other tools and APIs to get a job done. Think of an AI that doesn’t just answer a question, but completes a workflow.
- Multi-modal RAG: Why stop at text? The next generation of RAG systems can pull in and understand information from images, audio clips, and even video. This gives the AI a much richer, more complete picture of the world, just like we have.

These advancements are fueling an explosion of new uses. We’re seeing everything from smarter document search and summarization to recommendation engines that really get you, and automated customer support that doesn’t feel robotic.

As RAG architectures get better, they’re helping AI deliver more accurate, relevant answers in fields like healthcare, finance, and retail. This isn’t just a niche trend; it’s a core technology for the future, a point driven home by this market analysis from Grand View Research.

As AI becomes more woven into how we work, grounding it in verifiable, real-world data isn’t just a nice-to-have—it’s a necessity.

Getting a handle on RAG architecture and context engineering is no longer just a technical edge. It’s now a crucial skill for anyone looking to build the next generation of genuinely helpful AI, and the foundation of the fast-growing context-aware economy.

Got Questions? We’ve Got Answers

We’ve covered a lot of ground, from RAG architecture to the nitty-gritty of context engineering. Let’s tackle some of the most common questions that pop up when developers start building with this tech.

What’s the Toughest Nut to Crack When Building a RAG System?

Hands down, the hardest part is getting the retrieval step right. It’s one thing to pull some information from your data store, but it’s a completely different challenge to consistently pull the perfect piece of context for any given query. This is where the real work lies—fine-tuning your chunking strategy, picking the right embedding model, and perfecting your search logic.

If your RAG system isn’t performing well, poor retrieval is usually the first place to look. Feeding the LLM noisy or irrelevant context is a direct path to bad answers and hallucinations. This is precisely why a disciplined approach to RAG architecture and context engineering is non-negotiable.

How Does Context Engineering Actually Help with Data Privacy?

It gives you a dedicated control point over your data flow. When you manage context through a separate middleware layer, you can enforce specific rules to filter, mask, or even completely remove sensitive information before it ever touches an external LLM.

Think of it this way: your confidential customer data or internal company secrets never have to leave your secure perimeter. You get all the power of a cloud-based LLM without compromising on strict privacy and compliance requirements.

Can RAG Tap into Live Data Sources, Like an API?

Absolutely. This is where RAG really starts to shine. Instead of just querying a static vector database, you can design your retriever to make a live API call. Imagine it fetching real-time stock prices or checking the current inventory levels from your e-commerce platform.

A dedicated Context Engineering server is perfect for this kind of setup. It acts as an orchestrator, managing connections to all your different data sources—vector stores, databases, and live APIs—all from one central place. It turns a potentially chaotic process into a manageable, coherent workflow.
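As a rough sketch of that orchestration role, the Python below queries several registered sources, labels each result with its origin, and keeps going if one live source fails. `static_docs` and `live_inventory` are hypothetical stand-ins with made-up sample data; a real `live_inventory` would make an HTTP request to your inventory service.

```python
from typing import Callable

Retriever = Callable[[str], list[str]]


def static_docs(query: str) -> list[str]:
    # HYPOTHETICAL stand-in for a vector-store lookup; the text is sample data.
    return ["Returns are accepted within 30 days."] if "return" in query.lower() else []


def live_inventory(query: str) -> list[str]:
    # HYPOTHETICAL stand-in for a live API call; the stock figure is made up.
    stock = {"widget": 42}
    return [f"Live stock for {item}: {qty} units" for item, qty in stock.items() if item in query.lower()]


def orchestrate(query: str, retrievers: dict[str, Retriever]) -> list[str]:
    """Query every registered source and label each result with where it came from."""
    results: list[str] = []
    for name, retrieve in retrievers.items():
        try:
            results.extend(f"[{name}] {text}" for text in retrieve(query))
        except Exception as exc:  # a failing live source should not sink the whole answer
            results.append(f"[{name}] unavailable: {exc}")
    return results
```

For example, `orchestrate("Is the widget in stock?", {"docs": static_docs, "inventory": live_inventory})` returns `["[inventory] Live stock for widget: 42 units"]`. Labeling results by source lets the LLM, and the user, tell fresh API data apart from indexed documents.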

Ready to build more reliable AI applications with less effort? Context Engineering provides the dedicated server you need to manage context, ensure privacy, and reduce hallucinations. Learn more and get started at https://contextengineering.ai.