In 2024, mastering AI prompt engineering isn’t just a technical skill; it’s a core business competency. It’s the art of conversing with a super-intelligent but very literal assistant. Moving from a vague question to a detailed, context-rich instruction can increase the value of an AI’s output by over 200%. It’s not just about what you ask; it’s about how you ask. This skill transforms a simple AI tool into a powerful partner for creative, analytical, and technical tasks.

What Is AI Prompt Engineering, Really?

Imagine trying to explain a complex task to a new team member. You wouldn’t just give them a one-line command. You’d provide background, examples, and define what a successful outcome looks like. That’s a perfect analogy for AI prompt engineering. You’re learning to communicate with a powerful mind in the language it understands best.

This goes way beyond asking a simple question. You’re essentially building a creative brief for an incredibly smart but clueless collaborator. The quality of your input directly shapes the quality of your output. In fact, a carefully crafted prompt can boost the relevance of an AI’s response by over 50%.

From Simple Questions to Strategic Instructions

At its heart, prompt engineering is about leaving one-line commands behind. Instead of just saying, “Write about marketing,” a skilled prompt engineer writes a far richer set of instructions.

This strategic approach blends several key elements:

- Context: Give the AI the background information it needs to understand the “why” behind your request.

- Role-Playing: Tell the AI who to be. For example, “Act as a seasoned financial analyst reviewing a quarterly report.”

- Formatting: Specify exactly how you want the output structured—a table, bullet points, or even JSON.

- Examples: Show, don’t just tell. Include a few examples of your desired output to guide the AI’s tone and style.
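As a rough illustration, the four elements above can be assembled programmatically. The sketch below uses a hypothetical helper, `build_prompt` (not part of any library), that simply joins a role, context, optional examples, the task, and an output format into one prompt string you could paste into any chat interface. The context text is a placeholder.

```python
def build_prompt(role, context, task, output_format, examples=None):
    """Assemble a structured prompt from its parts (hypothetical helper, not a library API)."""
    sections = [
        f"Act as {role}.",         # role-playing
        f"Context: {context}",     # background the model cannot guess on its own
    ]
    # Optional few-shot examples, numbered and placed before the real request.
    for i, example in enumerate(examples or [], start=1):
        sections.append(f"Example {i}: {example}")
    sections.append(f"Task: {task}")
    sections.append(f"Format: {output_format}")  # how the output should be structured
    return "\n\n".join(sections)


prompt = build_prompt(
    role="a seasoned financial analyst reviewing a quarterly report",
    # Placeholder context; replace with your own project details.
    context="The report covers Q3 revenue, costs, and headcount for a mid-size software company.",
    task="Identify the three most important risks for the leadership team.",
    output_format="A bulleted list with one sentence per risk.",
)
print(prompt)
```

Keeping each element in its own labeled section makes it easy to see at a glance which piece is missing when an output goes wrong.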

Think of it like this: A basic prompt is like asking a chef to “make some food.” An engineered prompt is like handing them a detailed recipe, a list of specific ingredients, and a photo of the finished dish. You know which one will get you a better meal.

Why Prompting Is Suddenly So Important

The demand for people with this skill has skyrocketed. Some forecasts project the prompt engineering market to grow from $0.85 billion in 2024 to over $32 billion by 2032, reflecting its critical role in business. As more companies weave AI into their daily work, the ability to talk to these systems effectively is a massive competitive edge.

This is especially true in technical fields like software development. Instead of getting generic, often buggy code snippets, developers can use detailed prompts to generate code that fits their project’s unique architecture. By providing context about their existing codebase, they get far more accurate and helpful results.

The next frontier is automating this context-gathering process with a Master Context Prompt (MCP), so the AI always has the right information without anyone needing to feed it manually. Platforms like Context Engineer are pioneering this approach, ensuring code generation is always informed by the complete project scope. Mastering AI prompt engineering is the first, most crucial step to unlocking that next level of productivity.

Why Better Prompts Drive Business Growth

In business, communicating clearly with AI isn’t just a neat trick—it’s a serious competitive advantage. When you get good at it, the difference is night and day.

Structured prompt engineering cuts through the noise and ambiguity, which means your innovation cycles get faster. Teams aren’t stuck going back and forth with the AI, trying to get the right output. They spend less time on frustrating revisions and more time actually getting work done.

Tangible Returns from Structured Prompting

Well-crafted prompts aren’t just about saving a few minutes here and there; they deliver measurable value that you can see in every department.

For instance, some studies report that teams who master prompting see a 340% higher ROI than teams winging it with basic queries. Operational costs can also drop by up to 30% on repetitive tasks that can be automated away.

And when it comes to data analysis, better prompts lead to more accurate and consistent results with far fewer errors.

“Prompt engineering transforms AI tools into reliable partners, boosting efficiency and cutting rework.”

Suddenly, you’re not just saving money—you’re freeing up resources to pour back into strategic growth initiatives. It also turns out that one of the best ways to reduce those frustrating AI “hallucinations” is to simply give the model better, context-rich instructions.

Platforms like the Context Engineer MCP are built for this, ensuring the AI always has the right project context on hand. This kind of continuity saves teams an average of 2 hours per day that used to be lost to context-switching.

Here’s where it gets practical:

- Marketing Automation: Generate personalized campaigns that drive a 20% uplift in engagement.

- Operational Efficiency: Automate tedious report summaries, freeing up analysts to find deeper insights.

- Product Development: Use prompts to quickly prototype feature descriptions and test user flows.

Empower Teams with Context Protocols

It’s a bit shocking, but Gartner attributes 78% of AI project failures to poor communication between people and AI. This is where solid prompt engineering becomes your lifeline. As mentioned, successful teams can see roughly 340% higher ROI than those stuck with ad-hoc prompting.

The market has noticed, too. Since 2023, LinkedIn job postings mentioning prompt engineering have shot up by 434%. Professionals with these certified skills are commanding salaries about 27% higher than their peers, which tells you just how valuable this expertise has become. You can read the full research on prompt engineering’s impact over on CMSWire.

For software development teams, detailed prompts are a game-changer for streamlining code generation and integration. You can learn more about this in our guide on AI-powered software development.

When teams work together using a shared context platform, they cut down on the overhead of getting everyone (and the AI) on the same page. This keeps the output consistent, whether they’re iterating on marketing copy, analyzing data, or developing a new prototype. By spending less time on manual fixes, teams can get products launched faster and jump on market opportunities before they disappear.

Common Growth Drivers with AI Prompt Engineering

Businesses that get this right see real improvements in customer engagement, operational efficiency, and product innovation.

Here are a few key growth drivers unlocked by a focused prompt strategy:

- Tailored Marketing Content: Precise prompts generate targeted copy that can increase conversion rates by 25%.

- Scalable Data Insights: Automated data summaries help teams make decisions faster, cutting research time by 40%.

- Rapid Prototyping: Clear AI instructions can produce detailed mockups for user testing within hours, not weeks.

Each one of these shows how prompt engineering isn’t just a technical skill—it’s a catalyst for continuous improvement. Investing in training your team on this pays off again and again.

“Companies that master prompt engineering report higher innovation velocity and better alignment with strategic goals.”

This creative “conversation” with AI is what fuels faster experimentation. Your teams can test dozens of concepts in the time it used to take to draft a single outline. It also makes sophisticated AI tools accessible to non-technical staff, which sparks fresh ideas and gets different departments talking to each other.

Getting Started with Better Prompts

So, where do you begin? Start small. Define a clear objective every time you interact with an AI. What, exactly, do you want it to do?

From there, document common formats and the preferred tone of voice to keep everything consistent. Run a few small pilot projects to refine your prompts and measure the return on your effort. Once you find a formula that works, scale it across the organization.

By embedding prompt engineering into your daily workflows, you unlock AI’s full potential and set your business up for long-term success.

- Monitor AI outputs using key metrics like accuracy, relevance, and speed.

- Train your teams with hands-on workshops and create shared prompt libraries so everyone can learn from what works.
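A shared prompt library can start as little more than a dictionary of named, documented templates that everyone fills in the same way. The sketch below is one hypothetical layout using a standard-library dataclass and `str.format` placeholders; the template names, the house-style sentence, and the wording are examples, not a recommended set.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptTemplate:
    """A reusable prompt with its purpose documented for the whole team."""
    name: str
    purpose: str
    text: str  # uses str.format placeholders such as {audience}

    def fill(self, **values):
        return self.text.format(**values)


# Shared defaults so every template follows the same tone and length conventions.
HOUSE_STYLE = "Use a friendly, plain-spoken tone and keep it under 200 words."

LIBRARY = {
    t.name: t
    for t in [
        PromptTemplate(
            name="report-summary",
            purpose="Summarize a long report for executives.",
            text="Summarize the following report in 3 bullet points for {audience}. "
            + HOUSE_STYLE + "\n\n{report}",
        ),
        PromptTemplate(
            name="feature-blurb",
            purpose="Describe a new feature for customers.",
            text="Write a short announcement of the {feature} feature for {audience}. " + HOUSE_STYLE,
        ),
    ]
}

print(LIBRARY["feature-blurb"].fill(feature="analytics dashboard", audience="non-technical users"))
```

Storing the `purpose` next to each template gives newcomers a quick way to find the right starting point instead of writing prompts from scratch.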

This isn’t a fleeting trend. Adopting solid prompt engineering practices now is how you secure a competitive edge and drive real, sustainable growth.

The Core Techniques: Getting Started with Effective Prompting

Getting great results from a Large Language Model (LLM) isn’t about finding some secret “magic word.” It’s about learning a few foundational principles that turn a vague thought into a crystal-clear instruction. Think of it as a practical toolkit—these core techniques will immediately level up the quality of your AI conversations.

I like to think of an AI as an incredibly talented but very literal intern. If you give them a five-second, ambiguous task, you can’t be surprised when you get a mediocre result. But if you provide clear direction, relevant context, and a few good examples, they can produce outstanding work. The same exact logic applies to prompt engineering.

Start with Role-Playing for Focus

One of the simplest yet most powerful techniques is to give the AI a role. This instantly narrows the model’s focus, helping it adopt the right tone, vocabulary, and perspective for the job. Instead of a generic, one-size-fits-all answer, you get a response from an “expert.”

A generic prompt might look like this: “Explain the benefits of a new software feature.” It’s okay, but it’s not great.

Now, let’s try it with role-playing:

“Act as a seasoned marketing manager. Your goal is to write three bullet points for a customer newsletter. Explain the benefits of our new analytics dashboard feature, focusing on how it saves time and improves decision-making for non-technical users.”

See the difference? This small change provides critical direction. You’ve gone from just asking a question to delegating a professional task, and the output will be far more targeted and useful.
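Many chat-style APIs accept a list of messages, each with a `role` and `content` field, and let you put the persona in a `system` message. The sketch below assumes that common format and only builds the data structure; it does not call any particular provider, and the exact field names your SDK expects may differ. `role_play_messages` is a hypothetical helper.

```python
import json


def role_play_messages(persona, request):
    """Return a chat transcript that sets the persona before the user's request."""
    return [
        # The system message fixes who the model should "be" for the whole exchange.
        {"role": "system", "content": f"Act as {persona}."},
        {"role": "user", "content": request},
    ]


messages = role_play_messages(
    persona="a seasoned marketing manager",
    request=(
        "Write three bullet points for a customer newsletter explaining the benefits "
        "of our new analytics dashboard feature, focusing on how it saves time and "
        "improves decision-making for non-technical users."
    ),
)
print(json.dumps(messages, indent=2))
```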

Provide Clear Context and Constraints

LLMs don’t have your project’s background knowledge. Without it, they have to guess, which often leads to bland or irrelevant answers. Providing context is like giving the AI a map before you ask it for directions.

Here are some key things to include:

- Audience: Who is this for? (“Write this for an audience of software developers,” or “This is for a non-technical executive summary.”)

- Goal: What are you trying to achieve? (“The goal is to persuade a potential customer,” or “I need to simplify this complex topic.”)

- Constraints: Are there any guardrails? (“Do not use technical jargon,” or “Keep the entire response under 100 words.”)

When you define the boundaries, you guide the AI toward the exact solution you need. This saves you a ton of time on edits later.
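Constraints are also easy to check after the fact. The hypothetical `check_constraints` function below tests a response against two common guardrails, a word limit and a list of banned terms, so violations can be caught before anyone edits by hand. It uses a rough whitespace-based word count and is a quick filter, not a substitute for reading the output.

```python
import re


def check_constraints(text, max_words, banned_terms=()):
    """Return a list of constraint violations; an empty list means the text passed."""
    problems = []
    word_count = len(text.split())  # rough whitespace-based word count
    if word_count > max_words:
        problems.append(f"too long: {word_count} words (limit {max_words})")
    for term in banned_terms:
        # Whole-word, case-insensitive match for each banned term.
        if re.search(rf"\b{re.escape(term)}\b", text, flags=re.IGNORECASE):
            problems.append(f"uses banned term: {term!r}")
    return problems


draft = "Blockchain is a shared, decentralized record that many computers keep together."
print(check_constraints(draft, max_words=75, banned_terms=["decentralized", "ledger"]))
```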

Use Few-Shot Prompting with Examples

Sometimes, the best way to explain what you want is to just show it. That’s the core idea behind few-shot prompting. You give the AI a few examples of the input-output format you’re looking for before making your actual request. It’s a remarkably effective way to get a specific structure or style.

Let’s say you’re trying to generate product descriptions.

A weak prompt: “Write a product description for ‘Eco-Mug’.”

A strong few-shot prompt:

“Here are some examples of our brand’s product descriptions:

Example 1: Product: Solar Charger. Description: Power up on the go with our pocket-sized Solar Charger, your reliable partner for outdoor adventures.

Example 2: Product: Smart Water Bottle. Description: Stay hydrated effortlessly with the Smart Water Bottle, tracking your intake with a gentle glow.

Now, following this pattern, write a product description for ‘Eco-Mug’.”

The AI immediately picks up on the pattern from your examples, making sure the new output matches your brand’s voice perfectly.
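Few-shot prompts follow a repeatable shape, so they are easy to generate from a small list of approved examples. The sketch below is one way to do it, assuming your examples are stored as (product, description) pairs; `few_shot_prompt` is a hypothetical helper, and the example text is taken from the prompt above.

```python
def few_shot_prompt(examples, new_product):
    """Build a few-shot prompt: labeled examples first, then the real request."""
    lines = ["Here are some examples of our brand's product descriptions:", ""]
    for i, (product, description) in enumerate(examples, start=1):
        lines.append(f"Example {i}: Product: {product}. Description: {description}")
    lines += ["", f"Now, following this pattern, write a product description for '{new_product}'."]
    return "\n".join(lines)


examples = [
    ("Solar Charger", "Power up on the go with our pocket-sized Solar Charger, "
                      "your reliable partner for outdoor adventures."),
    ("Smart Water Bottle", "Stay hydrated effortlessly with the Smart Water Bottle, "
                           "tracking your intake with a gentle glow."),
]
print(few_shot_prompt(examples, "Eco-Mug"))
```

Keeping the examples in a list rather than inside the prompt text means the whole team reuses the same approved voice samples.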

Guide Reasoning with Chain-of-Thought

For more complex problems that require logical steps, Chain-of-Thought (CoT) prompting is a game-changer. Instead of just asking for the final answer, you tell the AI to “think step-by-step” or to explain its reasoning. This simple instruction encourages the model to break the problem down into smaller, more manageable pieces, which dramatically improves its accuracy on logical and mathematical tasks.

Studies have shown that this technique can boost performance on complex reasoning tasks by a huge margin. It forces the AI to “show its work,” which also makes it much easier for you to spot and correct any logical missteps.
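In practice, a chain-of-thought prompt often pairs the “think step-by-step” instruction with a fixed marker for the final answer, so a reviewer can read the reasoning while a script pulls out the result. The sketch below assumes the model follows an instruction to end with a line starting `Answer:`; that convention is our own, not a standard, and the sample response is hand-written rather than real model output.

```python
def cot_prompt(question):
    """Wrap a question with a step-by-step instruction and an answer marker."""
    return (
        f"{question}\n\n"
        "Think step-by-step and show your reasoning. "
        "End with a final line of the form 'Answer: <result>'."
    )


def extract_answer(response):
    """Return the text after the last 'Answer:' line, or None if it is missing."""
    for line in reversed(response.strip().splitlines()):
        if line.strip().startswith("Answer:"):
            return line.strip()[len("Answer:"):].strip()
    return None


# A hand-written example of a response in the expected shape (not real model output).
sample = """A dozen mugs cost 12 x $8 = $96.
The 10% bulk discount removes $9.60.
Answer: $86.40"""
print(cot_prompt("A customer buys 12 mugs at $8 each with a 10% bulk discount. What is the total?"))
print(extract_answer(sample))  # $86.40
```

If `extract_answer` returns `None`, the model ignored the format, which is itself a useful signal to retry or revise the prompt.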

A quick look at the table below shows just how much these simple principles can improve your results.

How Minor Prompt Changes Create Major Impact

This table demonstrates how applying core prompting principles transforms a vague request into a precise instruction, leading to a significantly better AI output.

| Principle | Vague Prompt (Before) | Effective Prompt (After) |
|---|---|---|
| Role-Playing | “Write about marketing.” | “Act as a digital marketing strategist and create a 3-step plan to increase website traffic for a small coffee shop.” |
| Context | “Summarize this article.” | “Summarize this article for a busy CEO. The summary should be 3 bullet points, focusing only on financial implications.” |
| Few-Shot Prompting | “Generate a tagline.” | “Example 1: Nike - Just Do It. Example 2: Apple - Think Different. Now, generate a short, inspiring tagline for a new fitness app.” |
| Constraints | “Explain blockchain.” | “Explain blockchain to a 10-year-old in less than 75 words. Do not use the words ‘decentralized’ or ‘ledger’.” |

As you can see, the “after” prompts aren’t just longer—they’re smarter. They provide the guardrails the AI needs to deliver exactly what you’re looking for on the first try.

For a deeper dive into these and other methods, feel free to explore our comprehensive guides to advanced LLM techniques.

Mastering these foundational skills is the first step toward building more sophisticated AI workflows. It’s about shifting your mindset from giving one-off commands to having a structured dialogue.

This is where the rubber meets the road. Prompt engineering isn’t a theoretical exercise; its real value lies in solving actual business problems. When you learn to craft precise instructions, you turn a generalist AI into a specialist tool that creates real value.

Think about it. For a marketing team, this means whipping up dozens of ad copy variations for A/B testing in minutes, not days. For a financial analyst, it’s about taking a dense, 50-page report and boiling it down to a three-bullet summary for an executive. In every case, it’s about efficiency and getting better results, faster.

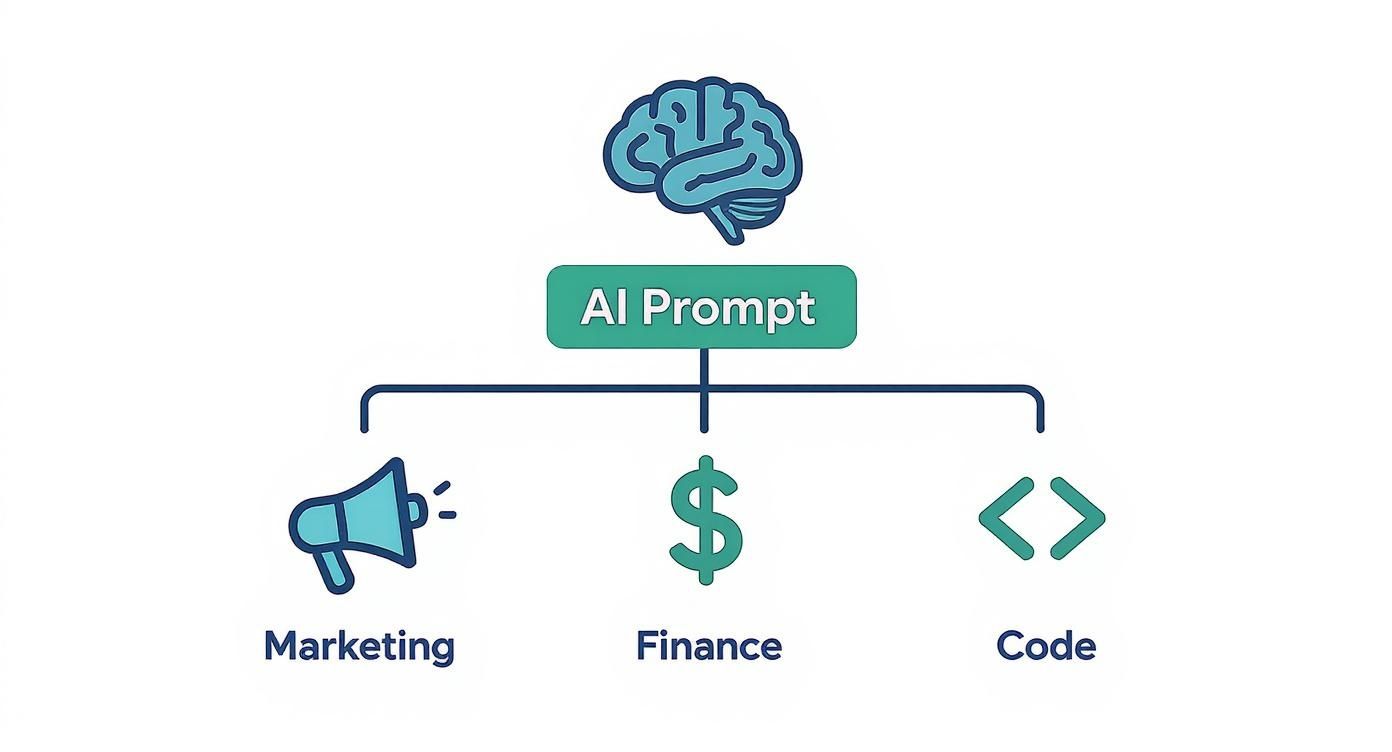

Driving Value in Marketing and Sales

In marketing and sales, the constant challenge is creating personal connections at a massive scale. Prompt engineering makes that possible. A well-constructed prompt can generate email campaigns tailored to specific customer groups, which can seriously move the needle on open rates and conversions.

For example, a rookie might ask an AI to “Write a promotional email.” An experienced marketer knows better. They’ll give a much more detailed instruction:

“Act as an expert email copywriter. Our audience is busy small business owners. Write a 150-word email for our new project management tool. The core message is that it saves them 10 hours a week. Keep the tone friendly and encouraging, and end with a clear call-to-action to start a free trial.”

See the difference? That level of detail gets you an output that’s already aligned with your campaign goals. It drastically cuts down on editing time and ensures your brand voice stays consistent.
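Because a detailed prompt like this is mostly fixed text with a few variables, it can be turned into a reusable template and filled in many times, which is one way to produce the ad copy variations for A/B testing mentioned earlier. The sketch below uses Python’s standard `string.Template`; the field names and the alternative audience and tone are illustrative assumptions.

```python
from itertools import product
from string import Template

EMAIL_PROMPT = Template(
    "Act as an expert email copywriter. Our audience is $audience. "
    "Write a $words-word email for our new project management tool. "
    "The core message is that $message. Keep the tone $tone, "
    "and end with a clear call-to-action to $cta."
)

audiences = ["busy small business owners", "freelance designers"]
tones = ["friendly and encouraging", "direct and data-driven"]

# One prompt per (audience, tone) combination, ready for A/B testing.
variants = [
    EMAIL_PROMPT.substitute(
        audience=audience,
        words=150,
        message="it saves them 10 hours a week",
        tone=tone,
        cta="start a free trial",
    )
    for audience, tone in product(audiences, tones)
]
print(len(variants))  # 4
print(variants[0])
```

The first variant reproduces the prompt above word for word; the others change only the audience or tone, so differences in results can be traced to a single variable.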

Streamlining Finance and Analytics

The world of finance is built on data, but raw numbers don’t tell a story. Prompt engineering is the bridge between raw data and clear, actionable insights. An analyst can command an AI to sift through thousands of market data points to spot trends, flag risks, and identify opportunities, all presented in a clean table or a quick summary.

This is a game-changer for tasks like:

- Report Summarization: Turning long financial docs into need-to-know insights.

- Risk Assessment: Running preliminary risk analyses based on a set of rules.

- Market Trend Analysis: Spotting patterns in stock movements or economic data.

Ultimately, this lets financial pros spend less time buried in spreadsheets and more time on high-level strategy.
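When an AI’s output feeds a spreadsheet or dashboard, it helps to ask for machine-readable output and validate it before use. The sketch below assumes you have asked the model to reply with a JSON object containing `summary`, `risks`, and `trends` keys (our own illustrative schema) and checks that the reply matches; the reply string is hand-written for the example, not real model output.

```python
import json

# Illustrative schema: the keys and types we asked the model to return.
REQUIRED_KEYS = {"summary": str, "risks": list, "trends": list}

PROMPT = (
    "Summarize the attached quarterly report for an executive. "
    "Reply with only a JSON object with the keys "
    '"summary" (a string), "risks" (a list of strings), and "trends" (a list of strings).'
)


def parse_report(raw):
    """Parse the model's reply and check that it has the expected keys and types."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on invalid JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for key, expected_type in REQUIRED_KEYS.items():
        if not isinstance(data.get(key), expected_type):
            raise ValueError(f"missing or malformed field: {key}")
    return data


# A hand-written reply in the requested shape (not real model output).
reply = (
    '{"summary": "Revenue grew while margins narrowed.", '
    '"risks": ["Rising cloud costs"], "trends": ["Enterprise demand up"]}'
)
report = parse_report(reply)
print(report["risks"])  # ['Rising cloud costs']
```

Rejecting malformed replies early keeps a single bad response from silently corrupting a downstream report.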

Accelerating Software Development

For developers, prompt engineering has become a massive productivity booster. You can use it to generate boilerplate code, write unit tests, or even get a plain-English explanation of a tricky algorithm. This is especially true in AI-native coding environments where giving the right context is everything.

A developer might prompt the AI with something like this:

“Using Python and Flask, write a function that takes a user ID and pulls the user’s profile from a PostgreSQL database. Make sure to include error handling in case the user isn’t found.”
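For a sense of what that prompt is asking for, here is a hand-written sketch of the database part. To keep it self-contained it uses Python’s built-in `sqlite3` as a stand-in for PostgreSQL and leaves out the Flask route; the `users` table, its columns, and `UserNotFoundError` are hypothetical. With a PostgreSQL driver such as psycopg, the query placeholder would be `%s` rather than `?`.

```python
import sqlite3


class UserNotFoundError(LookupError):
    """Raised when no user matches the requested ID."""


def get_user_profile(conn, user_id):
    """Fetch one user's profile as a dict, or raise UserNotFoundError."""
    row = conn.execute(
        "SELECT id, name, email FROM users WHERE id = ?",  # parameterized to avoid SQL injection
        (user_id,),
    ).fetchone()
    if row is None:
        raise UserNotFoundError(f"user {user_id} not found")
    return {"id": row[0], "name": row[1], "email": row[2]}


# In-memory database with one sample row, just for demonstration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")
conn.execute("INSERT INTO users VALUES (1, 'Ada', 'ada@example.com')")
print(get_user_profile(conn, 1))
```

In a Flask view, you would typically catch `UserNotFoundError` and return a 404 response, which is the “error handling” the prompt asks for.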

When you get this right, the AI stops being a simple tool and starts feeling like a coding partner. This is where platforms like the Context Engineer MCP really shine, by automatically feeding the AI the project context it needs. The code it generates is far more likely to fit right into the existing architecture, turning a good idea into production-ready code in a fraction of the time.

The global prompt engineering market is booming, driven by AI adoption in sectors from healthcare and banking to retail and logistics. This rapid growth suggests that prompt engineering is becoming a core skill for anyone looking to get the most out of AI in the real world. You can find more details on the prompt engineering market growth from Grand View Research.

Moving Beyond Prompts to Context Engineering

Getting good at writing a single, perfect prompt is a crucial skill, but it only gets you so far. The moment you need to scale AI across a whole team or company, that one-off approach starts to fall apart. This is where the craft evolves from basic AI prompt engineering into a more robust discipline called Context Engineering.

The big idea here is to stop having isolated, one-and-done conversations with an AI. Instead, you build a persistent, shared brain that informs every interaction. Think about it: rather than manually pasting your brand guidelines, technical specs, or key data into every single prompt, a system built on these principles gives the AI that background knowledge automatically.

The Trouble with Standalone Prompts

A great prompt can solve a specific problem, but it exists in a bubble. The next person who comes along—or even you, on a different day—has to start all over again. This creates some serious headaches when you’re trying to get real work done at scale.

You’ll quickly run into challenges like:

- Inconsistent Outputs: Different team members get wildly different results simply because they’re feeding the AI different bits of context.

- Wasted Time: So much time gets burned repeating the same background information in every prompt. In fact, some studies show this kind of rework can eat up 20% of a developer’s day.

- Risk of Errors: Every time someone manually enters context, there’s a chance for human error. That can lead to off-brand content or buggy code.

At its core, the problem is a lack of memory. Each new prompt is a clean slate, forcing the AI to relearn the rules of your project every single time. This is why a more systematic approach isn’t just a nice-to-have; it’s a necessity.

This infographic shows just how much AI depends on the right context across different parts of a business.

Whether it’s for marketing, finance, or software development, the AI is only as good as the foundational information it’s given.

Building a Centralized “System Brain”

Context Engineering tackles this problem head-on by creating a centralized “System Brain.” This acts as the single source of truth for the AI. Often, this takes the form of a Master Context Prompt (MCP), which is basically a detailed document holding all the essential, unchanging information about a project, product, or company.

An MCP might include things like:

- Brand Identity: Your specific voice, tone, style guides, and customer personas.

- Technical Architecture: Core frameworks, coding standards, and database schemas.

- Strategic Goals: Key business objectives, project milestones, and KPIs.

- Product Details: Feature lists, user stories, and how you stack up against competitors.

By plugging this MCP into their AI tools, teams can finally get outputs that are consistently on-brand, technically sound, and aligned with their goals. It’s the difference between telling an employee to complete a single task versus handing them the entire company handbook. To go deeper, you can learn about the fundamentals of Context Engineering in our dedicated article.
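Mechanically, the simplest version of this idea is to keep the shared context in one place and prepend it to every task automatically, instead of pasting it by hand. The sketch below is a hypothetical, minimal illustration of that pattern (it is not how any particular product works internally); the section names mirror the list above and the contents are placeholders.

```python
# Placeholder contents; in practice these would come from your real guidelines and specs.
MASTER_CONTEXT = {
    "Brand Identity": "Friendly, plain-spoken voice; audience is small business owners.",
    "Technical Architecture": "Python, Flask, PostgreSQL; follow PEP 8.",
    "Strategic Goals": "Increase free-trial sign-ups this quarter.",
    "Product Details": "Project management tool with task boards and time tracking.",
}


def render_context(context):
    """Render the shared context as labeled sections."""
    return "\n".join(f"## {name}\n{text}" for name, text in context.items())


def with_context(task, context=MASTER_CONTEXT):
    """Prepend the shared context to a task so every prompt starts from the same facts."""
    return f"{render_context(context)}\n\n## Task\n{task}"


print(with_context("Write a release note for the new time-tracking feature."))
```

Because every prompt passes through `with_context`, updating one section updates what the AI sees for every task, which is the consistency benefit described above.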

The True Path to AI Maturity

Making this shift is what separates casual AI dabblers from organizations that are truly getting value from AI at an enterprise level. It slashes rework, stamps out inconsistencies, and gives your team the confidence to take on much bigger, more complex problems.

Ultimately, while AI prompt engineering is the art of asking the right question, Context Engineering is the science of building a system that already knows most of the answers. It’s a proactive approach that turns your AI from a simple command-taker into a fully-briefed, knowledgeable partner in your workflow.

The Future Of Prompt And Context Engineering

Prompt and context engineering is more than a passing phase; it’s reshaping how we work and communicate with machines. As AI models grow more capable, the art of directing them with clarity will shift from a specialist’s tool to a basic skill for all knowledge workers.

We’re already seeing this change in marketing teams refining campaign messaging, product managers automating feature sprints, and legal departments drafting contracts. Each group relies on carefully crafted prompts to streamline its workload.

Economic trends back this shift, though forecasts vary widely. Market analysts expect prompt engineering to grow from $0.85 billion in 2024 to somewhere between $3.48 billion and $32.78 billion over the next decade, and some estimates even foresee it becoming a trillion-dollar segment. For a deeper dive, check out Market Research Future.

The real opportunity lies beyond crafting better one-off prompts. It’s about designing lasting frameworks for human–AI collaboration—systems that remember, adapt, and evolve.

The Rise Of Specialized AI Agents

Think of specialized AI agents like dedicated teammates. One agent handles customer inquiries end-to-end. Another translates product specs into tested code. A third monitors social media sentiment and flags emerging trends.

These agents need more than isolated commands. They depend on a steady flow of structured information. Here’s how advanced context management supports them:

- Master Context Prompt (MCP): Acts as the agent’s “System Brain,” storing project goals and style guides

- Continuous Updates: Feeds real-time data, from user feedback to bug reports

- Role-Based Filters: Ensures each agent focuses on its specific domain

With these components in place, agents make decisions that align with broader business objectives—without constant human checks.
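A role-based filter can be as simple as tagging each piece of context with the agents allowed to see it. The sketch below is a hypothetical illustration of that idea; the agent names, tags, and context entries are made up for the example.

```python
# Each context entry lists the agent roles that should receive it (illustrative data).
CONTEXT_ITEMS = [
    {"text": "Refund policy: full refund within 30 days.", "roles": {"support"}},
    {"text": "Coding standard: all functions need unit tests.", "roles": {"coding"}},
    {"text": "Brand voice: friendly and plain-spoken.", "roles": {"support", "social"}},
    {"text": "Q3 goal: grow free-trial sign-ups.", "roles": {"support", "coding", "social"}},
]


def context_for(role, items=CONTEXT_ITEMS):
    """Return only the context entries tagged for the given agent role."""
    return [item["text"] for item in items if role in item["roles"]]


for agent in ("support", "coding", "social"):
    print(agent, "->", context_for(agent))
```

Shared goals reach every agent, while domain-specific rules stay with the agent that needs them, keeping each agent’s prompt short and focused.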

The Evolution Of Prompt Optimization Tools

Prompt libraries and templates are just the beginning. The next wave of tools will use AI to refine prompts automatically. Imagine a platform that:

- Tracks how different prompt variations perform

- Suggests edits based on success metrics

- Adjusts language style and tone on the fly

A UX designer might start with a basic instruction set but let the tool fine-tune phrasing for clarity and engagement. Over time, the system learns which prompts drive the best responses for each task.

The goal is to have AI feel like a natural collaborator rather than a complex piece of software.
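The core bookkeeping behind such a tool is straightforward: record whether each prompt variant produced an acceptable result and compare success rates. The sketch below is a minimal, hypothetical tracker; how you judge “success” (human rating, automated check, click-through) is up to you, and with small sample sizes the differences may just be noise.

```python
from collections import defaultdict


class PromptVariantTracker:
    """Record outcomes per prompt variant and compare success rates (hypothetical helper)."""

    def __init__(self):
        self.trials = defaultdict(int)
        self.successes = defaultdict(int)

    def record(self, variant, success):
        """Log one trial of a variant and whether its output was judged acceptable."""
        self.trials[variant] += 1
        self.successes[variant] += int(success)

    def success_rate(self, variant):
        trials = self.trials.get(variant, 0)
        return self.successes.get(variant, 0) / trials if trials else 0.0

    def best(self):
        """Return the variant with the highest observed success rate (ValueError if none recorded)."""
        return max(self.trials, key=self.success_rate)


tracker = PromptVariantTracker()
for outcome in (True, True, False):
    tracker.record("concise", outcome)
for outcome in (True, False, False):
    tracker.record("detailed", outcome)
print(tracker.best(), round(tracker.success_rate("concise"), 2))  # concise 0.67
```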

Architecting Human-AI Communication

A new role is emerging: the Context Architect. Part prompt engineer, part systems designer, part business strategist, these professionals build the pipelines of information flowing between teams and AI.

Their responsibilities include:

- Designing and updating shared knowledge bases

- Establishing version control for context prompts

- Defining ethical and quality guardrails

- Auditing AI outputs for consistency and accuracy
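Version control for context prompts can piggyback on existing tools like Git, but the underlying idea is simple: every change produces a new, identifiable version you can compare or roll back to. The sketch below is a hypothetical in-memory illustration using content hashes from Python’s `hashlib`; it is not a replacement for a real version-control system.

```python
import hashlib


class ContextHistory:
    """Keep every version of a context prompt, identified by a short content hash."""

    def __init__(self):
        self.versions = []  # list of (version_id, text), oldest first

    def commit(self, text):
        version_id = hashlib.sha256(text.encode("utf-8")).hexdigest()[:8]
        if self.versions and self.versions[-1][0] == version_id:
            return version_id  # unchanged text: no new version
        self.versions.append((version_id, text))
        return version_id

    def current(self):
        return self.versions[-1][1]

    def rollback(self, version_id):
        """Make an earlier version current again by re-committing its text."""
        for vid, text in self.versions:
            if vid == version_id:
                return self.commit(text)
        raise KeyError(version_id)


history = ContextHistory()
v1 = history.commit("Coding standard: PEP 8.")
v2 = history.commit("Coding standard: PEP 8, type hints required.")
history.rollback(v1)
print(history.current(), len(history.versions))  # Coding standard: PEP 8. 3
```

Rolling back appends a new entry rather than deleting history, so an audit can still see that the stricter standard was tried and reverted.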

For example, a software development team using a tool like the Context Engineer MCP effectively has a Context Architect built into their workflow, centralizing technical specifications so that AI-generated code is 40% more likely to integrate seamlessly on the first try. Investing in these skills and platforms now puts you at the forefront of a shift that’s only beginning.

Your Questions, Answered

Let’s tackle some of the most common questions that come up when people start digging into prompt engineering.

Is Prompt Engineering a Real, Long-Term Career?

Yes, absolutely. While the AI models we use today will undoubtedly evolve, the fundamental skill won’t disappear. Think of it this way: someone has to be the bridge between what a business wants to achieve and how the AI actually does it.

This role is about translating human intention into machine-readable instructions. As AI becomes more integrated into everything we do, the demand for people who can do this effectively is only going to increase. It’s a solid career path for the foreseeable future.

Can I Use These Techniques With Any AI Model?

You bet. The core principles we’ve talked about—giving clear context, being specific, assigning a role, and defining the output format—are universal.

These methods work whether you’re using models from OpenAI, Google, Anthropic, or others. While each model has its own little quirks and personality, mastering these fundamentals will make you better at prompting on any platform.

A great prompt isn’t tied to a single tool; it’s a way of thinking that unlocks the potential of any AI you work with.

What’s the Difference Between Prompt and Context Engineering?

This is a great question, and the distinction is important. Prompt engineering is all about crafting the perfect, single instruction to get a specific task done right now.

Context engineering, on the other hand, is a much bigger-picture strategy. It’s about building a persistent, reliable knowledge base that the AI can draw from for all its interactions. This ensures every response is consistent, accurate, and aligned with the project’s goals.

Here’s a simple way to think about it:

- Prompt Engineering is like writing one really clear, effective email.

- Context Engineering is like creating the entire company handbook that makes sure every email anyone sends is accurate and on-brand.

It’s the difference between a one-off request and a truly intelligent, scalable system.

Ready to move from single prompts to a fully integrated system? Context Engineering offers a Model Context Protocol (MCP) server that connects directly to your IDE, reducing hallucinations and ensuring your AI always has the complete project picture. Build complex software features faster and more reliably. Explore the future of AI-powered development at https://contextengineering.ai.