At its core, prompt engineering is the science of crafting precise instructions to get a desired output from an AI. Think of it as the difference between asking a talented chef to “make some food” versus giving them a detailed recipe. The latter is far more likely to produce the dish you actually had in mind. This discipline isn’t about complex coding; it’s about strategic communication with Large Language Models (LLMs).

Some industry benchmarks report that a well-engineered prompt can boost the accuracy and relevance of AI outputs by over 50%, although the size of the gain depends heavily on the task and the model. This skill is no longer a niche trick. It’s a fundamental competency for leveraging AI effectively, turning a powerful but unpredictable tool into a reliable, high-performance asset.

Unlocking AI’s $4.4 Trillion Potential with Better Instructions

Generative AI is projected to add up to $4.4 trillion annually to the global economy, according to a 2023 McKinsey estimate. Unlocking that value depends heavily on our ability to give these models clear, context-rich instructions. An LLM like GPT-4 is a powerful engine, but a prompt is the steering wheel, accelerator, and GPS all in one. Without a skilled driver, much of the engine’s power is wasted.

This is the exact problem prompt engineering solves.

A vague request like “write about sales” yields a generic, unusable blog post. In contrast, a well-engineered prompt (one that specifies the target audience, tone, format, and key data points) delivers content that is targeted, relevant, and immediately valuable. Some studies report that structured prompts can reduce “hallucinations” (confident but factually incorrect or fabricated outputs) by as much as 40%, a critical factor for professional use cases.

Why It’s More Than Just Asking Questions

Prompt engineering is a strategic discipline that combines logic, domain expertise, and creative communication. It requires understanding how an AI processes information and structuring your requests to align with its operational logic.

To achieve this, effective prompts must incorporate several key elements:

- Providing Rich Context: The AI needs the backstory. The more relevant data it has, the lower the chance of incorrect assumptions. Lack of context is one of the most common causes of poor AI performance.

- Defining a Specific Format: Need a JSON object for your application, a markdown table for a report, or a bulleted list for a presentation? Explicitly defining the output structure saves hours of manual reformatting.

- Assigning a Persona: Instructing the AI to act as a “cybersecurity expert with 20 years of experience” versus a “helpful IT assistant” can markedly change the depth, tone, and technical detail of its response.

Here’s a quick look at these core ideas to help you get started.

Prompt Engineering Core Concepts at a Glance

This table breaks down the fundamental ideas behind prompt engineering, giving you a snapshot of what goes into this essential skill.

| Concept | What It Really Means |
|---|---|
| Instruction | The direct command or task you want the AI to perform. Be clear and specific. |
| Context | The background information or data the AI needs to understand the request fully. |
| Persona | Assigning the AI a role or character (e.g., “Act as a helpful tutor”). |
| Format | Specifying the desired output structure (e.g., “in a bulleted list,” “as a JSON object”). |
| Examples (Few-Shot) | Providing examples of the input/output you want to guide the AI’s response. |

Understanding these components is your first step toward moving from basic questions to truly effective prompts.
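To see how the five components from the table fit together, here is a minimal Python sketch that assembles them into a single prompt string. The `PromptSpec` class, its field names, and the section labels ("Context:", "Task:") are hypothetical conventions chosen for illustration; no model or API is called, and LLMs do not require this particular layout.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class PromptSpec:
    """The five components from the table; field names are illustrative, not a standard."""
    instruction: str
    context: str = ""
    persona: str = ""
    output_format: str = ""
    examples: List[Tuple[str, str]] = field(default_factory=list)  # (input, output) pairs

    def render(self) -> str:
        """Join the non-empty components into one prompt, persona first."""
        parts = []
        if self.persona:
            parts.append(f"Act as {self.persona}.")
        if self.context:
            parts.append(f"Context:\n{self.context}")
        parts.append(f"Task:\n{self.instruction}")
        if self.output_format:
            parts.append(f"Output format:\n{self.output_format}")
        for example_input, example_output in self.examples:
            parts.append(f"Example input:\n{example_input}\nExample output:\n{example_output}")
        return "\n\n".join(parts)
```

Empty components are simply skipped, so the same class covers everything from a bare one-line instruction to a fully specified few-shot prompt.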

“What will always matter is the quality of ideas and the understanding of what you want.” - Sam Altman, CEO of OpenAI

Ultimately, mastering prompt engineering is what elevates an AI from a novelty into an indispensable professional tool. It bridges the gap between human intent and machine execution. As AI tasks grow more complex, related disciplines have emerged, such as context engineering, which focuses on systematically managing the large amounts of information needed for reliable, enterprise-grade AI applications.

How We Learned to Talk to Machines

It’s easy to forget that communicating with computers was once a one-way street defined by rigid syntax. For decades, the primary interface was the command line, where a single typo or misplaced character could make a command fail. In that world, humans were forced to learn the machine’s language.

This created a huge gap between what we wanted the machine to do and what we could actually tell it to do. We’ve been trying to bridge that gap ever since.

The ambition of conversing naturally with a computer isn’t new; it dates back to the dawn of AI research. In 1966, a program named ELIZA offered a glimpse of this future. It simulated a psychotherapist by using clever pattern-matching to rephrase a user’s statements as questions. While simplistic, ELIZA was a landmark achievement and one of the earliest demonstrations that typed text could steer a machine’s response. For a great look back at these early days, check out this historical overview of prompt engineering.
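ELIZA’s core trick, matching a pattern and echoing part of the user’s sentence back as a question, is simple enough to sketch. The toy below is only in the spirit of ELIZA, not Weizenbaum’s original script: the three rules and the pronoun-reflection table are invented for illustration, and the real program used ranked keywords with decomposition and reassembly rules.

```python
import re

# Three toy rules in the spirit of ELIZA's psychotherapist script (invented for illustration).
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.+)", re.IGNORECASE), "Tell me more about your {0}."),
]

# Swap first- and second-person words so the echoed fragment reads naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}


def reflect(fragment: str) -> str:
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(statement: str) -> str:
    text = statement.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return "Please, go on."  # fallback when no rule matches
```

For example, `respond("I am worried about my exams.")` returns "How long have you been worried about your exams?" with no understanding involved, which is exactly why ELIZA felt more capable than it was.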

From Commands to Conversations

The evolution from strict commands to natural language conversations represents a monumental shift in human-computer interaction. For so long, we adapted to the machine’s limitations. Now, with the advent of Large Language Models (LLMs), the machine is finally learning to understand us.

This breakthrough was not a sudden event. It is the culmination of decades of incremental progress in key areas:

- Natural Language Processing (NLP): The entire field dedicated to teaching computers how to process, understand, and generate human language.

- Machine Learning: This innovation allowed computers to learn from massive datasets instead of requiring humans to code rules for every possible scenario.

- Exponential Growth in Computing Power: The sheer increase in processing capabilities provided the necessary horsepower to train the colossal AI models we have today.

These factors converged to create models that can interpret context, nuance, and intent in ways that were once purely the domain of science fiction.

The Rise of Prompting as a Discipline

As AI models grew more sophisticated, a critical insight emerged: the way you ask for something dramatically impacts the quality of the answer.

Ask a model like GPT-4 a lazy question, and you’ll get a lazy, generic answer back. But if you frame your request with care—providing context, examples, and clear constraints—you can get something incredibly insightful and accurate.

This is the moment prompt engineering became a real skill. It’s the art and science of crafting your words to get the best possible result out of an AI.

Knowing this backstory is key. Prompt engineering isn’t just a passing fad or a list of “hacks” for a new app. It’s the next logical step in our long journey to make technology work for us. It’s a point in history where clear communication has become just as valuable as coding, and it’s exactly why learning to structure your prompts is now essential.

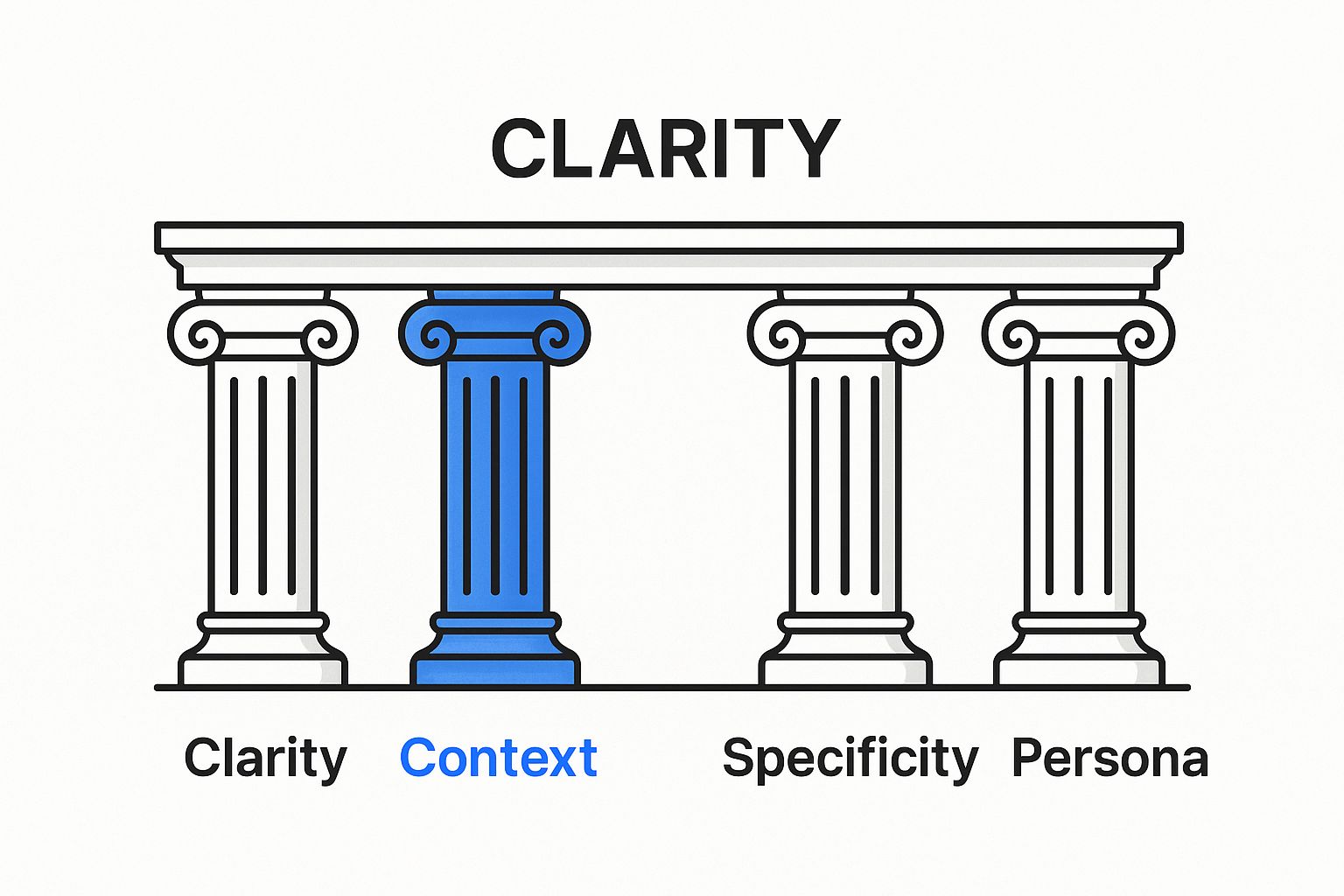

The Four Pillars of a Powerful Prompt

Ever wonder what separates a vague, hit-or-miss request from a prompt that delivers exactly what you need on the first try? It’s not magic; it’s all about structure. The most effective prompts are built on four key pillars that work together to guide the AI precisely.

Think of these pillars—Clarity, Context, Specificity, and Persona—as the blueprint for a successful conversation with an AI.

When you get these four elements right, you stop guessing and start getting predictable, high-quality results. Let’s break down each one.

Pillar 1: Clarity

Clarity is your foundation. It’s all about using simple, direct language and cutting out any room for misinterpretation. If your instructions are fuzzy, the AI’s response will be too. It’s like giving directions: “Go that way” is useless, but “Head north on Main Street for two blocks” gets someone exactly where they need to go.

- Before (Vague): “Tell me about marketing.”

- After (Clear): “Explain the top three digital marketing strategies for a new e-commerce business selling handmade jewelry.”

See the difference? We’ve removed all the guesswork. The AI now knows the exact topic, the target audience, and how many points to cover.

Pillar 2: Context

Context is the background information that gives your request meaning and purpose. It’s like giving the AI a map and compass before sending it on a mission. Without it, the model is flying blind and has to make assumptions—which are often flat-out wrong.

This is especially true for complex tasks. In professional use cases like software development, insufficient context is one of the main reasons for flawed or “hallucinated” AI-generated code. Platforms like the Context Engineer MCP aim to solve this by automatically providing the AI with the relevant project files and dependencies, so the generated code is more likely to be accurate and to fit within the existing architecture.

- Before (No Context): “Write a Python function.”

- After (With Context): “I’m building a web scraper using BeautifulSoup. Write a Python function named `extract_links` that takes a URL as an argument and returns a list of all unique absolute URLs found on that page.”
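For reference, here is one shape the requested function might take. Treat it as a sketch, not the canonical answer: to stay dependency-free it swaps BeautifulSoup for the standard library’s `html.parser`, it drops `#fragment` suffixes so `page#a` and `page#b` count as one URL, and it keeps only http(s) links. The `links_from_html` helper is a hypothetical split that lets the parsing logic be tested without network access.

```python
from html.parser import HTMLParser
from typing import List, Set
from urllib.parse import urldefrag, urljoin, urlparse
from urllib.request import urlopen


class _LinkCollector(HTMLParser):
    """Collects href values from <a> tags in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def links_from_html(html: str, base_url: str) -> List[str]:
    """Resolve every <a href> against base_url; return unique absolute http(s) URLs."""
    parser = _LinkCollector()
    parser.feed(html)
    seen: Set[str] = set()
    result: List[str] = []
    for href in parser.hrefs:
        absolute, _fragment = urldefrag(urljoin(base_url, href))
        if urlparse(absolute).scheme in ("http", "https") and absolute not in seen:
            seen.add(absolute)
            result.append(absolute)
    return result


def extract_links(url: str) -> List[str]:
    """Fetch the page at url and return the unique absolute links found on it."""
    with urlopen(url) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        html = response.read().decode(charset, errors="replace")
    return links_from_html(html, url)
```

Notice how much of this code exists only because the prompt supplied context: the function name, the argument, and the meaning of “unique absolute URLs” all come straight from the “After” version.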

Pillar 3: Specificity

Specificity is where you define the “how.” It’s you telling the AI exactly what you want the final result to look like. Do you need a bulleted list? A JSON object? A 500-word blog post written in a conversational tone? The more specific you are, the better.

One study reported that prompts with specific formatting instructions improved the usability of the final output by over 40%, largely because the results needed far less manual editing. This pillar is a direct line to efficiency.

- Before (Not Specific): “Give me some ideas.”

- After (Specific): “Generate five catchy blog post titles about the benefits of remote work. Each title must be under 60 characters and formatted as a numbered list.”
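A side benefit of a specific prompt is that its rules are checkable, so you can verify the reply automatically instead of eyeballing it. The minimal sketch below assumes the model returns plain text with one numbered title per line; `check_titles` is a hypothetical helper, and measuring length after the “1. ” prefix is our own reading of the 60-character rule.

```python
import re
from typing import List

NUMBERED_ITEM = re.compile(r"^\s*(\d+)\.\s+(.+?)\s*$")


def check_titles(response: str, expected_count: int = 5, max_chars: int = 60) -> List[str]:
    """Return a list of spec violations; an empty list means the response passes."""
    problems = []
    lines = [line for line in response.splitlines() if line.strip()]
    if len(lines) != expected_count:
        problems.append(f"expected {expected_count} items, got {len(lines)}")
    for position, line in enumerate(lines, start=1):
        match = NUMBERED_ITEM.match(line)
        if not match:
            problems.append(f"line {position} is not a numbered item: {line!r}")
            continue
        number, title = int(match.group(1)), match.group(2)
        if number != position:
            problems.append(f"item {position} is numbered {number}")
        if len(title) >= max_chars:  # the spec says *under* 60 characters
            problems.append(f"item {position} is {len(title)} characters long")
    return problems
```

If the list of problems is non-empty, you can feed it back to the model as a follow-up instruction rather than fixing the output by hand.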

Pillar 4: Persona

Finally, assigning a persona tells the AI who it should be. This is a powerful way to shape the tone, style, and vocabulary of the response. Asking an AI to respond as a seasoned financial advisor will give you a completely different answer than asking it to be a witty Gen Z social media manager.

- Before (No Persona): “Explain prompt engineering.”

- After (With Persona): “Act as an expert SEO content writer. Explain the concept of prompt engineering to a beginner audience, using clear analogies and a friendly, encouraging tone.”
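When you call a model through an API instead of a chat window, the persona usually goes in a separate “system” message, with the actual request in a “user” message. The sketch below builds that two-message list in the `role`/`content` shape that many chat APIs accept; field names and where the system prompt lives differ between providers, so treat the exact structure as an assumption and check your provider’s documentation.

```python
from typing import Dict, List


def build_messages(persona: str, task: str) -> List[Dict[str, str]]:
    """Put the persona in a system message and the request in a user message."""
    return [
        {"role": "system", "content": persona},
        {"role": "user", "content": task},
    ]


messages = build_messages(
    persona="Act as an expert SEO content writer.",
    task=(
        "Explain the concept of prompt engineering to a beginner audience, "
        "using clear analogies and a friendly, encouraging tone."
    ),
)
```

Keeping the persona in its own message makes it easy to reuse across many user requests without repeating it in every prompt.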

Getting comfortable with these four pillars is what will take you from asking basic questions to crafting sophisticated instructions that truly unlock what these AI models can do.

Getting Hands-On: Prompting Techniques and Workflows That Actually Work

Knowing the theory behind a good prompt is one thing, but putting it into practice is where the real magic happens. This is the art and science of prompt engineering: moving from ideas to execution with specific techniques that steer an AI’s behavior. The aim isn’t just to get a good result once, but to build a repeatable process that delivers consistent, high-quality output.

Don’t underestimate the impact of a structured approach. In one early-2024 industry survey, around 67% of AI professionals said prompt engineering was a fundamental part of their job. More telling, well-crafted prompts have been reported to boost output accuracy by up to 30% compared with a simple, one-line request, which means far less time spent fixing results. If you’re curious, you can dig into more of these industry-wide prompt engineering stats to see the bigger picture.

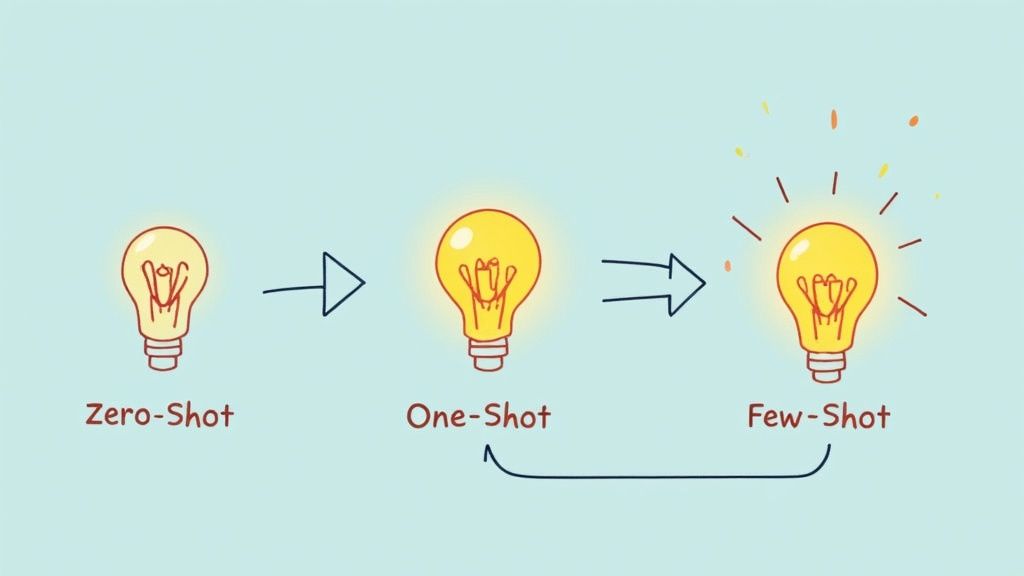

The Building Blocks: Core Prompting Methods

Let’s start with three foundational techniques. The one you choose really just depends on how much of a nudge the AI needs to get the job done right.

- Zero-Shot Prompting: This is the most straightforward method. You just ask the AI to do something without giving it any examples. Think of it as giving a command. It works best for simple, direct requests where the model likely already knows what to do, like “summarize this article” or “translate this sentence into Spanish.”

- One-Shot Prompting: Here, you give the AI a single, solid example of what you want. You show it an input and the corresponding output you’re looking for. This provides a clear template, making it perfect for tasks that need a specific format or style, like classifying customer reviews as “Positive” or “Negative.”

- Few-Shot Prompting: When things get a bit more complex, you can provide a few examples, usually between two and five. This helps the AI pick up on more subtle patterns and deliver more reliable results. It’s fantastic for things like generating product descriptions that need to match a very specific brand voice.

By giving the model a few carefully chosen examples, you’re essentially coaching it in real-time. It’s one of the most powerful tools in your toolbox for getting exactly what you want.
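The three methods differ only in how many worked examples precede the real input, so one builder can produce all of them. A minimal sketch using the review-classification task from the list above; the function name, the `Review:`/`Sentiment:` labels, and the sample reviews are hypothetical.

```python
from typing import List, Tuple


def build_classification_prompt(instruction: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Zero-shot with no examples, one-shot with one pair, few-shot with several."""
    lines = [instruction, ""]
    for review, label in examples:
        lines += [f"Review: {review}", f"Sentiment: {label}", ""]
    # End on an open "Sentiment:" slot so the model completes the pattern.
    lines += [f"Review: {query}", "Sentiment:"]
    return "\n".join(lines)


INSTRUCTION = "Classify each customer review as Positive or Negative."
EXAMPLES = [
    ("Arrived early and works perfectly.", "Positive"),
    ("The strap broke after two days.", "Negative"),
    ("Customer service sorted my issue in minutes.", "Positive"),
]

zero_shot = build_classification_prompt(INSTRUCTION, [], "Great value for the price.")
one_shot = build_classification_prompt(INSTRUCTION, EXAMPLES[:1], "Great value for the price.")
few_shot = build_classification_prompt(INSTRUCTION, EXAMPLES, "Great value for the price.")
```

Using the same labels in every example matters: the model copies the pattern, so inconsistent formatting in the examples tends to show up in the answers.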

Unlocking Complex Reasoning with Chain-of-Thought

Sometimes, a task requires several steps of logic to get to the right answer. Just asking for the final result can lead the AI astray. This is where Chain-of-Thought (CoT) prompting is a game-changer.

Instead of just asking for the answer, you instruct the AI to “think step-by-step” and walk you through its reasoning.

This simple instruction prompts the model to break a big problem down into smaller, more manageable pieces. For example, if you give it a math word problem, a CoT prompt encourages it to first identify the important numbers, then figure out the right calculation, and only then produce the final answer. This often improves accuracy substantially on tasks involving logic, math, or multi-step reasoning. For a deeper look at these methods, feel free to explore our other articles on advanced LLM prompting techniques.
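In code, a Chain-of-Thought prompt is mostly a wrapper around the question, plus a fixed final-answer line so your program can pull the result out of the reasoning. A minimal sketch: the instruction wording and the `Answer:` convention are our own choices rather than a standard, and the sample reply is hand-written, not real model output.

```python
import re
from typing import Optional


def chain_of_thought_prompt(question: str) -> str:
    """Wrap a question with step-by-step instructions and a fixed final-answer line."""
    return (
        f"{question}\n\n"
        "Think step by step. First list the numbers that matter, then write out the "
        "calculation, and only then state the result.\n"
        "End with a final line of the form 'Answer: <value>'."
    )


def parse_final_answer(response: str) -> Optional[str]:
    """Return the value on the last 'Answer:' line, ignoring the reasoning above it."""
    matches = re.findall(r"^Answer:\s*(.+?)\s*$", response, flags=re.MULTILINE)
    return matches[-1] if matches else None


# A hand-written reply standing in for real model output.
sample_reply = (
    "Step 1: 3 boxes of 12 pens is 3 * 12 = 36 pens.\n"
    "Step 2: Giving away 5 leaves 36 - 5 = 31 pens.\n"
    "Answer: 31"
)
print(parse_final_answer(sample_reply))  # 31
```

Separating the reasoning from the answer line also lets you log the steps for debugging while passing only the final value downstream.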

A Practical Workflow Example

So, how does this all come together in the real world? Let’s imagine we’re building an AI-powered system to handle customer service emails.

- Start with Zero-Shot: We’d begin with a simple prompt: “Write a polite reply to a customer who wants a refund.” This gives us a baseline to see how the AI naturally responds.

- Refine with One-Shot: The first response is probably a bit generic. Now we refine it with a single example: “Input: ‘My order arrived broken.’ Output: ‘I’m so sorry to hear your order was damaged in transit. We can send a replacement right away or issue a full refund. Which would you prefer?’”

- Scale with Few-Shot: To handle a wider range of issues, we add more examples covering late deliveries, wrong items, or billing questions. This builds a more robust and adaptable system.

- Implement Chain-of-Thought: For a really tricky support ticket, we could tell the AI to first identify the customer’s core problem, then list possible solutions based on company policy, and finally draft a complete response incorporating that logic.

This iterative process—starting simple and layering in more guidance—is how professionals build reliable and truly effective AI solutions.
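The four stages can be sketched as a single prompt builder whose options you switch on as the workflow matures. This is a minimal sketch under stated assumptions: `support_prompt`, the `Input:`/`Output:` labels, and the wording of the reasoning steps are hypothetical, and in practice you would also paste the relevant company policy into the prompt so the second reasoning step has something to work from.

```python
from typing import List, Sequence, Tuple

BASE_INSTRUCTION = "Write a polite reply to the customer email below."

# Stage 2 uses the single example from the text; stage 3 appends more pairs
# (late deliveries, wrong items, billing questions) to this list.
EXAMPLES: List[Tuple[str, str]] = [
    ("My order arrived broken.",
     "I'm so sorry to hear your order was damaged in transit. We can send a replacement "
     "right away or issue a full refund. Which would you prefer?"),
]

REASONING_STEPS = (
    "Before writing, work through these steps:\n"
    "1. Identify the customer's core problem.\n"
    "2. List possible solutions allowed by company policy.\n"
    "3. Draft a complete reply that uses the best solution."
)


def support_prompt(email: str, examples: Sequence[Tuple[str, str]] = (), step_by_step: bool = False) -> str:
    """Stage 1: no examples. Stages 2-3: one or more examples. Stage 4: add reasoning steps."""
    parts = [BASE_INSTRUCTION]
    for customer_text, reply in examples:
        parts.append(f"Input: {customer_text}\nOutput: {reply}")
    if step_by_step:
        parts.append(REASONING_STEPS)
    parts.append(f"Input: {email}\nOutput:")
    return "\n\n".join(parts)
```

Because each stage only adds to the previous prompt, you can compare the outputs of `support_prompt(email)`, `support_prompt(email, EXAMPLES)`, and `support_prompt(email, EXAMPLES, step_by_step=True)` side by side and keep the simplest version that works.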

The Tools of the Trade for Professional Prompting

When you’re first getting started, tinkering with prompts in a basic AI playground like ChatGPT or Claude is a great way to learn the ropes. But once you decide to build something serious and scalable, you’ll quickly hit a wall.

Suddenly, you’re not just writing one prompt; you’re juggling dozens, maybe even hundreds. How do you keep track of which version works best? How do you stop your team from accidentally overwriting each other’s brilliant ideas? And the biggest question of all: how do you feed the AI consistent, relevant information across a complex project? A simple chat window just wasn’t built for that.

Graduating from Basic Playgrounds

This is exactly why dedicated prompt engineering platforms exist. They bring much-needed structure to the creative process, turning what feels like an art form into a disciplined, repeatable science. These tools are designed to manage the entire lifecycle of a prompt—from the first draft to testing, deployment, and ongoing tweaks.

A solid platform will usually give you a few essential features:

- Version Control: Think of it like Git for your prompts. You can save, track, and roll back to earlier versions, so you never lose good work.

- Team Collaboration: Shared spaces where your whole team can build, comment on, and perfect prompts together.

- A/B Testing: The ability to run different prompts against each other in a controlled way to see which one gets the best results.

- Context Management: A system for organizing all the background information the AI needs and injecting it into your prompts automatically.
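To make the first and third features concrete, here is a hypothetical in-memory sketch of prompt versioning with rollback, plus deterministic A/B assignment. Real platforms persist versions in a database and judge A/B tests from logged outcomes; `PromptRegistry` and `ab_variant` are illustrative names, not any product’s API.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Sequence


@dataclass
class PromptRegistry:
    """Hypothetical in-memory prompt store: each save appends a new, numbered version."""
    history: Dict[str, List[str]] = field(default_factory=dict)

    def save(self, name: str, template: str) -> int:
        versions = self.history.setdefault(name, [])
        versions.append(template)
        return len(versions)  # version numbers start at 1

    def get(self, name: str, version: Optional[int] = None) -> str:
        versions = self.history[name]
        if version is None:
            return versions[-1]
        if not 1 <= version <= len(versions):
            raise ValueError(f"{name} has no version {version}")
        return versions[version - 1]

    def rollback(self, name: str, version: int) -> int:
        """Re-save an old version as the newest one, so nothing in the history is lost."""
        return self.save(name, self.get(name, version))


def ab_variant(user_id: str, variants: Sequence[str]) -> str:
    """Deterministically bucket a user so they always see the same prompt variant."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return variants[int.from_bytes(digest[:8], "big") % len(variants)]
```

Rolling back by re-saving (rather than deleting newer versions) mirrors how Git’s `revert` works: the history keeps a record of what was tried. Hashing the user ID keeps each user in one bucket across sessions, which keeps the comparison between variants clean.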

The Next Step: Integrated Context Platforms

For developers building real software, context isn’t just a nice-to-have; it’s everything. This is where more advanced tools like the Context Engineer MCP are changing the game. They don’t just manage the text of your prompts—they integrate directly into your development environment to feed the AI the right project information at the right time.
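The basic idea of feeding project information into a prompt can be sketched in a few lines. To be clear, this is not how the Context Engineer MCP works internally (the text doesn’t describe that); it’s a naive, hypothetical illustration that ranks files by how many identifiers they share with the task and injects the best matches within a size budget. Real tools may use much richer signals, and this version would also count common English words as matches.

```python
import re
from typing import Dict, Set


def _identifiers(text: str) -> Set[str]:
    """Lowercased identifier-like tokens (function names, variables, plain words)."""
    return set(re.findall(r"[a-z_][a-z0-9_]*", text.lower()))


def build_context_prompt(task: str, files: Dict[str, str], budget_chars: int = 4000) -> str:
    """Inject the files that share the most identifiers with the task, within a size budget."""
    task_ids = _identifiers(task)
    ranked = sorted(files.items(), key=lambda item: len(task_ids & _identifiers(item[1])), reverse=True)
    sections, used = [], 0
    for path, content in ranked:
        if not task_ids & _identifiers(content):
            break  # sorted by overlap, so every remaining file is unrelated
        section = f"### {path}\n{content}\n"
        if used + len(section) > budget_chars:
            continue  # too big for the remaining budget; try the next file
        sections.append(section)
        used += len(section)
    return "Project context:\n\n" + "\n".join(sections) + f"\nTask: {task}"
```

The character budget stands in for a model’s context window: even with perfect ranking, you can only inject so much, which is why selecting the *right* files matters more than including all of them.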

The Context Engineer platform was built to solve this exact problem.

It isn’t just a place to write prompts. It’s a structured system for managing an entire AI-powered development workflow, from planning all the way to coding.

Platforms like this help address these scaling problems. They give you a central hub for prompt management, testing, and, most importantly, deep context integration straight from your codebase. For professionals looking to build reliable and sophisticated AI systems, this approach is a significant step forward. Many of these workflow principles are also covered in our guide to the top AI coding assistant tools.

By moving to a professional toolkit, you stop just asking an AI questions and start strategically engineering its responses for predictable, high-quality results.

The Future of Human and AI Collaboration

If you think a prompt engineer just sits around all day writing the “perfect” sentence, think again. That’s already becoming an outdated view. The skillset is evolving as fast as the AI models themselves, and the role is quickly becoming much more strategic.

We’re moving away from the idea of a lone genius hand-crafting individual prompts. The future is about designing and overseeing complex, automated AI systems where the human acts more like an architect than a simple wordsmith.

The Rise of Automated Prompt Optimization

A big part of this shift is thanks to automated prompt optimization. Instead of a person spending hours tweaking a single phrase, we now have systems where AI helps fine-tune its own instructions. It’s a powerful feedback loop between human and machine.

Optimizers such as MIPRO (Multi-prompt Instruction PRoposal Optimizer, from the DSPy framework) propose candidate instructions and few-shot examples, score them against a set of examples, and use those scores to refine prompts automatically. Reported results are promising, with accuracy gains of 10-20% over hand-written prompts in some zero-shot and few-shot settings, though the gains vary by task. If you’re curious about how automation is busting old myths, you can dig deeper into the facts and fiction of prompt engineering.
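MIPRO is far more sophisticated, but the loop at the heart of automated prompt optimization (score candidate prompts on labeled examples, keep the best) can be sketched in a few lines. This is plain exhaustive selection, not MIPRO’s algorithm. `Model` stands in for whatever function calls your LLM, and the stub model below is invented purely so the sketch runs offline.

```python
from typing import Callable, Sequence, Tuple

# Hypothetical model interface: takes a full prompt, returns the model's text reply.
Model = Callable[[str], str]
DevSet = Sequence[Tuple[str, str]]  # (input, expected output) pairs


def accuracy(model: Model, template: str, dev_set: DevSet) -> float:
    """Fraction of dev examples where the model's reply exactly matches the expected label."""
    hits = sum(model(template.format(input=x)).strip() == y for x, y in dev_set)
    return hits / len(dev_set)


def pick_best_prompt(model: Model, candidates: Sequence[str], dev_set: DevSet) -> Tuple[str, float]:
    """Score every candidate template and return the best one with its score."""
    scored = [(template, accuracy(model, template, dev_set)) for template in candidates]
    return max(scored, key=lambda pair: pair[1])


# Stub model for the demo: it only answers in one word when told to.
def stub_model(prompt: str) -> str:
    if "Answer with one word" not in prompt:
        return "I think this review sounds positive."
    return "Positive" if "love" in prompt.lower() else "Negative"


DEV_SET = [("I love it", "Positive"), ("Broke in a day", "Negative")]
CANDIDATES = [
    "Classify this review: {input}",
    "Classify the sentiment. Answer with one word, Positive or Negative.\nReview: {input}",
]
best_template, best_score = pick_best_prompt(stub_model, CANDIDATES, DEV_SET)
```

Here the second template wins because its explicit format instruction makes the replies match the labels exactly. Optimizers like MIPRO automate the harder part this sketch skips: generating good candidates in the first place and searching the space efficiently.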

This doesn’t mean the prompt engineer is going away. It means the job is getting bigger. It’s becoming less about the words and more about the entire architecture of the conversation.

Far from becoming obsolete, the role is growing in scope. The focus is shifting from simple instruction to high-level system design, ethical oversight, and ensuring AI outputs align with critical business goals.

The Prompt Engineer as a Strategic Role

So what does this new, more strategic role actually look like? The responsibilities are expanding to include things like:

- System Design: Building entire workflows where multiple AI agents work together, each guided by its own specialized set of instructions.

- Ethical Oversight: Serving as the essential human guardrail to make sure AI systems are fair, unbiased, and produce safe, reliable results.

- Goal Alignment: Taking big-picture business objectives and translating them into an effective AI strategy that actually delivers value.
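The system-design responsibility can be pictured as a pipeline of agents, each with its own instructions, where one agent’s output becomes the next agent’s input. A minimal sketch under stated assumptions: `Model` is a hypothetical stand-in for a real LLM call, and the three agents and their instructions are invented for illustration. Returning every intermediate output, not just the last one, is what gives a human reviewer something to oversee.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical model interface: (system instructions, input text) -> output text.
Model = Callable[[str, str], str]


@dataclass
class Agent:
    name: str
    instructions: str  # each agent gets its own specialized instructions


def run_pipeline(agents: List[Agent], task: str, model: Model) -> Dict[str, str]:
    """Run agents in order, feeding each one's output to the next; keep every intermediate result."""
    outputs: Dict[str, str] = {}
    current = task
    for agent in agents:
        current = model(agent.instructions, current)
        outputs[agent.name] = current
    return outputs


AGENTS = [
    Agent("planner", "Outline the key points a reply to this ticket must cover."),
    Agent("writer", "Write a customer reply that covers every point in this outline."),
    Agent("reviewer", "Check this reply for tone and policy issues and return a corrected version."),
]
```

A sequential chain is the simplest arrangement; the same `Agent` records could just as well be wired into branches or loops once the workflow needs them.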

You can already see this evolution in the tools being built. Platforms like the Context Engineer MCP are designed for this future. They go way beyond a simple text box, allowing you to manage the entire context and workflow of an AI-powered system. This change solidifies the prompt engineer’s place not just as a job, but as a career that’s fundamental to how we’ll work with AI for years to come.

Still Have Questions About Prompt Engineering?

As you start exploring how to talk to AI, a few questions tend to pop up again and again. Let’s tackle some of the most common ones to clear things up and give you a solid foundation.

Do I Need to Know How to Code to Get Good at This?

Absolutely not. While coding is a huge advantage if you’re building AI into an app, the real core of prompt engineering is all about logic, creativity, and communication.

Many of the best prompt engineers I’ve met have backgrounds in writing, linguistics, or even psychology—not just computer science. The most crucial skill is your ability to think through a problem and explain what you need, step by step.

Is Prompt Engineering Just a Fad That Will Disappear?

It’s definitely evolving, not disappearing. The simple act of writing a one-line prompt might become easier for everyone, but the need for strategy and expertise is only getting bigger.

The job is shifting from just writing instructions to designing complex conversations, ensuring AI acts ethically, and making sure the entire system works for a business. It’s becoming less of a task and more of a strategic role.

We’re moving past just writing the “perfect sentence.” The future is about architecting intelligent systems, and a smart human in the loop is the most important part of making AI actually work.

What’s the Best Way to Start Learning?

The best advice? Just start doing it. Jump into free tools like ChatGPT or Claude and start experimenting. See what happens when you give different instructions. Pay attention to what works, what fails, and try to figure out why.

You can also speed things up by joining online communities or following experienced folks in the field. There’s no substitute for hands-on practice.

Ready to go beyond basic prompts and build truly smart, context-aware AI solutions? Explore how Context Engineering gives you the tools to manage complex AI workflows and sharply reduce hallucinations. Learn more about building with Context Engineering.