Before you write another prompt, consider this: the market for prompt-related skills, a core component of context engineering, was valued at $380.12 billion in 2024 and is projected to soar past $505 billion in 2025. This isn’t just hype; it reflects a fundamental shift in how businesses create value with AI. The secret isn’t just what you ask an AI, but how you inform it.

Context engineering is the discipline of building an information ecosystem around an AI to ensure its outputs are accurate, relevant, and reliable. It’s the difference between hiring a genius consultant and giving them a blank notebook versus handing them a meticulously prepared briefing file on your company, your customers, and your specific goals.

Why Your AI Needs More Than Just a Prompt

Many businesses hit a wall with AI. They access a powerful model like GPT-4, feed it a prompt, and get a generic, inconsistent, or factually incorrect response. This isn’t a failure of the AI; it’s a failure of communication. A simple prompt is like asking that new genius consultant, “How can we increase sales?” You’ll get a textbook answer, but it will be uselessly disconnected from your business reality.

This is where context engineering creates a decisive advantage. It’s the practice of designing a system that automatically provides the AI with the right information, in the right format, at the right moment. Forget isolated commands; think of it as handing a detective a complete case file. You are systematically setting the AI up for success.

The Shift from Prompts to Context

Initially, the focus in AI was on “prompt engineering”—the art of finding the perfect words to elicit a desired result. While a useful skill, it represents only one component of a much larger equation. As AI applications grow in sophistication, the focus is shifting from crafting the magic words in a single prompt to building a structured environment for the AI to operate within.

A well-structured context includes several key elements that guide the AI:

- Direct Instructions: The ground rules. Define the AI’s persona, tone, and output format.

- Relevant Data: Provide access to proprietary information—internal documents, customer conversation histories, or real-time data from your systems.

- Useful Tools: Enable the AI to interact with other software via APIs, allowing it to check inventory, book meetings, or query a database.

The critical insight is this: when an AI fails, it’s rarely because the model lacks intelligence. It’s because we failed to provide the necessary context to make a correct decision.

This evolution from simple prompts to a rich, contextual environment is a strategic leap. It’s the difference between asking a single question and having a productive, ongoing collaboration.

How Context Engineering Elevates Simple Prompts

| Aspect | Traditional Prompting | Context Engineering |
|---|---|---|
| Focus | The “magic words” in a single query. | The entire information ecosystem around the query. |
| Input | A standalone instruction or question. | Instructions, real-time data, user history, and tools. |
| Goal | Get a good answer to one specific question. | Achieve a reliable, high-quality business outcome consistently. |
| Analogy | Asking a stranger for directions. | Giving a professional driver a destination, a map, and real-time traffic data. |

Ultimately, context engineering transforms a generalist AI into a specialist that understands your specific business needs, delivering far more value than a simple question-and-answer approach ever could.

The economic incentive is massive. The market for prompt engineering, a foundational skill within context engineering, was valued at USD 380.12 billion in 2024. Projections show it rocketing to over USD 505 billion in 2025. This growth, fueled by AI adoption in healthcare, finance, and e-commerce, underscores the immense value of effective AI communication. For a detailed forecast, you can read the full research about the prompt engineering market. By mastering context, you turn your AI from a novelty into a dependable, high-impact business asset.

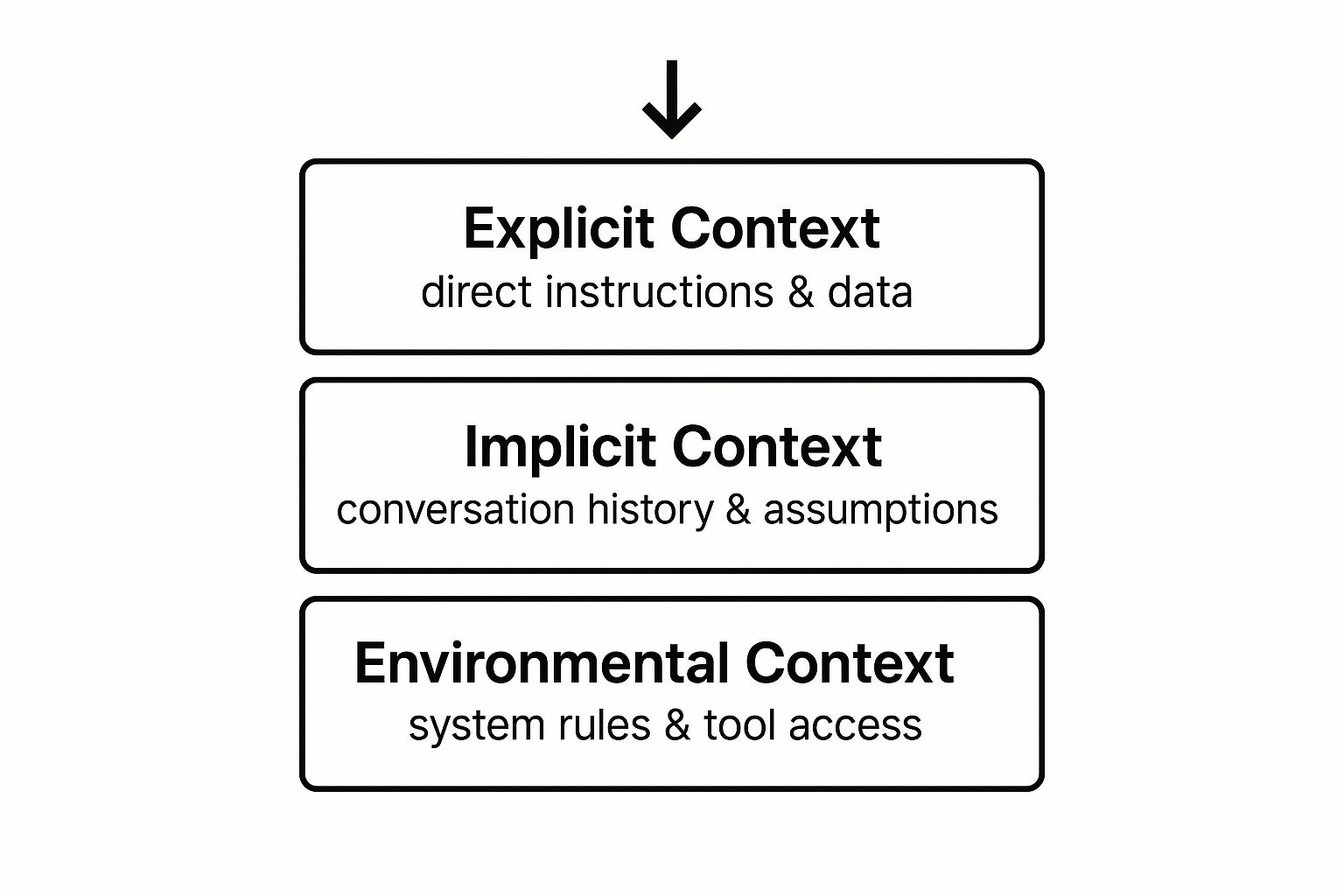

Understanding the Layers of AI Context

To implement context engineering effectively, you must understand that “context” is not a single entity but a layered information package that shapes an AI’s reasoning. When an AI produces a suboptimal response, it’s typically because one of these layers is missing or poorly defined.

Consider an AI-powered customer service agent. Its operational “world” can be broken down into three distinct layers of information.

The accompanying infographic illustrates this hierarchy, with immediate instructions at the top and foundational rules and tools at the base.

This structure is hierarchical. Direct instructions are the tip of the iceberg; the real power and reliability come from the foundational system rules and tool access.

Explicit Context: The Direct Briefing

The top layer, Explicit Context, is the information you provide the AI for a specific, immediate task. It is the “here and now” data that sets the scene.

For our AI customer service agent, this includes:

- The verbatim customer query, e.g., “My package hasn’t arrived.”

- A specific product manual or FAQ page provided to answer the question.

- Direct instructions, such as, “Keep the tone friendly but professional.”

While this layer is easy to control, relying solely on explicit context forces the AI to start from scratch with every interaction. It lacks memory or broader awareness, making it inefficient for any complex task.

Implicit Context: The Shared Understanding

The middle layer is Implicit Context. This is the AI’s short-term, conversational memory—the shared knowledge accumulated during an interaction. It is what prevents an AI from repeatedly asking the same questions.

This is the conversational glue. Effective management of implicit context allows the AI to remember what was said moments ago, creating a fluid, human-like experience.

This context is built dynamically from the conversation. When a customer states, “My order number is 12345,” the AI must retain this information for subsequent steps. A failure here results in the frustrating chatbot loops that are a hallmark of poor implicit context management.
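One way to picture implicit context is as a small memory object that sits beside the conversation. The sketch below is a minimal illustration, not a production design: `ConversationMemory`, its fields, and the regular expression are hypothetical choices made for this example, and a real system would likely use more robust extraction.

```python
import re
from dataclasses import dataclass, field


@dataclass
class ConversationMemory:
    """Short-term memory for one conversation (the implicit context)."""
    turns: list[tuple[str, str]] = field(default_factory=list)  # (role, text)
    facts: dict[str, str] = field(default_factory=dict)         # remembered details

    def add_user_turn(self, text: str) -> None:
        self.turns.append(("user", text))
        # Hypothetical pattern: matches "order number is 12345" or "order #12345".
        match = re.search(
            r"order\s*(?:number\s*(?:is\s*)?|#)\s*(\d+)", text, re.IGNORECASE
        )
        if match:
            self.facts["order_number"] = match.group(1)

    def missing(self, required: list[str]) -> list[str]:
        """Return the required facts the customer has not supplied yet."""
        return [name for name in required if name not in self.facts]


memory = ConversationMemory()
memory.add_user_turn("My package hasn't arrived.")
print(memory.missing(["order_number"]))  # ['order_number'], so ask for it once
memory.add_user_turn("My order number is 12345.")
print(memory.missing(["order_number"]))  # [], so there is no need to ask again
```

Checking `missing(...)` before every question is what keeps the assistant from falling into the repetitive loop described above.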

Environmental Context: The Operational Reality

The foundational layer is Environmental Context. This is the permanent, system-level information that defines the AI’s role, capabilities, and the rules it must follow. It is the “world” it operates within.

This is where the most impactful context engineering occurs. For our AI agent, this layer includes:

- System Prompts: A permanent set of instructions defining its identity, e.g., “You are a helpful support agent for Acme Inc.”

- Tool Access: An API connection allowing it to check real-time order status in the company’s database.

- Knowledge Bases: A connection to the entire library of company policies and technical guides, available for retrieval at any time.

Understanding how these three layers interact is the core of context engineering. It is how you transform a generic language model into a specialized, high-performing tool that understands your business and delivers tangible value.
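To make the interaction between the layers concrete, here is a minimal sketch of how they might be assembled into a single request. `ContextBundle` and its field names are hypothetical, and the role/content message shape is an assumption based on a convention many chat APIs share; adapt it to whatever your provider expects.

```python
from dataclasses import dataclass, field


@dataclass
class ContextBundle:
    # Environmental context: stable, system-level setup.
    system_prompt: str
    tool_names: list[str] = field(default_factory=list)
    # Implicit context: what has been said so far in this conversation.
    history: list[dict[str, str]] = field(default_factory=list)
    # Explicit context: material supplied for the current request only.
    reference_text: str = ""
    task_instruction: str = ""

    def to_messages(self, user_query: str) -> list[dict[str, str]]:
        """Combine all three layers into one ordered message list."""
        system = self.system_prompt
        if self.tool_names:
            system += "\nAvailable tools: " + ", ".join(self.tool_names)
        explicit = user_query
        if self.reference_text:
            explicit += "\n\nReference material:\n" + self.reference_text
        if self.task_instruction:
            explicit += "\n\nInstruction: " + self.task_instruction
        return [
            {"role": "system", "content": system},
            *self.history,
            {"role": "user", "content": explicit},
        ]


bundle = ContextBundle(
    system_prompt="You are a helpful support agent for Acme Inc.",
    tool_names=["check_order_status"],  # hypothetical tool name
    history=[
        {"role": "user", "content": "My order number is 12345."},
        {"role": "assistant", "content": "Thanks, I have your order number."},
    ],
    reference_text="(relevant FAQ or manual excerpt goes here)",
    task_instruction="Keep the tone friendly but professional.",
)
messages = bundle.to_messages("My package hasn't arrived.")
for message in messages:
    print(message["role"], "->", message["content"].splitlines()[0])
```

Keeping the layers as separate fields makes it easier to see which one is missing when a response goes wrong.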

The Core Techniques for Engineering Context

Let’s transition from theory to application. Context engineering is executed through a set of specific techniques used to guide AI behavior. These are the fundamental building blocks for creating reliable and effective AI systems.

We will analyze three of the most powerful methods.

Each technique serves a specific purpose, much like a carpenter uses a saw, hammer, and drill for different tasks. By combining them, you can build sophisticated AI applications that deliver consistent, high-quality results.

Prompt Chaining

Attempting to generate a detailed market analysis report with a single, massive prompt is a common mistake that leads to generic, unfocused, and often inaccurate outputs. The cognitive load is too high for a single step.

Prompt Chaining solves this by decomposing a large task into a sequence of smaller, interconnected prompts. Each step builds upon the output of the previous one, creating a logical workflow that guides the AI toward a complex final goal.

For the market analysis report, a chain would look like this:

- Prompt 1: “Identify the top three competitors for a productivity app targeting remote workers.” The AI performs a focused research task.

- Prompt 2: “Using the provided list of competitors, analyze the primary strengths and weaknesses of each.” The AI’s task is now clearly constrained.

- Prompt 3: “Based on the competitive analysis, write a 200-word summary outlining a key market opportunity for our new app.”

Chaining prompts ensures quality control at each stage and provides a structured path for the AI. This methodical approach dramatically improves the quality and reliability of the final output.
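The three-step chain above can be sketched in a few lines. This is an illustration under stated assumptions: `call_model` stands for whatever function sends a prompt to your model and returns text, and `fake_model` is a hypothetical stand-in so the example runs without an API key.

```python
from typing import Callable

ModelFn = Callable[[str], str]  # any function that maps a prompt to model text


def run_market_analysis_chain(call_model: ModelFn) -> dict[str, str]:
    """Run three prompts in sequence, feeding each output into the next."""
    competitors = call_model(
        "Identify the top three competitors for a productivity app "
        "targeting remote workers."
    )
    analysis = call_model(
        "Using the provided list of competitors, analyze the primary "
        "strengths and weaknesses of each.\n\nCompetitors:\n" + competitors
    )
    summary = call_model(
        "Based on the competitive analysis, write a 200-word summary outlining "
        "a key market opportunity for our new app.\n\nAnalysis:\n" + analysis
    )
    return {"competitors": competitors, "analysis": analysis, "summary": summary}


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call, so the sketch runs offline."""
    return f"<output for: {prompt.splitlines()[0][:40]}...>"


result = run_market_analysis_chain(fake_model)
for step, text in result.items():
    print(step, "=>", text)
```

Because each intermediate result is returned, you can inspect or validate the competitor list before the later steps build on it.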

Retrieval-Augmented Generation (RAG)

A significant limitation of standard AI models is their static knowledge base; they are unaware of events or data created after their training cut-off date. They don’t know your latest sales figures, internal policies, or recent project updates.

Retrieval-Augmented Generation (RAG) addresses this by connecting the AI to your private, up-to-date knowledge bases.

Think of RAG as giving the AI an “open-book exam.” Before answering a query, the AI first retrieves relevant information from a designated data source—such as an internal company wiki, a product database, or a library of support tickets.

RAG grounds the AI in factual, current information, which drastically reduces the risk of “hallucination” (fabricating information). This is the bedrock for building trustworthy AI assistants that provide accurate, verifiable answers.

For example, when a customer asks a chatbot, “What is your return policy for items purchased on sale?” a RAG system first searches the company’s knowledge base for the official policy document. It then provides this verified text to the AI, which uses it to formulate a precise answer. The AI is not guessing; it is citing facts you provided.
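The return-policy example can be sketched as a retrieve-then-prompt step. Everything here is illustrative: the policy snippets are made up, and the word-overlap retriever is a toy stand-in for the embedding-based or full-text search a real RAG system would typically use.

```python
import re

# Made-up policy snippets standing in for a real knowledge base.
KNOWLEDGE_BASE = {
    "returns_sale_items": "Items purchased on sale can be returned within 14 days for store credit.",
    "returns_standard": "Full-price items can be returned within 30 days for a refund.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: dict[str, str], top_k: int = 1) -> list[str]:
    """Rank documents by how many query words they share (a toy retriever)."""
    query_terms = tokenize(query)
    ranked = sorted(
        documents.values(),
        key=lambda text: len(query_terms & tokenize(text)),
        reverse=True,
    )
    return ranked[:top_k]


def build_grounded_prompt(query: str, documents: dict[str, str]) -> str:
    """Place the retrieved text ahead of the question so the model can cite it."""
    context = "\n".join(retrieve(query, documents))
    return (
        "Answer using only the policy text below. "
        "If the answer is not there, say you do not know.\n\n"
        f"Policy text:\n{context}\n\nQuestion: {query}"
    )


question = "What is your return policy for items purchased on sale?"
print(build_grounded_prompt(question, KNOWLEDGE_BASE))
```

The instruction to admit when the answer is missing matters as much as the retrieval: it gives the model an alternative to guessing when the search comes back with the wrong document.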

Tool Use and Function Calling

Sometimes, an AI needs to act, not just communicate. Tool Use, also known as Function Calling, gives an AI the ability to interact with external software and systems. It transforms the AI from a conversationalist into an active participant in your workflows.

This technique allows an AI to call pre-defined functions or APIs to perform real-world tasks, moving beyond text generation to tangible action.

Adoption of AI inside everyday tooling is already widespread. The 2025 State of Analytics Engineering Report from dbt Labs found that 70% of analytics professionals already use AI for coding assistance, and 50% use it for documentation. Those figures measure AI assistance in general rather than function calling specifically, but they show that integrating AI with existing tools, a core tenet of context engineering, is becoming an essential skill. You can discover more insights about how professionals are adopting AI from the full report.

Practical examples of Tool Use in action include:

- Scheduling a Meeting: An AI can access a calendar API to find an available slot and book a meeting.

- Updating a CRM: An AI can use a function to log call notes and update a customer record in Salesforce.

- Checking Inventory: An e-commerce bot can call an API to verify real-time product stock levels.
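As a rough sketch of the inventory example, the application side of function calling usually comes down to a registry of allowed functions plus a dispatcher. The JSON shape (`name` and `arguments`), the `check_inventory` function, and the stock data are all assumptions for illustration; real provider APIs define their own tool-call formats.

```python
import json
from typing import Any, Callable

# Made-up stock levels standing in for a real inventory system.
INVENTORY = {"SKU-001": 12, "SKU-002": 0}


def check_inventory(sku: str) -> dict[str, Any]:
    """Hypothetical tool: report how many units of a product are in stock."""
    return {"sku": sku, "in_stock": INVENTORY.get(sku, 0)}


# Only functions registered here can be invoked on the model's behalf.
TOOLS: dict[str, Callable[..., dict[str, Any]]] = {
    "check_inventory": check_inventory,
}


def execute_tool_call(raw_call: str) -> str:
    """Run a tool call the model emitted as JSON and return a JSON result."""
    call = json.loads(raw_call)
    name = call.get("name")
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool: {name}"})
    try:
        result = TOOLS[name](**call.get("arguments", {}))
    except TypeError as exc:  # wrong or missing arguments
        return json.dumps({"error": str(exc)})
    return json.dumps(result)


# In a real system the model would produce this string; here it is hard-coded.
model_output = '{"name": "check_inventory", "arguments": {"sku": "SKU-001"}}'
print(execute_tool_call(model_output))
```

The result string is then passed back to the model as additional context, so its final reply reflects live data rather than a guess.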

Mastering these techniques—Chaining, RAG, and Tool Use—provides powerful levers for controlling AI behavior. With them, you can engineer context to build systems that are not just intelligent, but also accurate, useful, and deeply integrated into your business operations.

See Context Engineering in Action

Want to see how context engineering works with real tools like Cursor, Claude Code, and Windsurf? Watch our practical tutorial demonstrating these principles in actual development workflows:

Learn how to set up the Context Engineer MCP, manage context for AI coding agents, generate comprehensive PRDs, and control information flow for consistent, reliable results.

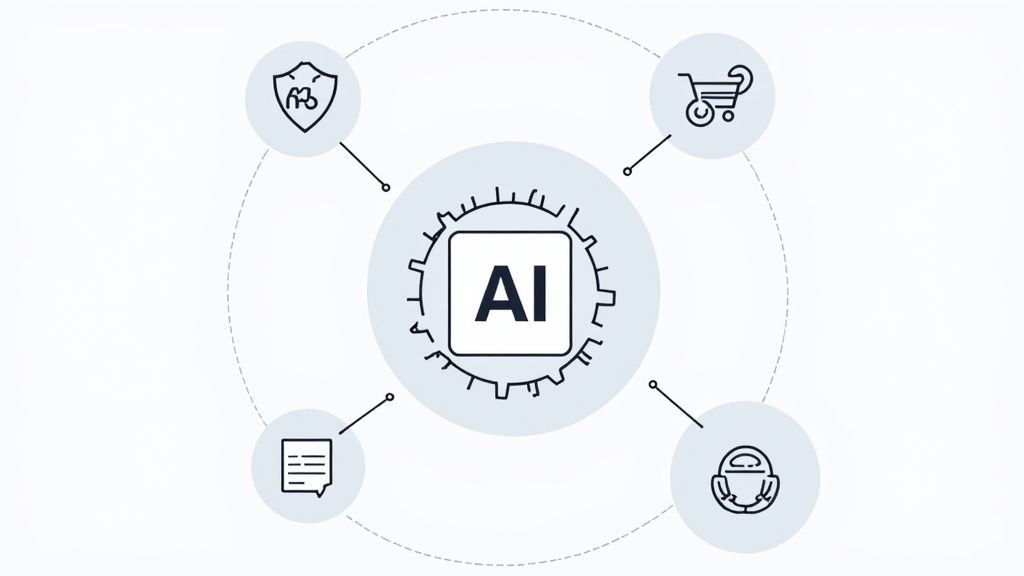

The Business Impact of Smarter AI

Implementing context engineering is not just a technical exercise; it’s a business strategy that yields measurable returns. By moving beyond simplistic prompting, your AI transforms from a novelty into a driver of growth, efficiency, and customer satisfaction. The impact is felt across several key business areas.

Whether through Retrieval-Augmented Generation (RAG) or Prompt Chaining, this disciplined approach makes AI performance predictable, reliable, and manageable, leading to tangible improvements on the balance sheet.

Drastically Improve AI Accuracy and Trust

The most immediate benefit is a significant reduction in AI “hallucinations.” When an AI fabricates information, it erodes user trust and can expose your business to risk. Techniques like RAG directly mitigate this.

By grounding the AI in your own proprietary, up-to-date information, RAG compels it to generate answers based on verified facts rather than its generalized training data. For a customer support bot, this means providing correct policy information every time. For an internal knowledge tool, it means citing the latest project documentation.

This shifts the AI from answering out of generalized training data toward answering from sources you supplied. Generation is still probabilistic, but when users can check an answer against a cited document and find it accurate, they begin to trust it. This trust drives higher adoption and more meaningful utilization of the tools you build.

Enable Complex Workflow Automation

A basic AI can answer a single question. A context-engineered AI can execute an entire multi-step business process. By combining prompt chaining and tool integration, you can build an AI agent that manages a complex workflow from start to finish, freeing up your team for higher-value work.

Consider an AI that doesn’t just draft a sales email, but instead:

- Retrieves the latest customer notes from your CRM (Tool Use).

- Selects the appropriate product one-pager from your internal library (RAG).

- Writes a personalized outreach email using that specific information (Prompt).

- Schedules a follow-up task in the sales representative’s calendar (Tool Use).

This level of automation transforms your AI from a text generator into a productive team member capable of handling routine yet complex tasks that consume significant human hours.

Unlock Hyper-Personalization at Scale

Generic, one-size-fits-all customer interactions are no longer sufficient. Context engineering empowers you to create deeply personalized experiences for every user, automatically. By providing the AI with a customer’s specific history, preferences, and recent interactions, every response can be tailored.

For example, an e-commerce AI can analyze a customer’s purchase history and browsing behavior to recommend products they will actually value. A support agent can greet a returning customer with full knowledge of their ticket history, creating a seamless and effective conversation.

The market is expanding rapidly as businesses recognize the critical nature of this capability. The AI market in North America, a hub for these practices, was valued at $51.58 billion in 2025, representing staggering growth of over 1,150% from $4.11 billion in 2018. This boom is largely driven by the demand for context-aware AI that can deliver precise, personalized results. You can find more details about the AI market size and its growth drivers in recent reports.

Personalizing at this scale provides a massive competitive advantage that directly increases customer loyalty and lifetime value.

The table below connects specific context engineering techniques to the business value they create and the metrics they improve.

Mapping Techniques to Business Value

| Technique | Business Benefit | Affected KPI |
|---|---|---|
| Retrieval-Augmented Generation (RAG) | Reduces factual errors and builds user trust. | Reduction in support tickets; increased user adoption rates |
| Prompt Chaining | Automates complex, multi-step workflows. | Improved operational efficiency; reduced manual task time |
| Tool Use & Function Calling | Enables AI to interact with other systems. | Faster process completion; increased team productivity |
| In-Context Learning (Few-Shot) | Creates hyper-personalized user experiences. | Higher customer engagement; increased customer lifetime value (CLV) |

Ultimately, each of these methods provides a clear pathway from a technical implementation to a measurable improvement in how your business runs.

How Context Engineering Works in the Real World

Theory is valuable, but seeing context engineering solve tangible business problems makes its power clear. Companies across industries are transforming general-purpose AI into specialized tools that deliver exceptional value.

Let’s examine a few real-world examples.

Healthcare Diagnostic Assistance

Problem: The volume of new medical research is overwhelming. With thousands of studies published weekly, it is impossible for clinicians to stay current. This creates a risk of diagnoses being based on outdated information.

Solution: A healthcare system implements an AI assistant using Retrieval-Augmented Generation (RAG). They connect the AI to a private, continuously updated database of medical journals, clinical trial results, and treatment protocols. When a doctor requests a summary of a specific condition, the AI first retrieves the latest research from its trusted source before generating a response.

Result: The doctor receives a summary grounded in the most current evidence. This doesn’t replace their professional judgment; it augments it. The AI acts as a tireless research assistant, helping to reduce diagnostic error and support better-informed clinical decisions.

By grounding the AI in a trusted knowledge base, context engineering turns a potential source of misinformation into a reliable tool for medical professionals. This builds the critical trust needed for adoption in high-stakes fields.

E-commerce Personalized Shopping

Problem: Large online stores can be overwhelming, leading to choice paralysis and cart abandonment. A basic FAQ chatbot is insufficient when a customer needs genuine guidance to make a purchasing decision.

Solution: An e-commerce brand develops a personalized shopping bot using Prompt Chaining and real-time customer data. The system guides the shopper through a multi-step journey:

- Discovery: It analyzes the customer’s browsing history and past purchases to suggest relevant products.

- Comparison: As the customer expresses interest, the bot retrieves product specifications and reviews to facilitate side-by-side comparisons.

- Checkout: It assists with the final purchase, answering last-minute questions about shipping or returns.

Result: The customer experience feels less like navigating a website and more like consulting with a knowledgeable expert. This streamlined journey increases conversion rates. Industry research has reported that personalization of this kind can lift sales by 5-15% and improve marketing ROI by 10-30%.

Software Development and Debugging

Problem: Developers spend a significant portion of their time not writing new code, but navigating existing codebases, understanding dependencies, and debugging. A generic AI assistant is ineffective because it lacks context about a specific project’s architecture.

Solution: A development team integrates an AI coding partner into its workflow using Function Calling. They provide the AI with “tools” to interact with their live codebase—it can read files, trace dependencies, and analyze the project’s architecture in real time.

Result: An engineer can now ask a highly specific question, such as, “What function is causing this error in the user authentication module?” The AI uses its tools to investigate the actual code and provide a precise, actionable answer. This dramatically accelerates development cycles, with some teams reporting that AI tools now handle over 50% of their code. The AI becomes a true collaborator, not just a sophisticated autocomplete.
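The “tools” in this scenario can be quite small. The sketch below shows two hypothetical ones for a Python codebase, `read_file` and `find_function`, built only on the standard library; the names and the character limit are assumptions for illustration, and a real coding assistant would expose a richer set.

```python
import ast
from pathlib import Path


def read_file(path: str, max_chars: int = 4000) -> str:
    """Tool: return the start of a source file, capped to limit context size."""
    return Path(path).read_text(encoding="utf-8")[:max_chars]


def find_function(root: str, name: str) -> list[str]:
    """Tool: list 'file:line' locations where a function called `name` is defined."""
    hits = []
    for file in sorted(Path(root).rglob("*.py")):
        try:
            tree = ast.parse(file.read_text(encoding="utf-8"))
        except (SyntaxError, UnicodeDecodeError):
            continue  # skip files that cannot be parsed
        for node in ast.walk(tree):
            is_function = isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))
            if is_function and node.name == name:
                hits.append(f"{file.relative_to(root)}:{node.lineno}")
    return hits
```

With tools like these registered, a question about the authentication module can be answered by locating the relevant function and reading the actual file rather than guessing from general knowledge.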

Your First Steps in Context Engineering

Moving from theory to practice in context engineering is about methodical planning, not complex coding. It requires a structured approach to building reliable, accurate AI systems, saving you from the frustration of endless trial-and-error.

Think of it like building with LEGOs. A plan is essential. This same structured approach will help you build an AI system that consistently performs as intended.

Step 1: Define a Specific Goal

First, clarify your objective. Before writing a single prompt, define what success looks like. A vague goal like “improve customer support” is unactionable.

Be specific and measurable. A stronger goal is: “Build an AI assistant that answers questions about our return policy by referencing our internal knowledge base, with the objective of reducing human agent response times by 30%.”

This goal is clear. It specifies the required information (the return policy documents) and provides a concrete metric for success.

Step 2: Inventory Your Context Assets

With a clear goal, gather your materials. What information—your “context assets”—does the AI need? This is analogous to a chef’s mise en place—preparing all ingredients before cooking.

Your assets typically fall into three categories:

- Knowledge: Documents, FAQs, databases, articles. For our example, this is the official return policy document.

- Data: User-specific information, such as a customer’s order history or previous support chat logs.

- Tools: Actions the AI must perform, like a function to look up an order status in your e-commerce system via an API.
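An inventory like this can live in a spreadsheet, but writing it down as data makes gaps easy to spot. The sketch below is one possible shape; `ContextAsset`, `AssetKind`, and the sample entries are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class AssetKind(Enum):
    KNOWLEDGE = "knowledge"
    DATA = "data"
    TOOL = "tool"


@dataclass(frozen=True)
class ContextAsset:
    name: str
    kind: AssetKind
    source: str  # where the asset lives; the values below are placeholders


def missing_kinds(assets: list[ContextAsset]) -> set[AssetKind]:
    """Return the asset categories that the inventory does not cover yet."""
    return set(AssetKind) - {asset.kind for asset in assets}


inventory = [
    ContextAsset("Return policy document", AssetKind.KNOWLEDGE, "internal knowledge base"),
    ContextAsset("Customer order history", AssetKind.DATA, "e-commerce system"),
]
print(missing_kinds(inventory))  # the tool category is still uncovered
```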

Organizing these assets creates the information repository your AI will use to provide helpful, accurate answers instead of fabricating them.

Step 3: Design the Interaction Flow

Next, architect the workflow. Map out the entire process from start to finish. How will the AI utilize the assets you’ve gathered? This is where you select the most appropriate context engineering techniques.

Think of this as storyboarding the AI’s decision-making process. By explicitly defining the sequence of operations, you transform a reactive chatbot into a proactive problem-solver.

For our support bot, the flow might be:

- User asks about returning an item.

- The system uses RAG to retrieve the most relevant section from the return policy document.

- The AI crafts an answer based exclusively on that retrieved text.

- If the user asks about a specific order, the AI uses a tool (function call) to look up their order details.

This design dictates how context is managed at each step, ensuring a logical and efficient process.
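The flow above can be expressed as a single function that decides which context to gather before the model is called. This is a sketch under assumptions: the retriever, order lookup, and model are passed in as plain callables, the stubs are hypothetical, and the order-number pattern is deliberately simple.

```python
import re
from typing import Callable


def handle_message(
    message: str,
    retrieve_policy: Callable[[str], str],
    lookup_order: Callable[[str], str],
    call_model: Callable[[str], str],
) -> str:
    """Follow the support flow: retrieve, optionally call a tool, then answer."""
    # Always ground the answer in the most relevant policy text (RAG).
    context_parts = ["Return policy excerpt: " + retrieve_policy(message)]

    # Only call the order tool when the customer names a specific order.
    order_match = re.search(
        r"\border\s*(?:number\s*)?#?\s*(\d+)", message, re.IGNORECASE
    )
    if order_match:
        context_parts.append("Order details: " + lookup_order(order_match.group(1)))

    # The model is asked to answer from the supplied context only.
    prompt = (
        "Answer using only the context below.\n\n"
        + "\n".join(context_parts)
        + "\n\nCustomer: " + message
    )
    return call_model(prompt)


# Hypothetical stubs so the sketch runs without a real retriever, API, or model.
def stub_retrieve(query: str) -> str:
    return "(most relevant policy section)"


def stub_lookup(order_id: str) -> str:
    return f"order {order_id} (placeholder details)"


def stub_model(prompt: str) -> str:
    return prompt  # echo the prompt to show what the model would see


print(handle_message("Can I return order 12345?", stub_retrieve, stub_lookup, stub_model))
```

Passing the three dependencies in as arguments keeps the flow itself easy to test, since each one can be swapped for a stub.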

Step 4: Test and Refine Iteratively

Your first implementation will not be perfect. The final step is a continuous cycle: test, gather feedback, and refine. Run your system through numerous real-world scenarios to identify weaknesses. Where does it get confused? Is critical information missing? Is the interaction clunky?

Each test provides a learning opportunity. Use the feedback to update your context assets, adjust the interaction flow, and sharpen your prompts. This iterative refinement loop is what transforms a prototype into a robust and genuinely useful AI application.
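A lightweight way to run this loop is to keep a list of scenarios and re-run them after every change. The harness below is a minimal sketch: `Scenario`, `evaluate`, the phrase-matching check, and the sample assistant are hypothetical, and real evaluations often need richer checks than substring matching.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Scenario:
    question: str
    must_include: list[str]  # phrases a correct answer should contain


def evaluate(answer_fn: Callable[[str], str], scenarios: list[Scenario]) -> list[str]:
    """Run every scenario and describe each one whose answer lacks a required phrase."""
    failures = []
    for scenario in scenarios:
        answer = answer_fn(scenario.question).lower()
        missing = [p for p in scenario.must_include if p.lower() not in answer]
        if missing:
            failures.append(f"{scenario.question!r} is missing {missing}")
    return failures


# Hypothetical assistant and scenarios; replace with your system and real cases.
def draft_assistant(question: str) -> str:
    return "You can return most items within 30 days."


scenarios = [
    Scenario("How long do I have to return an item?", ["30 days"]),
    Scenario("Can I return sale items?", ["sale"]),
]
for failure in evaluate(draft_assistant, scenarios):
    print("FAIL:", failure)
```

Each failure points at a gap to close, whether that is a missing document, an unclear instruction, or a tool the assistant did not have.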

Common Questions About Context Engineering

As you begin implementing these concepts, several common questions will likely arise. Let’s address them to build a solid foundation and avoid early pitfalls.

How Is This Different from Prompt Engineering?

This is the most frequent question, and the distinction is significant. Prompt engineering focuses on crafting the perfect single instruction for an AI. It is a specialized and valuable skill, but it is only one piece of a much larger puzzle.

A prompt engineer is like a speechwriter, perfecting the words of a single message. A context engineer is like the event planner, considering the entire experience: the stage (the system), the guest list (the data), and the agenda (the workflow). A brilliant prompt is crucial, but its effectiveness depends entirely on the surrounding context provided to the AI.

What Skills Do I Need for Context Engineering?

You do not need a Ph.D. in machine learning. The most critical skill is a systems-thinking mindset—the ability to see the entire process, not just individual components.

Success depends less on deep coding ability and more on your capacity to:

- Deconstruct a business goal into a sequence of logical steps.

- Identify and organize the necessary information—documents, data, APIs—for the AI to access.

- Empathize with the user to design a smooth and intuitive interaction flow.

The role is closer to that of an information architect than a hardcore programmer.

The primary challenge isn’t writing complex code; it’s designing an intelligent system that provides the AI with the right information at the right time. AI failures are almost always context failures, not model failures.

Do I Need to Be an Expert in a Specific AI Model?

No. The core principles of providing clear instructions, relevant data, and useful tools are model-agnostic. These concepts are equally applicable whether you are using models from OpenAI, Anthropic, or Google.

While different models have unique characteristics and strengths, the fundamental strategies of context engineering remain constant. By mastering these core techniques, your skills will remain valuable regardless of which model you use in the future.

Ready to stop fighting with your AI and start building reliable, complex features with ease? Context Engineering connects directly to your IDE, automatically providing the precise project context AI agents need to eliminate hallucinations and build production-ready code. Get started in two minutes.