The global market for prompt engineering is projected to grow from $505 billion in 2025 to over $6.5 trillion by 2034—a staggering 32.9% compound annual growth rate. This isn’t just a niche skill; it’s the core competency for unlocking the true value of generative AI. At its heart, artificial intelligence prompt engineering is the art and science of crafting precise instructions—or prompts—to get a Large Language Model (LLM) like GPT-5 to deliver exactly what you need.

Think of it as the difference between a blurry photo and a high-resolution image. A vague prompt gets you a fuzzy, generic response. A well-engineered prompt delivers a sharp, actionable, and valuable output. Your prompt is the blueprint for the AI’s success.

What Is AI Prompt Engineering?

Fundamentally, prompt engineering is about structuring your conversation with an AI to get the best possible results. Instead of just throwing a vague question at it, you’re providing the model with a rich recipe of context, specific examples, and clear boundaries. This strategic approach turns a general-purpose AI into a specialized assistant capable of tackling complex tasks with incredible precision.

This skill has rapidly evolved from a “nice-to-have” to a business-critical function. A well-crafted prompt can be the difference between a generic, useless paragraph and a detailed, actionable insight that saves hours of work. It’s a craft that blends creativity with logic, all while trying to understand how these powerful models “think.”

The Anatomy of an Effective AI Prompt

To move beyond basic questions and start architecting powerful instructions, it helps to break down what makes a prompt work. While not every prompt requires all these elements, mastering them will dramatically boost the quality and consistency of your results. Think of them as the essential building blocks for guiding an AI.

A great prompt eliminates ambiguity. It provides clarity and direction, meaning the AI is far less likely to make incorrect assumptions or generate irrelevant content.

Below is a simple breakdown of the core components that make a prompt truly effective.

| Component | Purpose | Simple Example |
|---|---|---|
| Persona | Assigns a specific role to the AI to frame its perspective and tone. | "Act as an expert copywriter specializing in B2B tech." |
| Context | Provides background information the AI needs to understand the request. | "I am writing an email to announce a new software feature." |
| Task | Clearly states the specific action you want the AI to perform. | "Draft a 150-word email that highlights three key benefits." |
| Constraints | Sets boundaries or rules the AI must follow in its response. | "Do not use technical jargon. The tone should be professional yet engaging." |
| Examples | Offers a sample of the desired output format or style. | "Here is a sample opening line: 'Ready to simplify your workflow?'" |

By mastering these components, you give the AI a complete picture of your expectations. This leads to outputs that aren’t just good—they’re genuinely useful and ready to be put to work.
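To make the five components concrete, here is a minimal Python sketch that assembles them into a single prompt string. The `PromptParts` class and `build_prompt` function are illustrative names invented for this example, not part of any library; the component texts are the sample values from the table.

```python
from dataclasses import dataclass


@dataclass
class PromptParts:
    """The five prompt components; all names here are illustrative."""
    persona: str
    context: str
    task: str
    constraints: str
    example: str = ""  # optional: not every prompt needs a sample


def build_prompt(parts: PromptParts) -> str:
    """Join the non-empty components into one prompt, one per paragraph."""
    sections = [parts.persona, parts.context, parts.task,
                parts.constraints, parts.example]
    return "\n\n".join(s.strip() for s in sections if s.strip())


prompt = build_prompt(PromptParts(
    persona="Act as an expert copywriter specializing in B2B tech.",
    context="I am writing an email to announce a new software feature.",
    task="Draft a 150-word email that highlights three key benefits.",
    constraints="Do not use technical jargon. "
                "The tone should be professional yet engaging.",
    example="Here is a sample opening line: 'Ready to simplify your workflow?'",
))
print(prompt)
```

Keeping the components as separate fields makes it easy to change one (say, the persona) while holding the rest constant, which helps when you compare outputs.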

Why Prompt Engineering Is a High-Value Skill

Generative AI has created a vital discipline at the intersection of human creativity and machine intelligence. It’s not just about asking an AI a question; it’s about knowing how to ask—how to frame a request with precision to get a high-quality, useful result that solves a real problem. This is the art and science of prompt engineering, and it has rapidly become one of today’s most valuable skills.

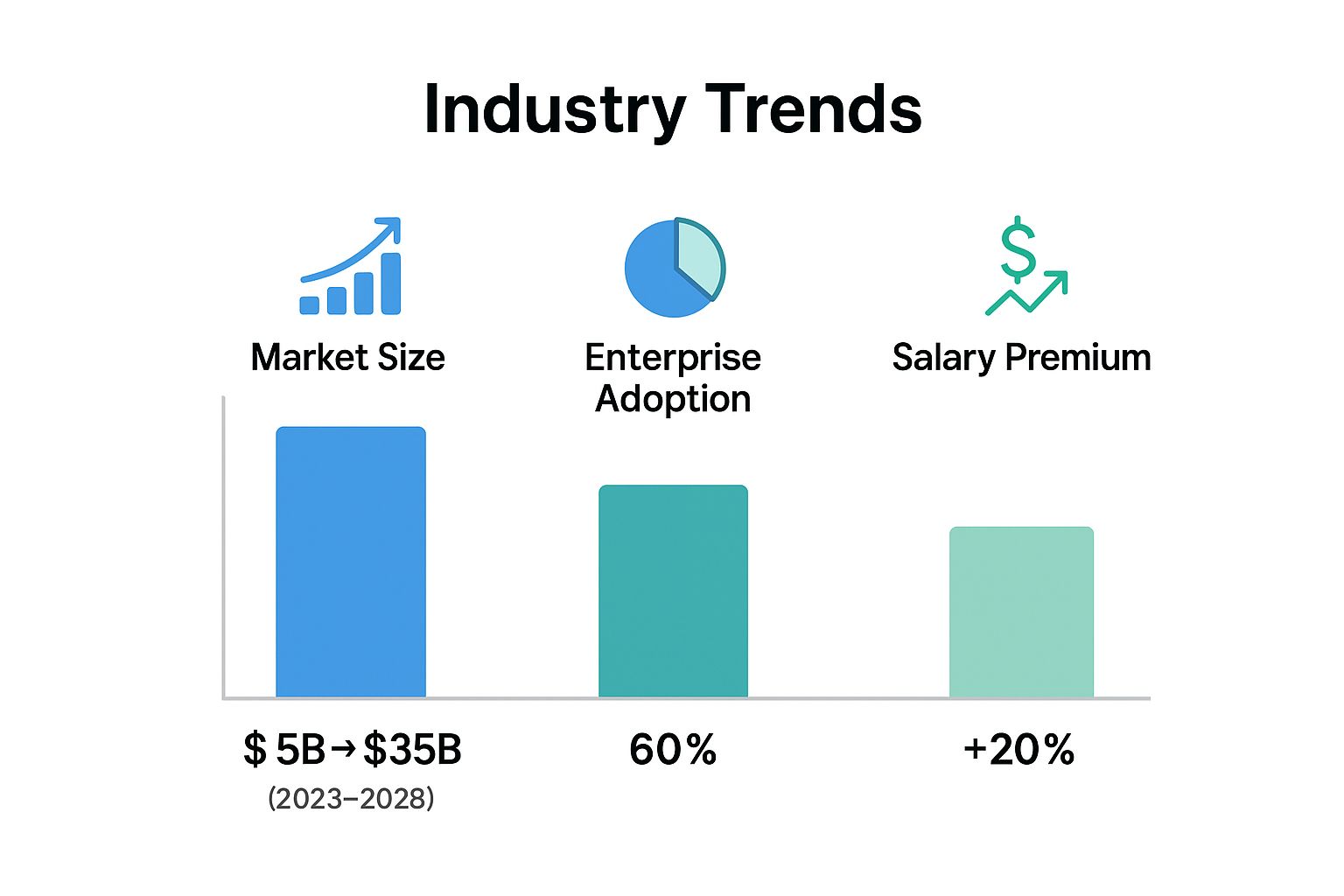

Companies are no longer just experimenting with AI; they are integrating it into core business operations. Research shows that nearly 60% of organizations are already using generative AI in at least one business function. This rapid adoption has created massive demand for professionals who can ensure these expensive AI tools deliver a positive return on investment. After all, a bad prompt leads to wasted time and generic output. A great prompt unlocks tangible value.

The Business Case for Better Prompts

When you master prompt engineering, the results are immediate. It’s the difference between inefficient trial-and-error and achieving goals faster, better, and with more creativity.

Consider its impact in a few key areas:

- Accelerating Software Development: Instead of a developer spending hours on boilerplate code, a well-engineered prompt can generate reliable code snippets, write unit tests, or draft documentation in seconds. This frees up developers to focus on high-level architecture, drastically shortening project timelines.
- Driving Marketing Engagement: We’ve all seen generic AI content that falls flat. With skilled prompting, marketers can guide an AI to produce copy that nails the brand voice, resonates with the target audience, and boosts engagement across emails, ads, and social media.
- Enhancing Customer Support: A chatbot with poorly designed prompts is a source of frustration. One guided by expertly engineered prompts can understand complex issues, demonstrate empathy, and resolve customer problems efficiently, reducing the load on human agents and improving customer satisfaction.

This infographic paints a clear picture of just how significant the demand for prompt engineers has become.

As the numbers show, this isn’t a passing trend. Prompt engineering is a foundational skill with significant economic and career momentum.

A Fast-Growing Career Path

The need for prompt engineers is not confined to the tech industry; it’s emerging across all sectors as businesses build their futures around AI. The global AI industry is projected to reach $1.81 trillion by 2030, and prompt engineering is a critical driver of that growth. Tech giants like OpenAI, Google, and Microsoft are actively hiring for these roles to refine their AI models and user experiences.

This explosive growth has made prompt engineering a lucrative career path, with top-tier salaries reaching as high as $335,000. For a deeper analysis of these trends, you can explore the global job market at Refon Telelearning.

As companies scale, they quickly discover that manual, ad-hoc prompt creation is inefficient and inconsistent. This is where specialized platforms become essential. For example, a Model Context Protocol (MCP) server like Context Engineer automates and structures the process by providing AI agents with the necessary context automatically. This elevates prompting from a manual craft to a reliable, scalable engineering discipline, directly impacting the company’s bottom line.

Fundamental Prompting Techniques You Can Use Today

Let’s move from theory to application. This is where artificial intelligence prompt engineering truly comes to life. Understanding the components of a good prompt is the first step, but knowing which technique to deploy for a specific situation is what separates beginners from professionals.

Think of these methods not as rigid rules but as versatile tools in your toolkit. Each one provides the AI with a unique form of guidance, and that subtle shift can dramatically alter the quality of the output. Let’s explore the core techniques you can start using immediately.

From Zero To Hero With Shot-Based Prompting

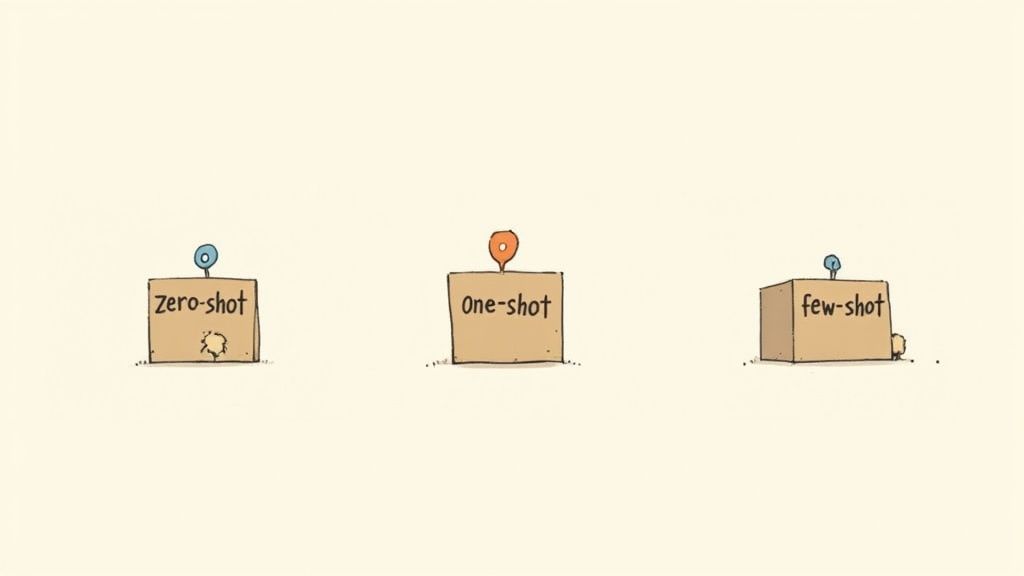

One of the most direct ways to steer an AI’s output is by controlling the number of examples you include in your prompt. This concept, known as “shot” prompting, is a foundational skill for every prompt engineer.

- Zero-Shot Prompting: This is the most basic approach. You give the AI a direct command with zero examples, relying entirely on its pre-existing training. It’s fast and simple, but for nuanced tasks, the results can be generic or inaccurate.
- One-Shot Prompting: Here, you provide a single, clear example of your desired output. This small piece of context is surprisingly powerful, as it shows the AI the exact format, tone, or structure you want it to replicate.
- Few-Shot Prompting: When you provide two or more examples, you give the AI a distinct pattern to follow. This is your go-to method for complex tasks, as it minimizes guesswork and helps the model understand the type of reasoning required. In fact, studies have shown that adding just a few examples can boost task accuracy by over 50%.

Let’s illustrate this with a simple sentiment analysis task.

Example Comparison

| Technique | Prompt |
|---|---|
| Zero-Shot | |
| One-Shot | |
| Few-Shot | |

See the difference? As you add examples, you’re no longer hoping the AI gets it right—you’re showing it how.
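As a rough sketch of how the three variants might look for the sentiment task, the Python snippet below builds a zero-shot, one-shot, and few-shot prompt from the same template. The function name, the instruction wording, and the sample reviews are all invented for illustration; they are not taken from any particular benchmark or product.

```python
from typing import Sequence


def build_sentiment_prompt(review: str,
                           examples: Sequence[tuple[str, str]] = ()) -> str:
    """Build a sentiment prompt; the number of examples sets the 'shot' count."""
    lines = ["Classify the sentiment of each review as "
             "Positive, Negative, or Neutral.", ""]
    for text, label in examples:  # each worked example is one "shot"
        lines += [f"Review: {text}", f"Sentiment: {label}", ""]
    lines += [f"Review: {review}", "Sentiment:"]  # the case to be answered
    return "\n".join(lines)


# Invented sample data, for illustration only.
EXAMPLES = [
    ("The battery lasts all day and charging is quick.", "Positive"),
    ("It stopped working after a week.", "Negative"),
    ("It arrived on Tuesday.", "Neutral"),
]
review = "Setup was confusing, but support sorted it out fast."

zero_shot = build_sentiment_prompt(review)               # no examples
one_shot = build_sentiment_prompt(review, EXAMPLES[:1])  # one example
few_shot = build_sentiment_prompt(review, EXAMPLES)      # three examples
print(few_shot)
```

The only thing that changes between the three prompts is how many labeled examples precede the final review, which is exactly the knob that shot-based prompting turns.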

Guiding the AI with Chain-of-Thought Prompting

For any problem that involves logic, math, or multiple steps, simply asking for the final answer is a gamble. The AI might rush to a conclusion and make an error. This is where Chain-of-Thought (CoT) prompting is indispensable.

The concept is incredibly simple: instruct the AI to “think step-by-step” or “show its work.”

This small instruction forces the model to slow down and decompose the problem into smaller, manageable parts. By externalizing its reasoning process, the AI is far more likely to follow a logical path to the correct answer. It’s like telling a math student to show their work—you can see their logic, and they’re less likely to make a careless mistake.

By guiding the model to externalize its reasoning process, Chain-of-Thought prompting can improve performance on complex arithmetic, commonsense, and symbolic reasoning tasks by a significant margin.

For instance, instead of asking, “If a train leaves Station A at 2 PM traveling at 60 mph, and Station B is 150 miles away, when will it arrive?” you’d get a much more reliable result by framing it this way:

"A train leaves Station A at 2 PM traveling at 60 mph. Station B is 150 miles away. First, calculate how long the journey will take. Then, calculate the arrival time. Show your steps."

This structured approach makes errors far less likely. This technique is a building block for many advanced applications, and you can learn more about how to structure AI inputs in our deep dive into Context Engineering methodologies.
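The train example can be sketched in Python: one helper wraps the question in the step-by-step instructions from the prompt above, and a second computes the reference answer so you can check the model's reasoning. The helper names are hypothetical, and the calendar date is arbitrary because only the time of day matters.

```python
from datetime import datetime, timedelta

QUESTION = ("A train leaves Station A at 2 PM traveling at 60 mph. "
            "Station B is 150 miles away.")


def with_chain_of_thought(question: str) -> str:
    """Append the step-by-step instructions used in the example prompt."""
    return (f"{question} First, calculate how long the journey will take. "
            "Then, calculate the arrival time. Show your steps.")


def expected_arrival(depart: datetime, distance_miles: float,
                     speed_mph: float) -> datetime:
    """Reference answer: travel time = distance / speed, added to departure."""
    hours = distance_miles / speed_mph  # 150 / 60 = 2.5 hours
    return depart + timedelta(hours=hours)


cot_prompt = with_chain_of_thought(QUESTION)
# The date is arbitrary; 14:00 is the 2 PM departure.
arrival = expected_arrival(datetime(2025, 1, 1, 14, 0), 150, 60)
print(cot_prompt)
print(arrival.strftime("%H:%M"))  # 16:30, i.e. 4:30 PM
```

Having the reference answer on hand lets you verify each step the model shows (2.5 hours of travel, then 2 PM plus 2.5 hours) rather than only the final line.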

Comparing Core Prompting Techniques

This table provides a quick-reference guide for choosing the right approach for your task.

| Technique | Core Idea | Best For |
|---|---|---|
| Zero-Shot | Give a direct command with no examples. | Simple, well-defined tasks like summarizing a short text or answering a factual question. |
| Few-Shot | Provide several examples of the desired input/output. | Complex formatting, style replication, or tasks requiring nuanced understanding. |
| Chain-of-Thought | Instruct the AI to explain its reasoning step-by-step. | Math problems, logic puzzles, multi-step planning, and any task where the process matters. |

Getting comfortable with these foundational methods gives you a solid base for improving your results with any AI. They’re the first and most important step toward moving from casual questions to precise, effective artificial intelligence prompt engineering.

Advanced Strategies for Complex AI Tasks

Once you’ve mastered the basics, you can tackle more sophisticated challenges. Simple prompts are great for one-off tasks, but what about problems that require multiple steps, access to real-time information, or perfectly structured outputs? This is where artificial intelligence prompt engineering evolves from writing instructions to designing complex strategies.

These advanced methods are designed to overcome an AI’s inherent limitations. For instance, a language model cannot browse a website or query a live database on its own. Advanced frameworks essentially grant the AI new capabilities, allowing it to solve problems far beyond the scope of its training data.

Unlocking Action with the ReAct Framework

One of the most powerful patterns for this is ReAct, which stands for Reason and Act. Think of it as giving the AI a cognitive loop and a set of tools. Instead of merely generating text, the ReAct pattern guides the model through a repeating cycle of thought and action.

Here’s how it works:

- Reason: The AI analyzes the problem and formulates a plan for what it needs to do next.
- Act: It then selects a “tool” from a predefined toolkit (like performing a web search or calling an API) to execute its plan.
- Observe: Finally, it processes the information obtained from the tool and uses it to inform its next step.

This loop continues until the AI has gathered all the necessary information to solve the original problem. If you ask, “What’s the current stock price for Company X and who is their CEO?”, a ReAct-powered agent recognizes it cannot answer this in one go. It reasons that it needs to perform two separate searches, executes them, and then synthesizes the results into a single, cohesive answer. This transforms the AI from a passive text generator into an active problem-solver.
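The Reason, Act, Observe cycle can be sketched as a small Python loop. This is a toy under stated assumptions: `scripted_model` is a hard-coded stand-in for a real LLM call, the two tools return canned data (the price and the CEO name are made up), and the `Action: tool[input]` text format is just one convention chosen for this example.

```python
import re


# Stub tools with canned data; a real agent would call a search API here.
def search_stock_price(company: str) -> str:
    return {"Company X": "123.45 USD"}.get(company, "unknown")


def search_ceo(company: str) -> str:
    return {"Company X": "Jane Doe"}.get(company, "unknown")


TOOLS = {"stock_price": search_stock_price, "ceo": search_ceo}


def scripted_model(transcript: str) -> str:
    """Stand-in for an LLM: picks the next step from what was observed so far."""
    seen = transcript.count("Observation:")
    if seen == 0:
        return "Thought: I need the stock price.\nAction: stock_price[Company X]"
    if seen == 1:
        return "Thought: Now I need the CEO.\nAction: ceo[Company X]"
    price, ceo = re.findall(r"Observation: (.+)", transcript)
    return ("Thought: I have both facts.\n"
            f"Final Answer: Company X trades at {price}; its CEO is {ceo}.")


def react(question, model, tools, max_steps=5):
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        step = model(transcript)                        # Reason
        transcript += "\n" + step
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip()
        match = re.search(r"Action: (\w+)\[(.+?)\]", step)
        if match is None:
            raise ValueError("model produced neither an action nor an answer")
        name, arg = match.groups()
        observation = tools[name](arg)                  # Act
        transcript += f"\nObservation: {observation}"   # Observe
    raise RuntimeError("no final answer within max_steps")


answer = react("What's the current stock price for Company X and who is "
               "their CEO?", scripted_model, TOOLS)
print(answer)
```

The `max_steps` cap is a deliberate design choice: because the loop is driven by model output, a hard limit keeps a confused model from cycling forever.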

Generating Structured Data and Adopting Personas

Beyond reasoning, many business applications require AI to deliver data in a clean, machine-readable format. No one wants to manually copy and paste information from a paragraph into a spreadsheet. A much smarter approach is to instruct the AI to produce structured data directly.

With a clear example and a few instructions, you can get an AI to output perfectly formatted JSON, CSV, or XML. This is incredibly practical for tasks like:

- Extracting key details from customer support tickets into a neat table.
- Converting unstructured product descriptions into clean JSON for a database.
- Summarizing a dense financial report into a simple CSV for analysis.
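For the support-ticket case, a minimal sketch might pair a prompt that requests JSON with a parser that validates the reply before anything downstream uses it. The field names, the prompt wording, and the sample reply are assumptions made for illustration; only Python's standard `json` module is used.

```python
import json

# Hypothetical schema for this example.
REQUIRED_KEYS = {"customer", "product", "issue", "priority"}


def build_extraction_prompt(ticket: str) -> str:
    """Ask for a single JSON object and show the expected shape."""
    shape = {"customer": "...", "product": "...", "issue": "...",
             "priority": "low|medium|high"}
    return ("Extract the fields below from the support ticket. "
            "Respond with a single JSON object and nothing else.\n"
            f"Format: {json.dumps(shape)}\n\nTicket: {ticket}")


def parse_reply(reply: str) -> dict:
    """Parse the model's reply and reject anything missing a required field."""
    data = json.loads(reply)  # raises a ValueError subclass on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_KEYS - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data


# A reply a model might plausibly return; invented for this example.
reply = ('{"customer": "Dana", "product": "Router R2", '
         '"issue": "drops Wi-Fi hourly", "priority": "high"}')
record = parse_reply(reply)
print(record["priority"])  # high
```

Validating the reply matters because a model can return prose, partial JSON, or missing fields even when the prompt is clear; failing loudly is usually safer than passing bad data to a database.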

Another powerful technique is assigning a detailed persona. A basic persona merely sets the tone (e.g., “Act like a pirate”). An advanced persona, however, provides a deep professional context that shapes the entire response.

Prompt: “You are a Senior Marketing Analyst for a B2B SaaS company that sells cybersecurity solutions. Your target audience is CISOs at Fortune 500 companies. Your tone must be authoritative, data-driven, and focused on ROI and risk mitigation.”

This level of detail makes a world of difference. The AI isn’t just playing a role; it’s adopting a complete professional identity, which guides every single word it generates. If you want to dive deeper, our library of LLM techniques has plenty more examples.
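In chat-style interfaces, a detailed persona is often supplied once as a system message so that it applies to every later request. The sketch below assumes the common role/content message layout used by several chat APIs; the `build_messages` helper and the sample request are hypothetical, and no API call is made.

```python
PERSONA = (
    "You are a Senior Marketing Analyst for a B2B SaaS company that sells "
    "cybersecurity solutions. Your target audience is CISOs at Fortune 500 "
    "companies. Your tone must be authoritative, data-driven, and focused on "
    "ROI and risk mitigation."
)


def build_messages(persona: str, request: str) -> list[dict[str, str]]:
    """Put the persona in the system slot and the task in the user slot."""
    return [
        {"role": "system", "content": persona},
        {"role": "user", "content": request},
    ]


messages = build_messages(
    PERSONA,
    "Draft a three-sentence summary of our Q3 phishing-defense results.",
)
for message in messages:
    print(message["role"], "->", message["content"][:60])
```

Separating the persona from the request means you can reuse the same professional identity across many tasks without retyping it.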

As you begin combining these techniques—blending complex personas with multi-step reasoning and structured outputs—managing it all in a simple text file becomes untenable. Keeping the context, documents, and instructions organized is not just difficult; it’s a recipe for inconsistency and failure.

This is precisely why dedicated platforms are crucial. Tools like the Context Engineer MCP are built to manage this complexity. Instead of juggling disparate text files, you gain a structured environment to build, test, and deploy sophisticated prompts at scale. It’s how you ensure your most demanding AI applications are consistent, reliable, and maintainable.

What’s Next for Prompting and AI Automation?

The field of prompt engineering is evolving at an incredible pace. Initially, the focus was on meticulously hand-crafting the perfect instruction. Now, we are witnessing a significant shift toward smarter, automated systems where the AI actively participates in improving its own prompts.

This evolution is driven by the need for scale. As businesses integrate AI into larger, more complex workflows, manually writing and testing every prompt becomes a major bottleneck. The future lies in building systems that can self-optimize, learn from past performance, and even generate novel prompts to address new challenges.

The Dawn of Self-Optimizing Prompts

The next major leap is enabling AI models to refine prompts autonomously. Imagine a system that analyzes vast datasets of prompt-response pairs, identifying the patterns that lead to successful outcomes. It learns which phrasing is most effective, what context is critical, and how to structure a request for maximum accuracy.

This creates a powerful feedback loop that continuously improves over time:

- Smarter Prompts: The AI begins generating more effective instructions.
- Better Outputs: These improved prompts lead to more accurate and useful AI responses.
- More Learning Data: The successful interactions provide high-quality examples for the next iteration of learning.

This type of automated refinement is key to building AI agents that can adapt in real-time and perform reliably without constant human supervision.
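One small piece of such a feedback loop, choosing the best-performing prompt variant from logged outcomes, can be sketched in a few lines of Python. The log entries below are invented, and a real system would also need a way to judge success and to generate new variants (typically another model call), neither of which is shown here.

```python
from collections import defaultdict

# Hypothetical log of (prompt variant, was the response judged successful?).
LOG = [
    ("Summarize this.", False),
    ("Summarize this.", True),
    ("Summarize this in 3 bullet points for an executive.", True),
    ("Summarize this in 3 bullet points for an executive.", True),
    ("Summarize this in 3 bullet points for an executive.", False),
    ("Summarize this in 3 bullet points for an executive.", True),
]


def success_rates(log):
    """Return the fraction of successful responses for each prompt variant."""
    totals = defaultdict(lambda: [0, 0])  # variant -> [successes, trials]
    for variant, ok in log:
        totals[variant][0] += int(ok)
        totals[variant][1] += 1
    return {variant: wins / trials
            for variant, (wins, trials) in totals.items()}


def best_variant(log):
    """Pick the variant with the highest observed success rate."""
    rates = success_rates(log)
    return max(rates, key=rates.get)


rates = success_rates(LOG)
print(rates)
print(best_variant(LOG))
```

With so few trials per variant the ranking is noisy, so a production system would want far more data before retiring a prompt.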

Market Growth and Economic Impact

This transition from manual to automated prompting is not just a technical trend; it’s fueling a massive economic shift. The demand for efficient, scalable AI solutions is causing the prompt engineering market to explode.

As of 2025, the global prompt engineering market is valued at approximately $505 billion. It is projected to skyrocket to $6.53 trillion by 2034, reflecting a compound annual growth rate (CAGR) of nearly 32.9%. This incredible expansion is driven by breakthroughs in generative AI and the push for digitalization across every industry, from healthcare and finance to software development. The AI’s ability to self-optimize prompts is a huge part of this growth. You can read more about the vital role of prompt engineering in AI-driven marketing to see it in action.
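As a quick sanity check on how these figures fit together, the compound-growth arithmetic can be reproduced in Python. The inputs are the numbers quoted above (in billions of US dollars); the function names are illustrative, and the check says nothing about whether the underlying market estimate is accurate.

```python
def project(value: float, rate: float, years: int) -> float:
    """Compound growth: value * (1 + rate) ** years."""
    return value * (1 + rate) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Growth rate that takes `start` to `end` over `years` periods."""
    return (end / start) ** (1 / years) - 1


YEARS = 2034 - 2025  # nine compounding periods

projected = project(505, 0.329, YEARS)   # billions of USD
cagr = implied_cagr(505, 6530, YEARS)

print(f"{projected:,.0f} billion")  # roughly 6.53 trillion
print(f"{cagr:.1%}")                # close to 32.9%
```

Growing $505 billion at 32.9% per year for nine years does land near $6.53 trillion, so the three quoted numbers are internally consistent.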

This explosive growth makes one thing clear: mastering prompt engineering isn’t just about learning a new skill. It’s an investment in a fundamental capability that will shape the future of business and technology.

Automation Needs a Solid Foundation

To achieve this level of automation, we must move beyond simple text boxes. When your prompt strategies involve multiple techniques, external data sources, and complex decision-making, a structured environment is essential for management. This is where dedicated platforms demonstrate their value.

For teams building serious AI applications, a tool like the Context Engineer MCP provides the necessary framework to handle this complexity. Instead of a person manually feeding the AI context for every request, the platform can automatically supply the correct background information, architectural details, or project-specific knowledge. It transforms prompt engineering from a craft into a systematic, scalable discipline. This is how we achieve true AI automation, where an AI can handle complex jobs with minimal human guidance. The future is less about one-off commands and more about engineering intelligent, autonomous systems.

Putting Your Prompt Engineering Skills into Practice

Let’s bring this theory into the real world. Mastering prompt engineering is less like learning a technical skill and more like developing a craft. It’s a blend of art and science—combining creative thinking, logical precision, and iterative refinement.

This is where you transition from someone who merely uses AI to someone who actively directs it. You become the conductor, guiding the model to produce the exact output you envision. The core principle is simple: the quality of the output is a direct reflection of the quality of the input.

Your Prompting Best Practices Checklist

As you begin crafting your own prompts, keep these fundamental principles in mind. Think of this as your pre-flight checklist for every AI interaction.

- Be Specific and Direct: Avoid ambiguity. Clearly state what you want. Vague instructions lead to vague results.
- Provide Rich Context: Give the model the “why” behind your request. Background information helps it understand your true intent and fill in the gaps correctly.
- Use Examples (Few-Shot Prompting): Show, don’t just tell. A couple of solid examples of the desired output are often more effective than a lengthy description.
- Assign a Persona: Tell the AI who to be. “Act as a skeptical financial analyst” or “You are a friendly, encouraging personal trainer” will fundamentally change the tone and focus of the response.
- Iterate and Refine: Your first attempt is rarely perfect. Analyze the AI’s output, identify what went wrong (or what could be improved), and tweak your prompt accordingly. This iterative process is the most critical step to mastery.

Once you start applying these techniques to more than simple Q&A, you’ll see how a structured approach pays off. For instance, when you’re trying to build something complex like an AI coding assistant tool, managing all the different prompts and context by hand gets messy, fast.

This is exactly why platforms like the Context Engineer MCP exist. They provide a structured environment to build, test, and fine-tune your prompting strategies in an organized and scalable way. It helps you manage complexity so your AI agents can perform reliably every time.

Now, it’s your turn. Take these concepts and start building. The only way to truly learn is by doing.

Still Have Questions About AI Prompt Engineering?

You’re not alone. As this field explodes, it’s natural to have questions. Here are answers to some of the most common ones, breaking down the essentials to give you a clearer picture.

Is Prompt Engineering a Real Career?

Absolutely, and it’s booming. Prompt engineering has rapidly emerged as a legitimate, high-demand career. Companies across technology, finance, marketing, and creative industries are actively hiring skilled prompt engineers to maximize the value of their AI investments.

These roles are not just about writing simple questions; they involve designing, testing, and optimizing complex instruction sets that drive everything from customer service bots to AI-driven content pipelines. Don’t be surprised to see salaries for senior prompt engineers surpassing those of traditional software engineering roles—the specialized skill set is that valuable.

Do I Need to Know How to Code?

Not necessarily to get started, but it provides a significant advantage. At its core, much of prompt engineering revolves around language, logic, and creative problem-solving. You can achieve impressive results without writing a single line of code.

However, when you want to build robust, automated systems, coding skills become essential. Connecting an AI to a company’s database via an API, automating prompt variations, or engineering complex agentic workflows requires technical proficiency. A combination of linguistic and technical skills is the ideal profile for an advanced prompt engineer.

How Is This Different From Just Talking to an AI?

Think of it this way: anyone can ask an AI a question, but a prompt engineer crafts a precise instruction. A casual chat will yield a generic, surface-level answer. AI prompt engineering, on the other hand, is a discipline focused on achieving specific, reliable, and high-quality results consistently.

It’s the difference between asking a friend “what’s a good car?” and providing a mechanic with a detailed specification sheet for a custom build. We use structured methods like few-shot examples and Chain-of-Thought prompting to guide the AI’s logic, then iterate and refine until the output meets exacting standards.

What’s the Best Way to Start Learning?

Jump in and get your hands dirty. The most effective way to learn is by doing. Choose a powerful model like GPT-4 or Claude and start experimenting with the techniques covered in this guide. Provide clear context, use examples, define constraints, and assign personas.

Observe how minor changes in your wording can completely alter the outcome. This hands-on experimentation is where the real learning happens. Once you’re comfortable with the basics, consider exploring platforms designed specifically for this purpose. A structured tool can make building and testing complex prompts far more efficient and manageable.

Ready to go beyond simple prompts and build something powerful and scalable? Context Engineering offers the professional framework you need to design, test, and launch sophisticated AI agents with confidence. See how our MCP can bring structure to your projects at https://contextengineering.ai.